How to create an Application Package

Here is a short explanation of how to create an application package.

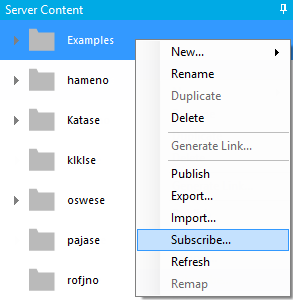

Create a new package by right-clicking and selecting New - Package.

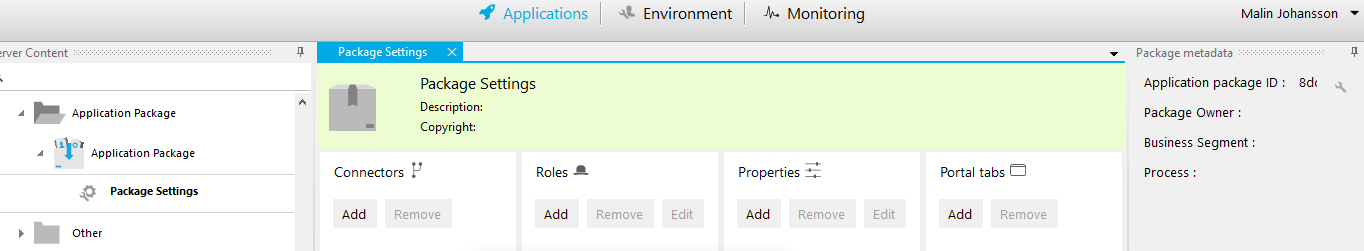

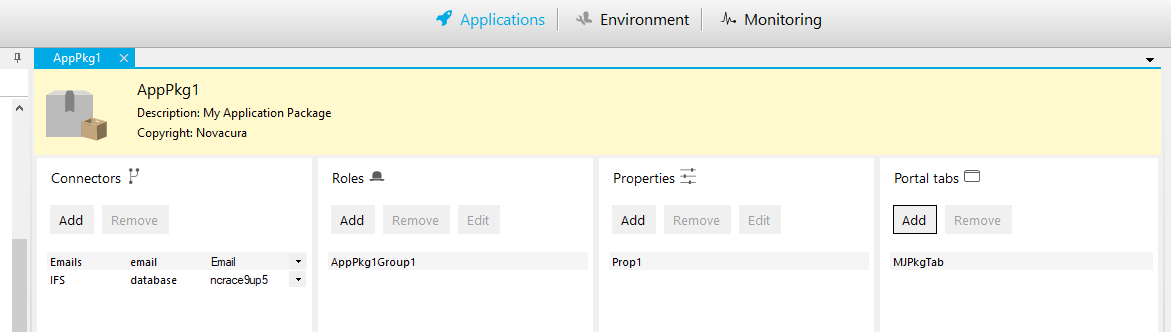

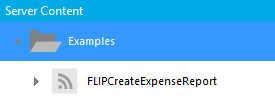

The package folder and package settings are created.

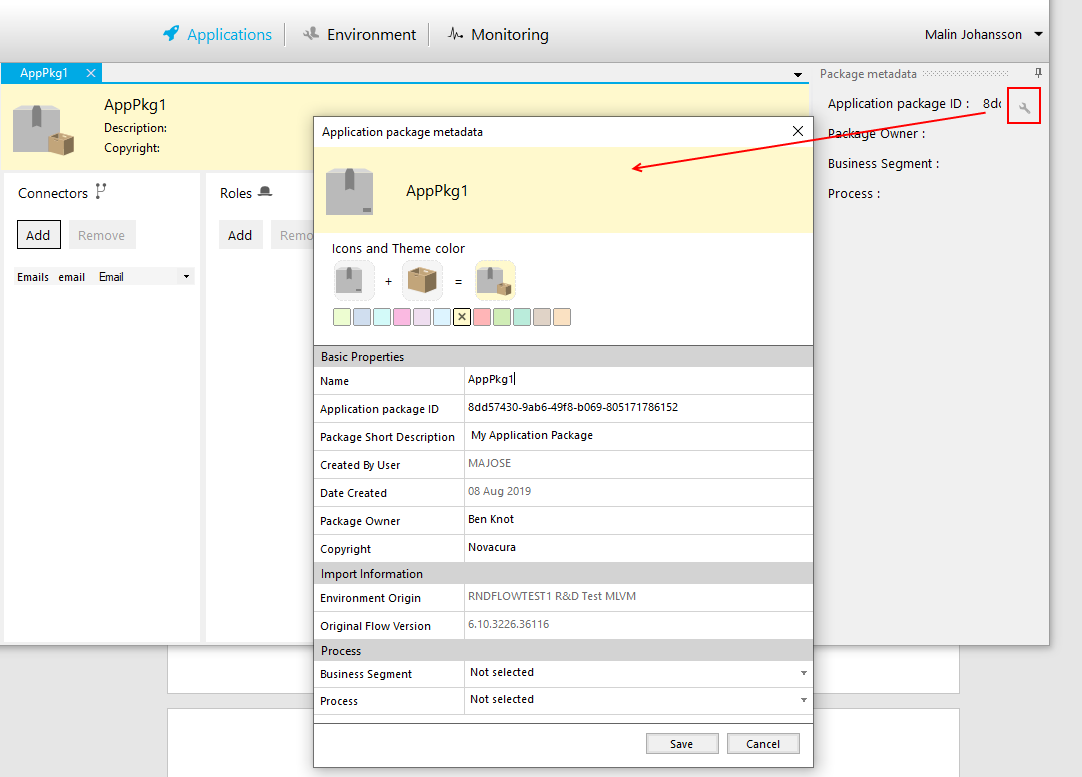

Change the package metadata.

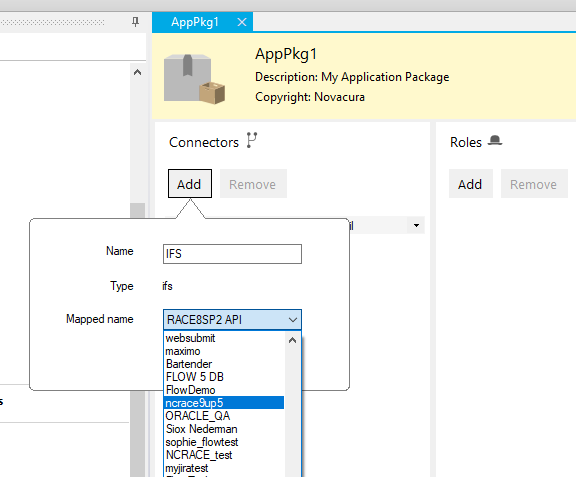

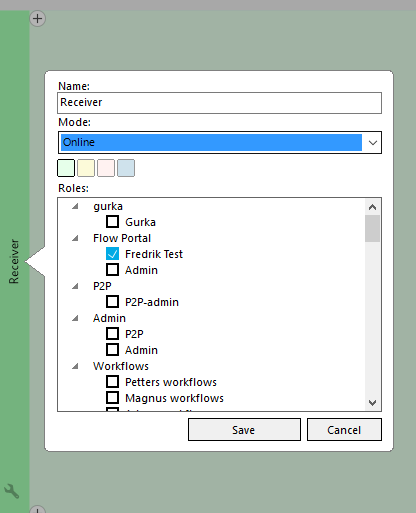

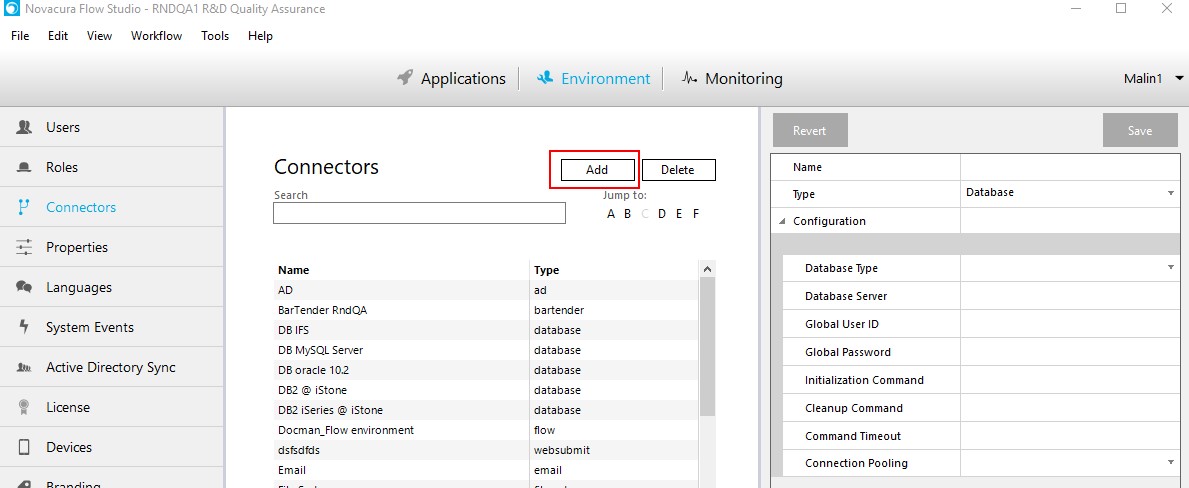

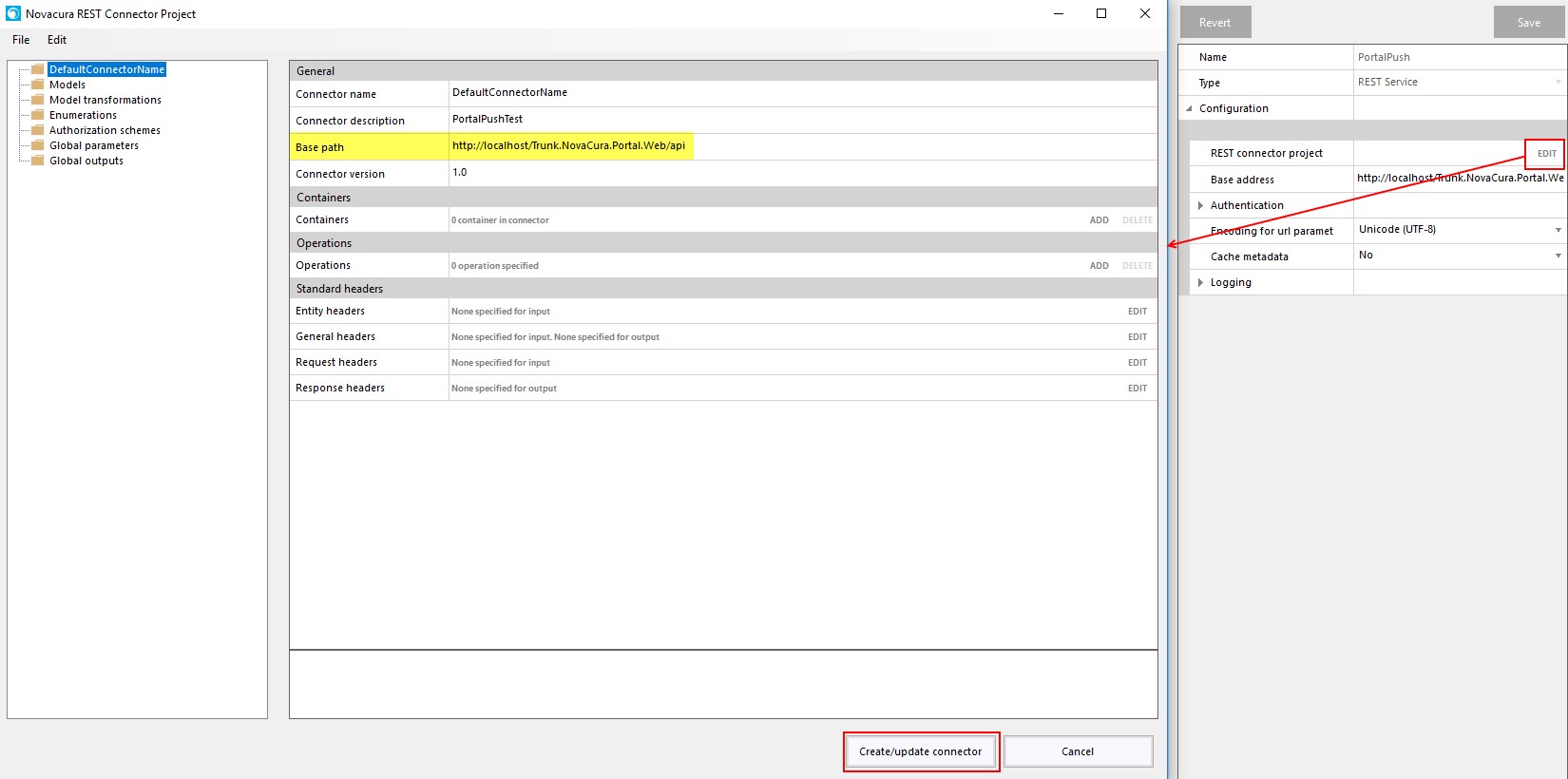

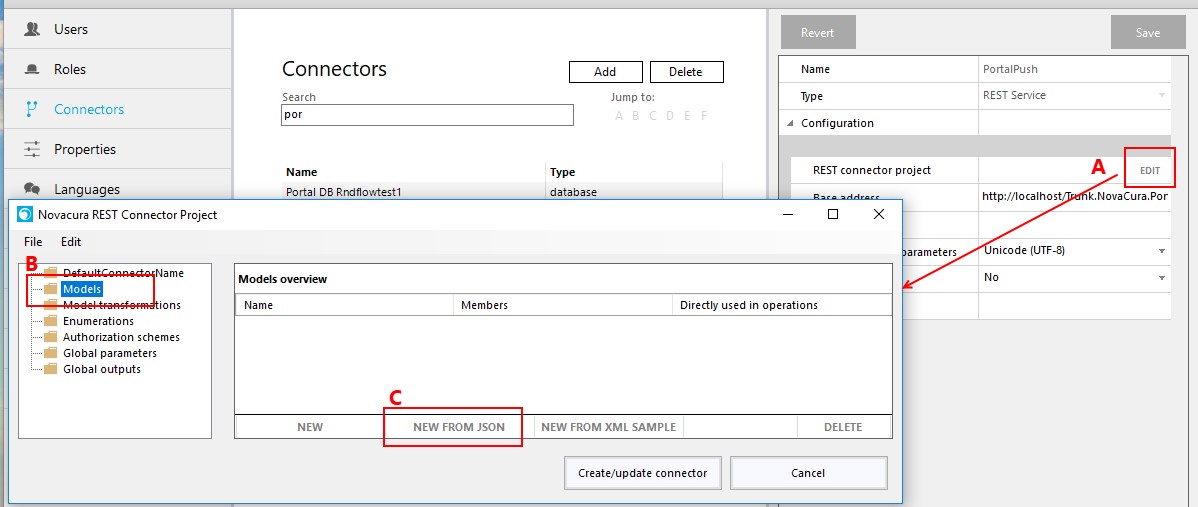

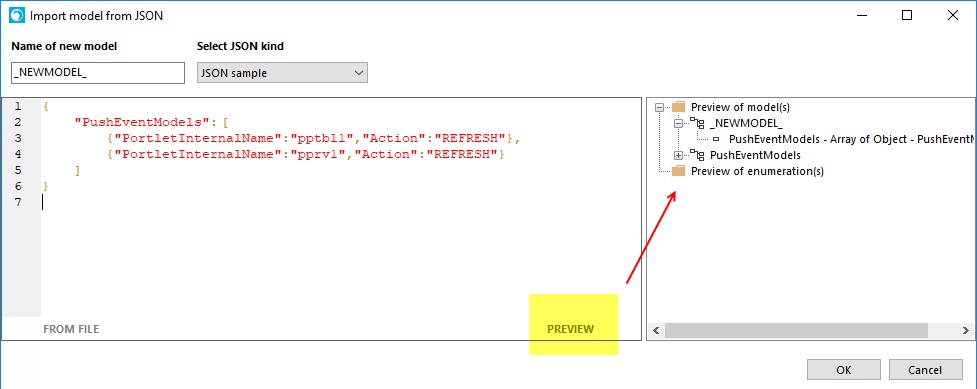

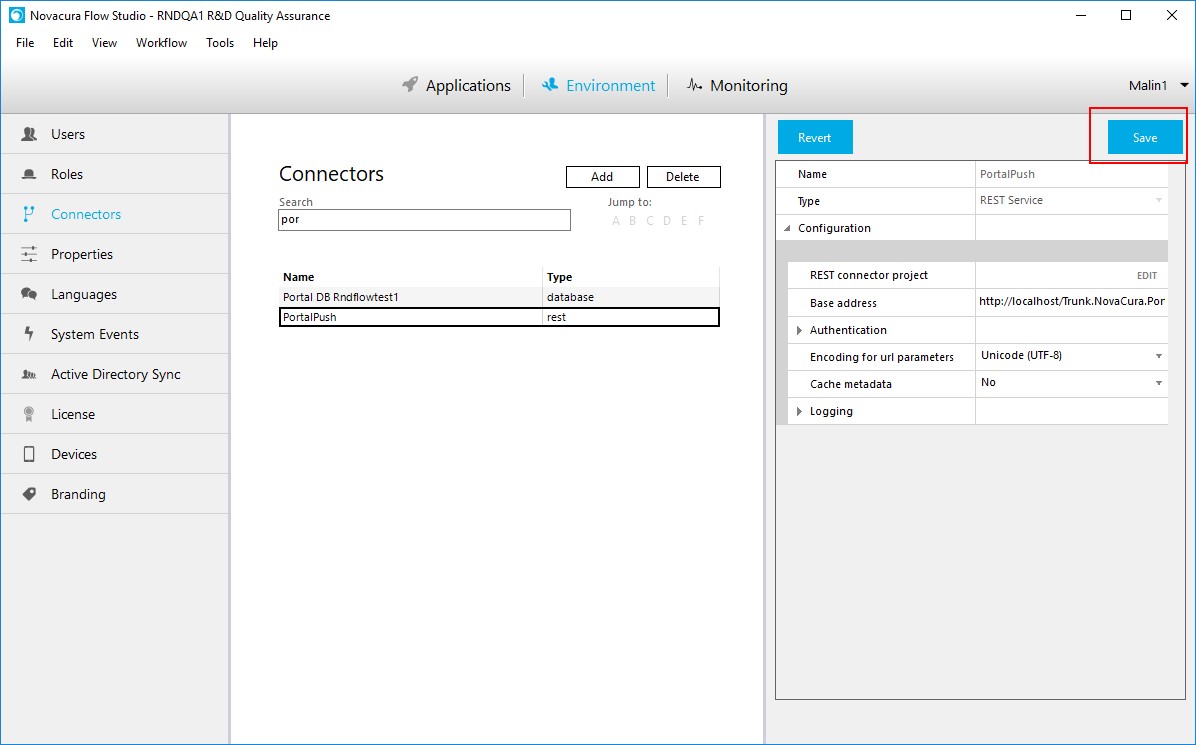

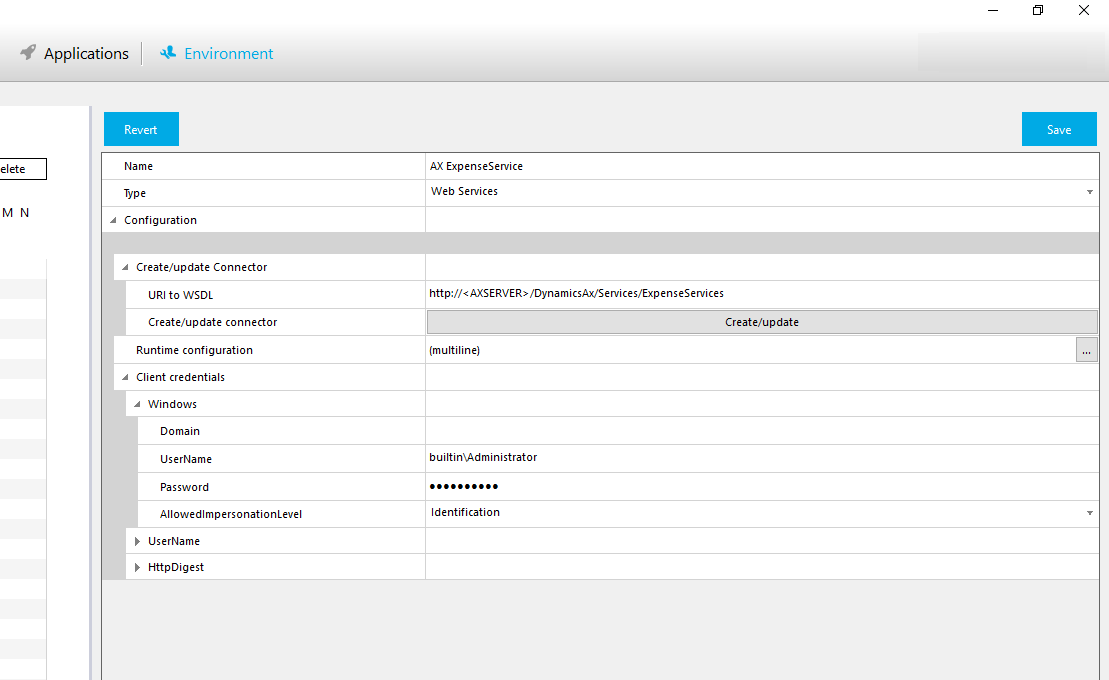

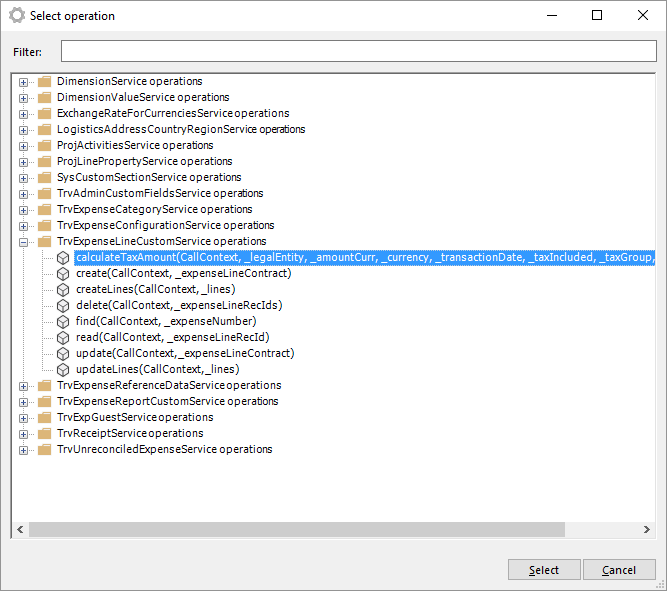

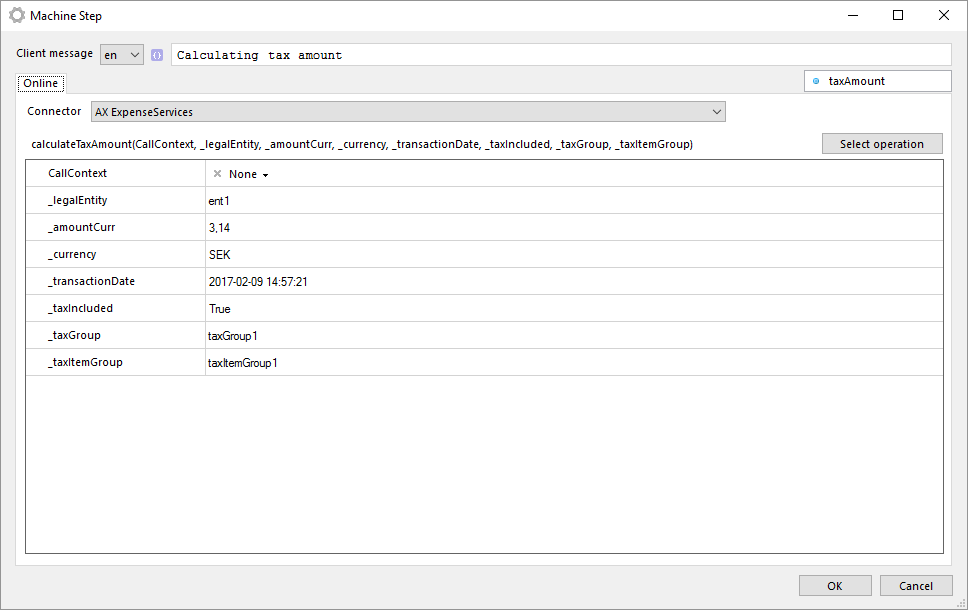

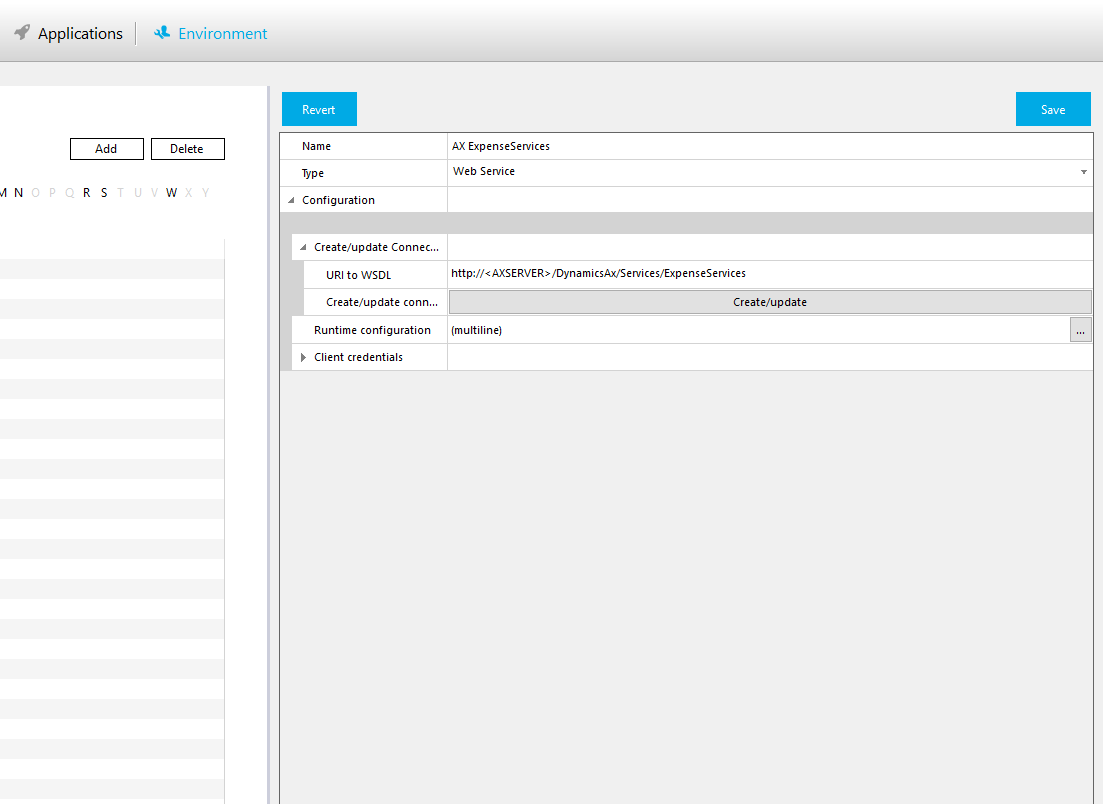

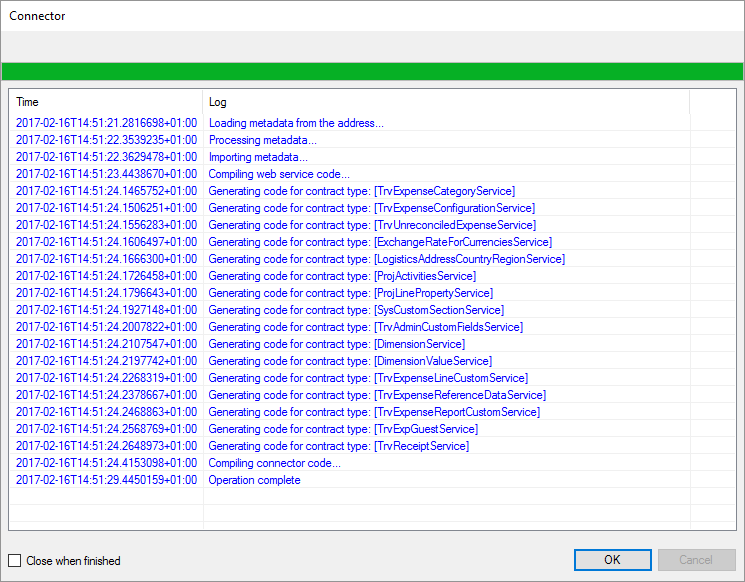

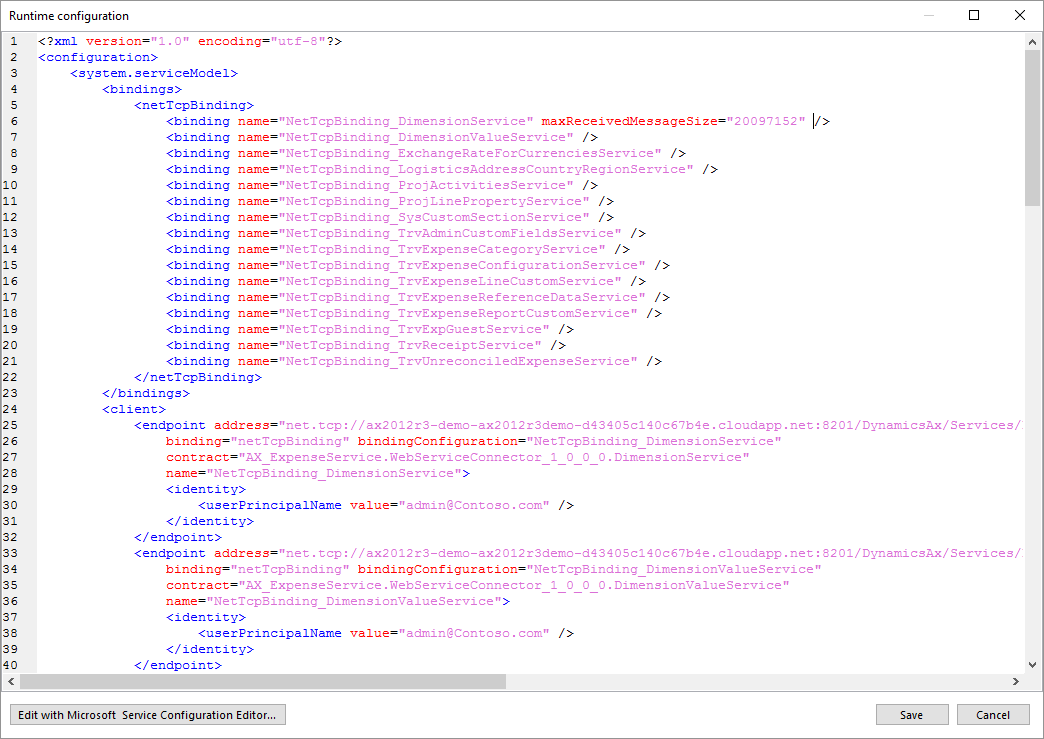

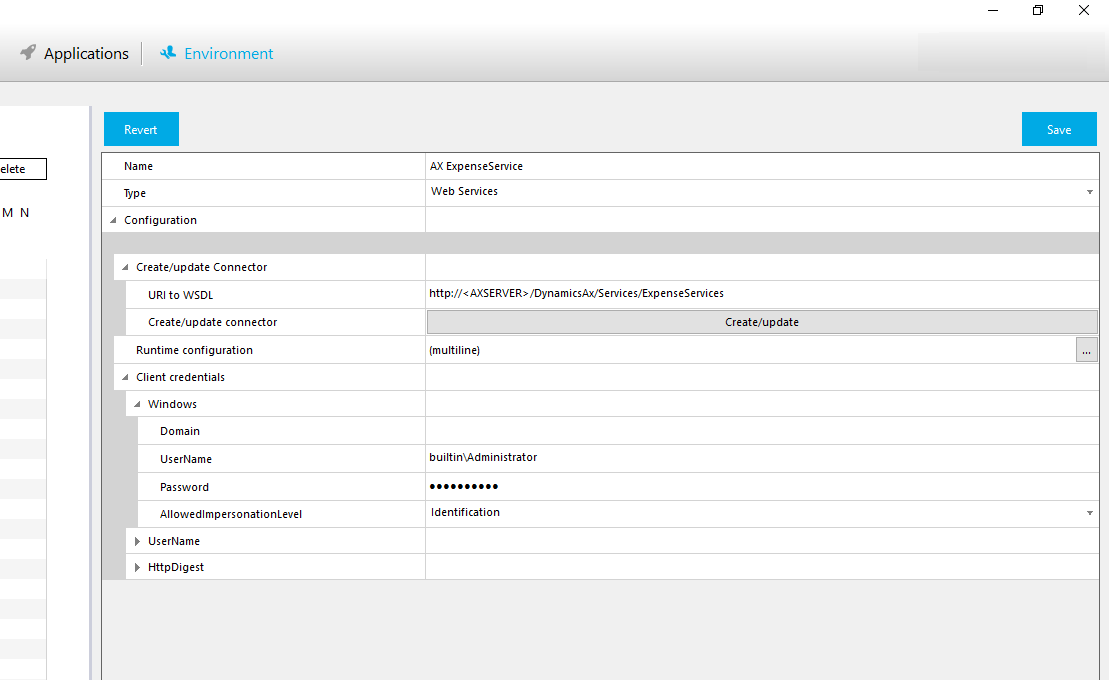

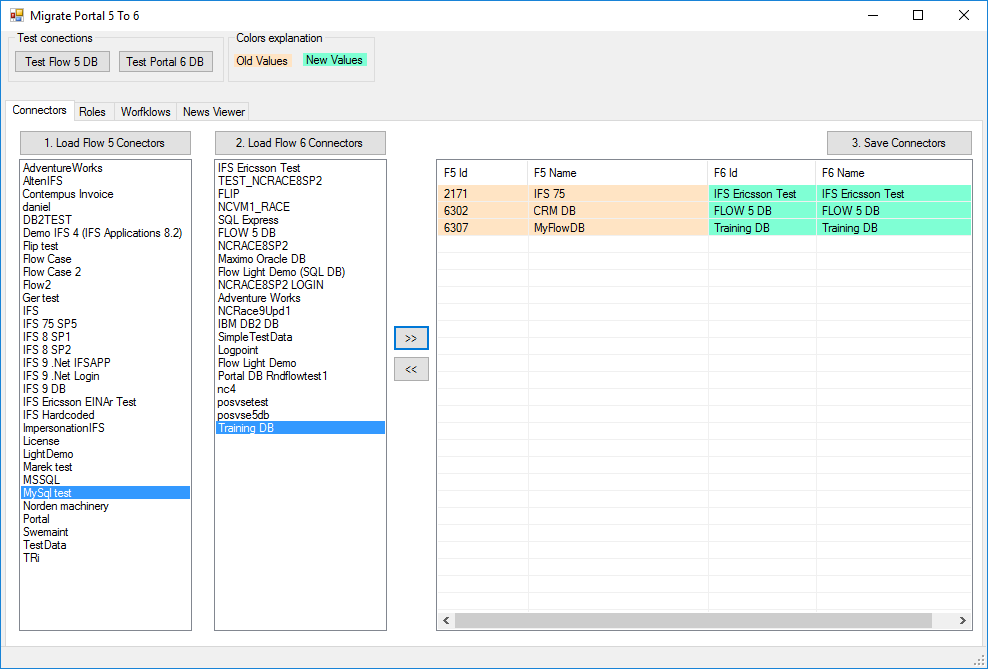

Add connectors.

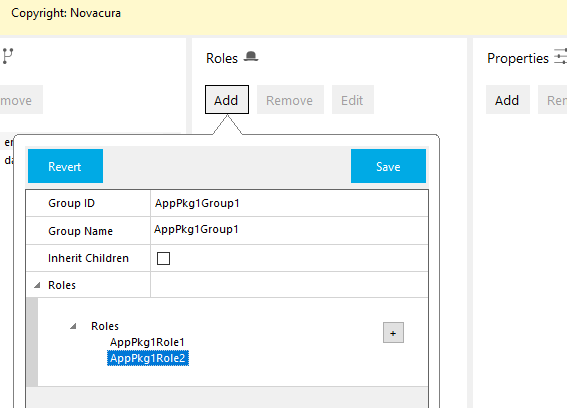

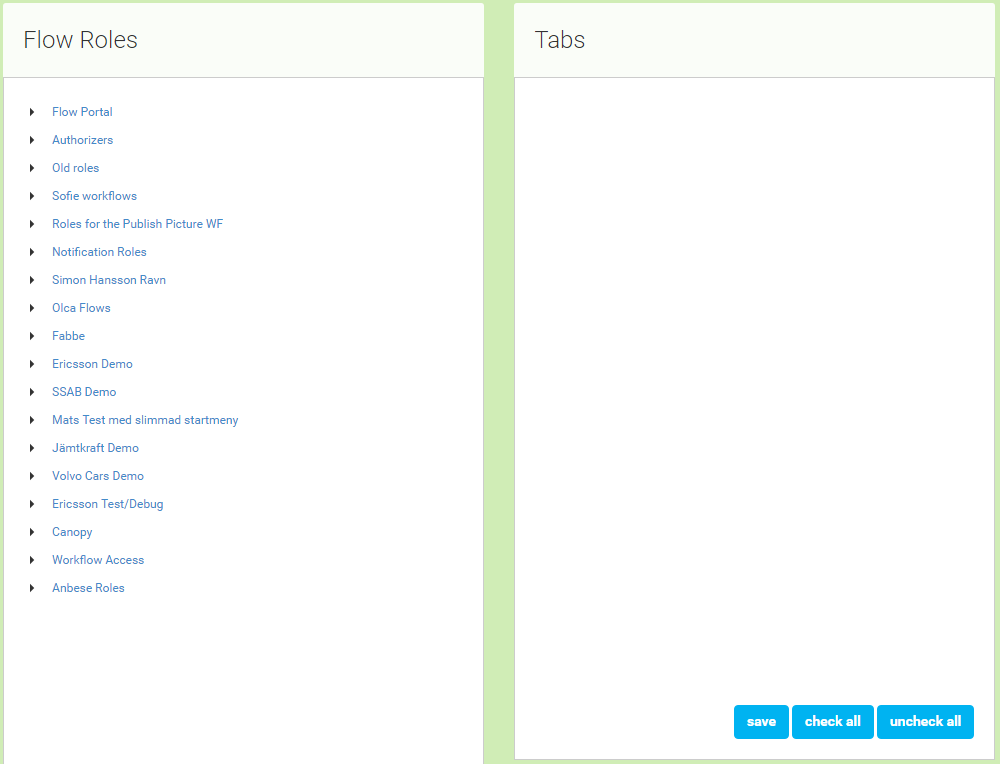

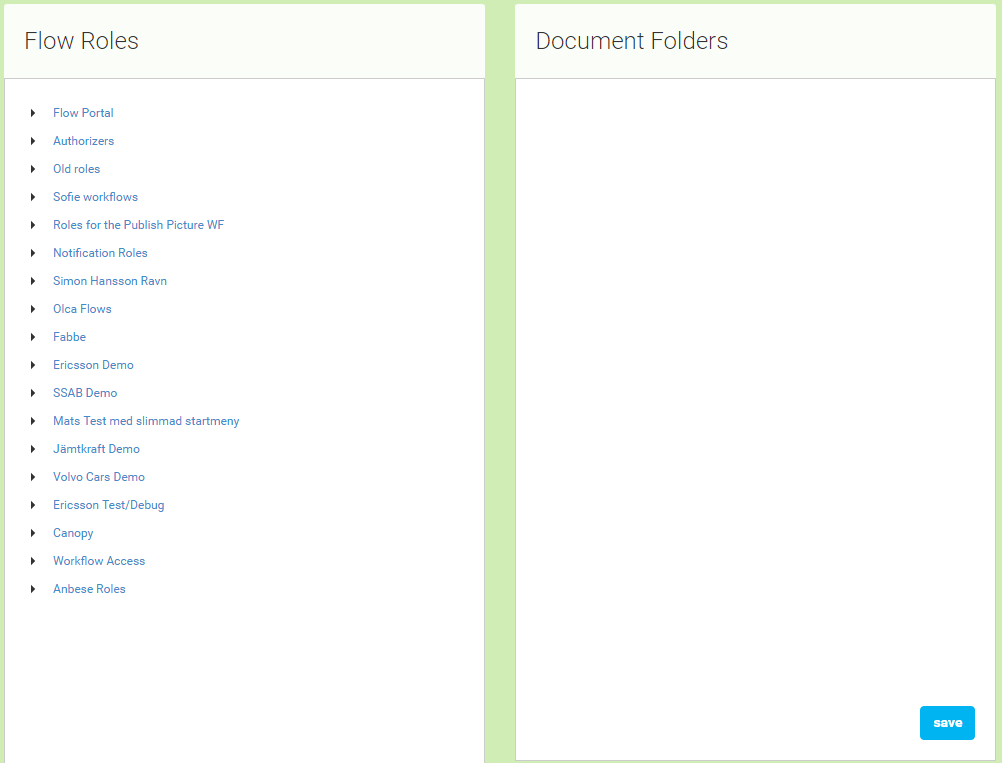

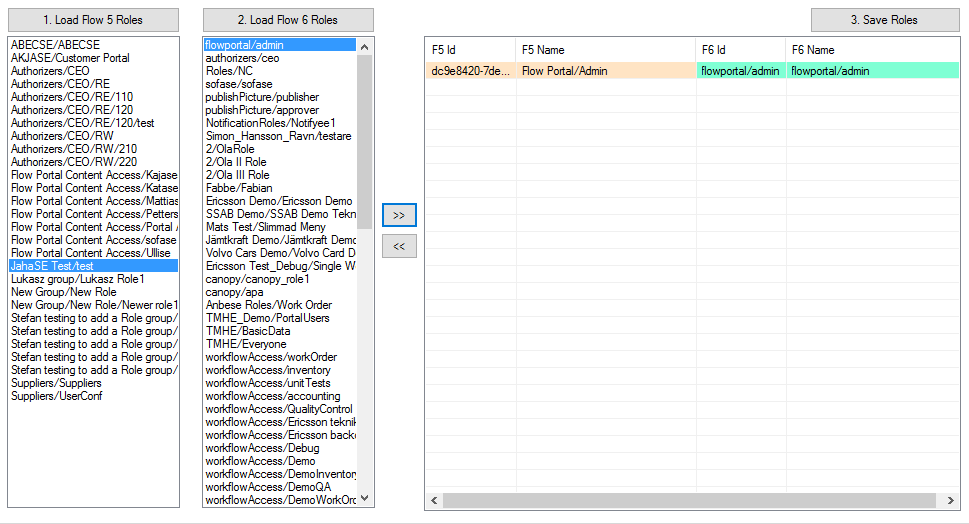

Add roles.

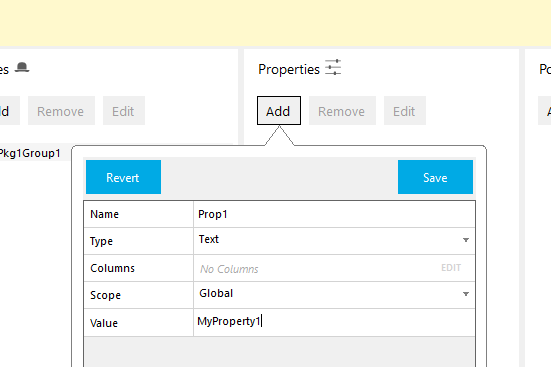

Add properties.

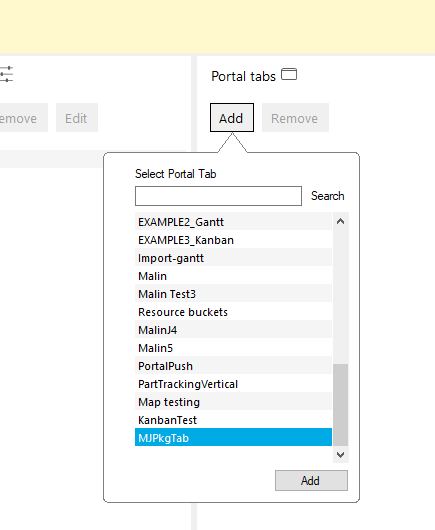

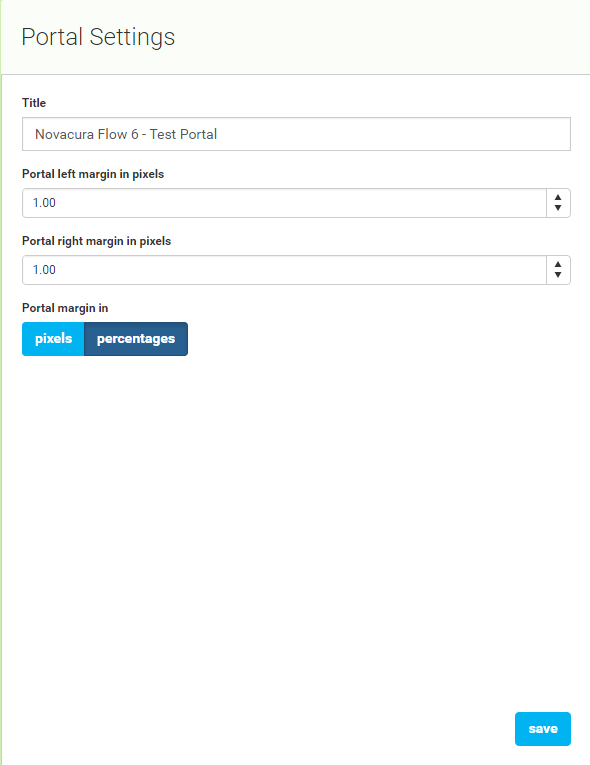

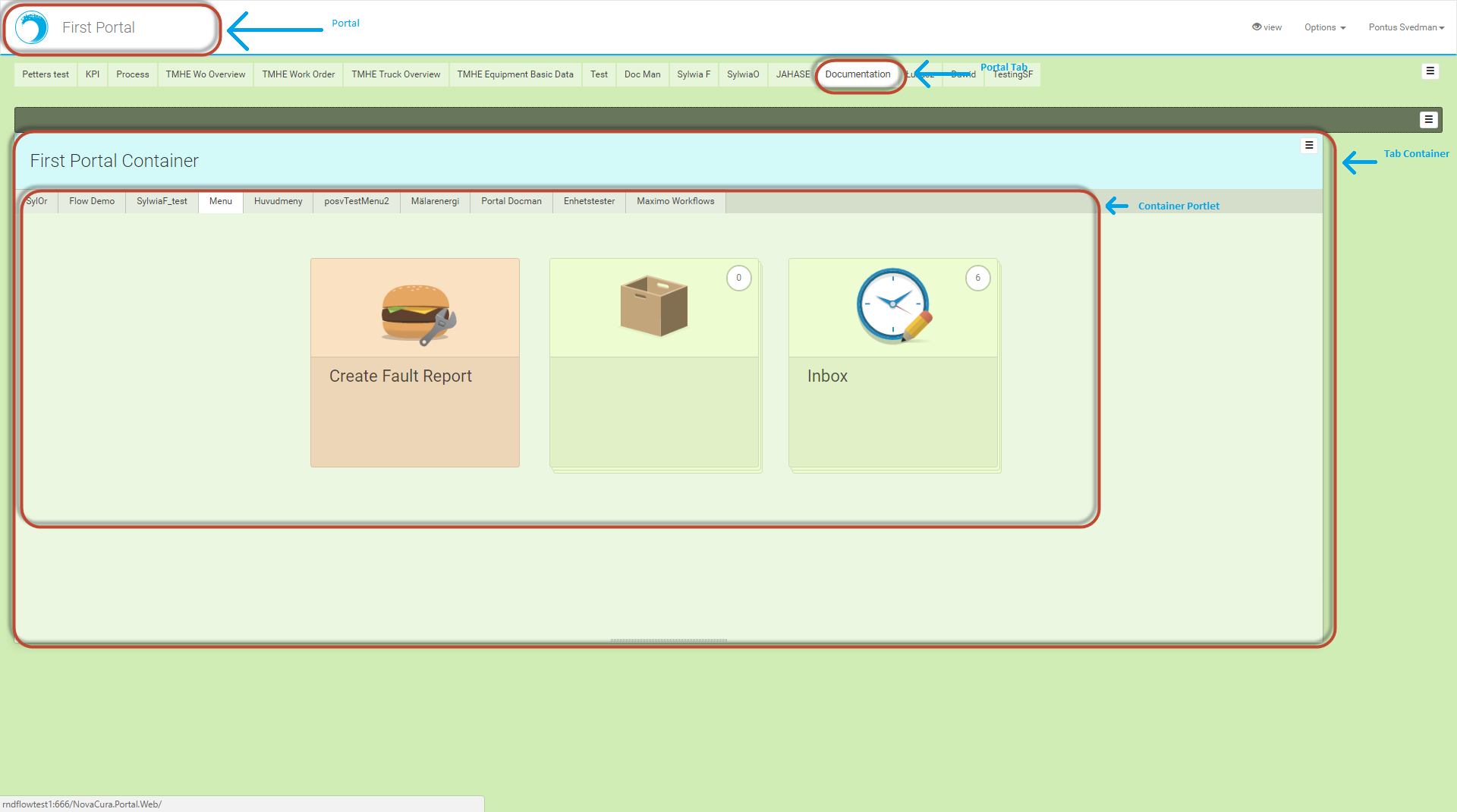

Add portal tabs.

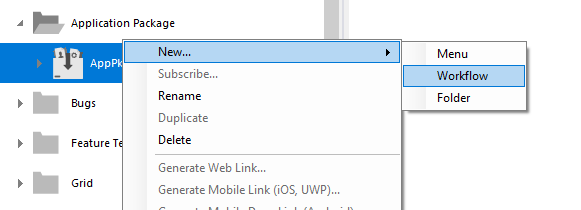

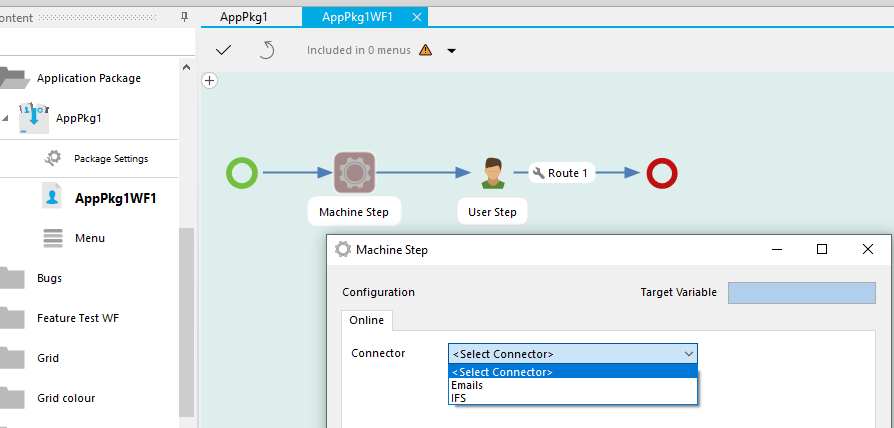

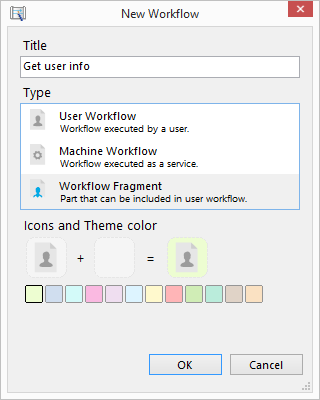

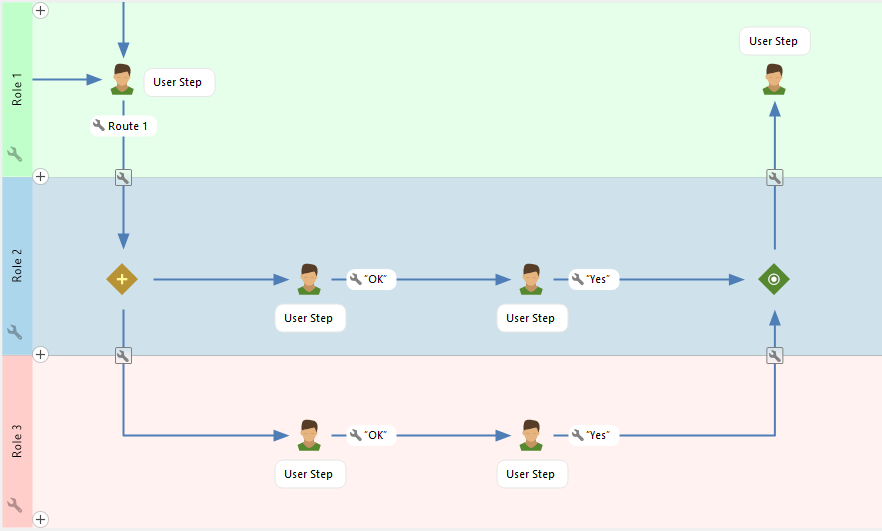

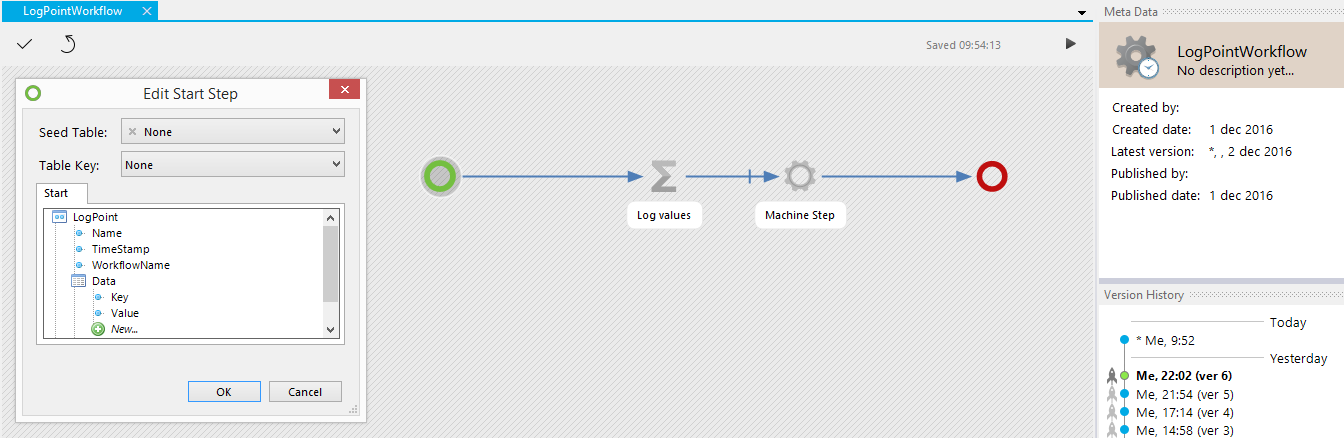

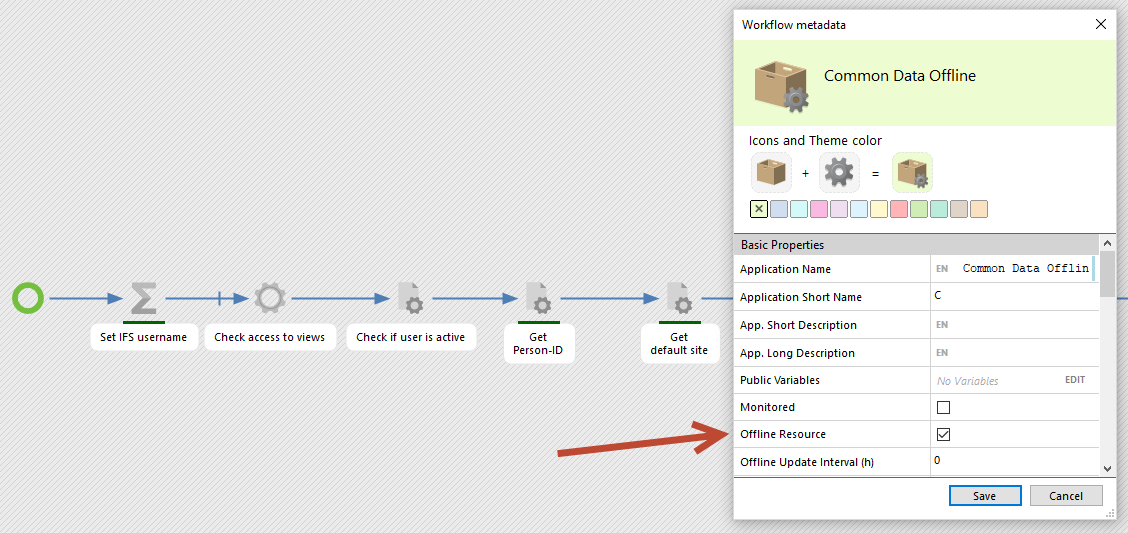

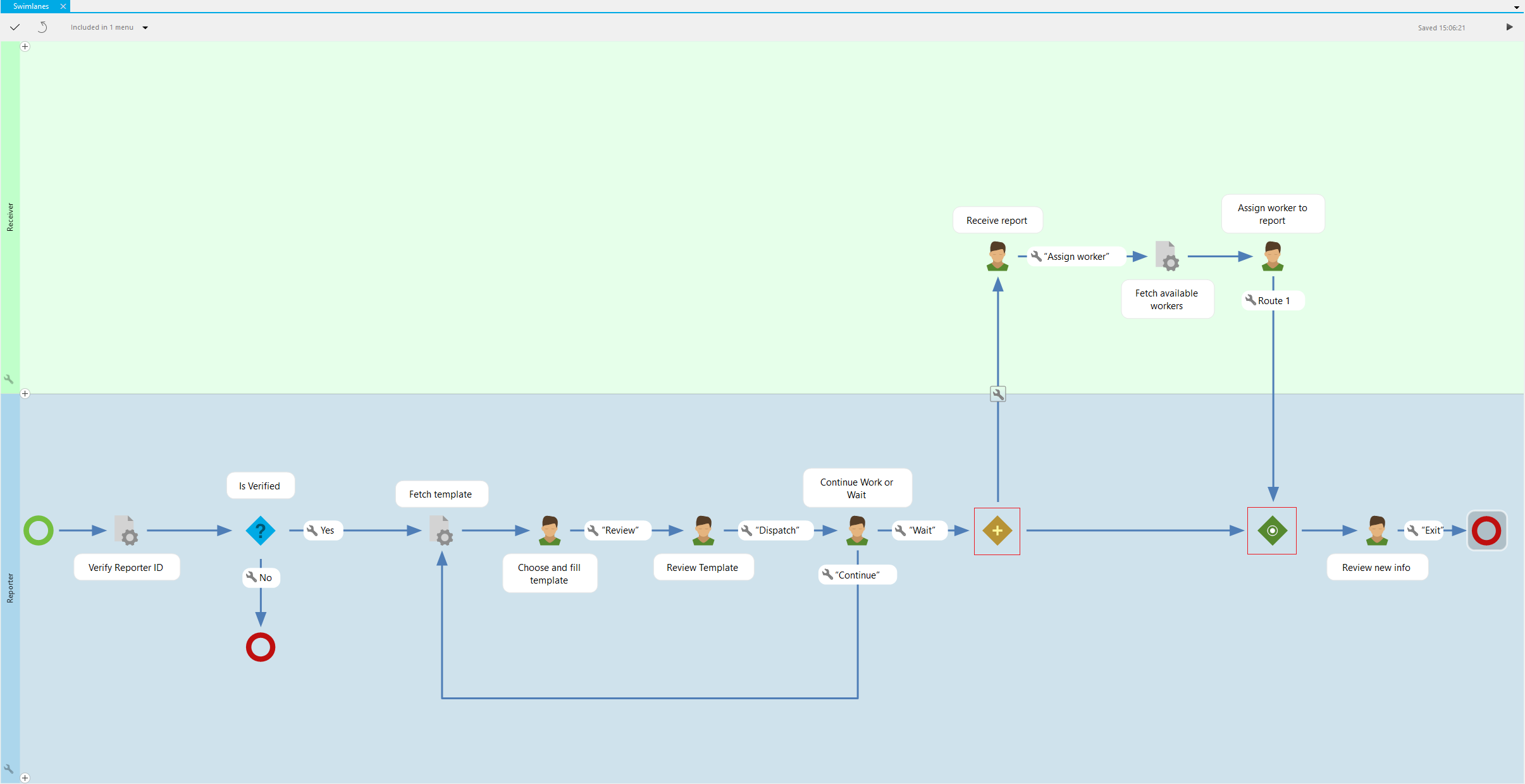

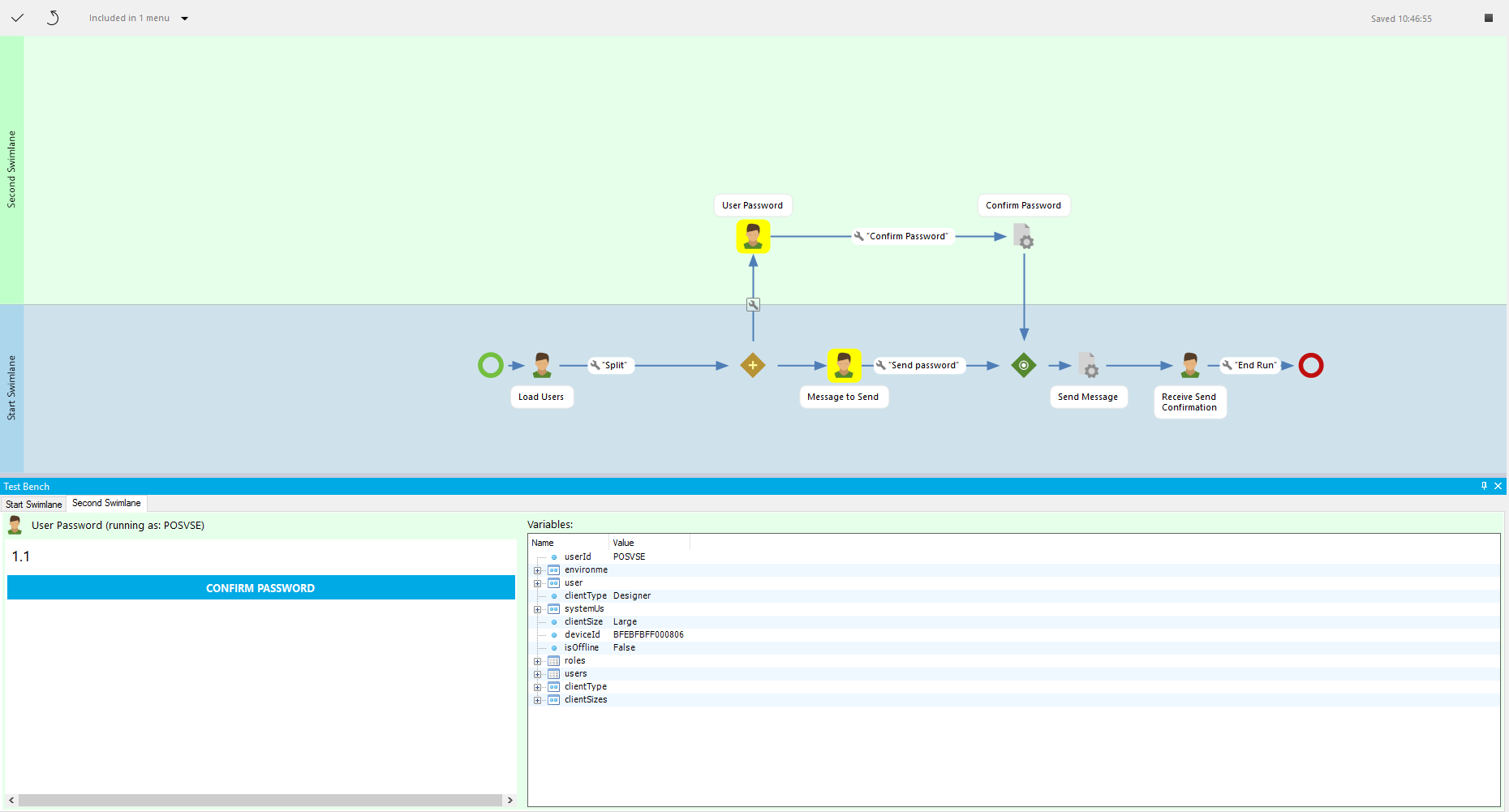

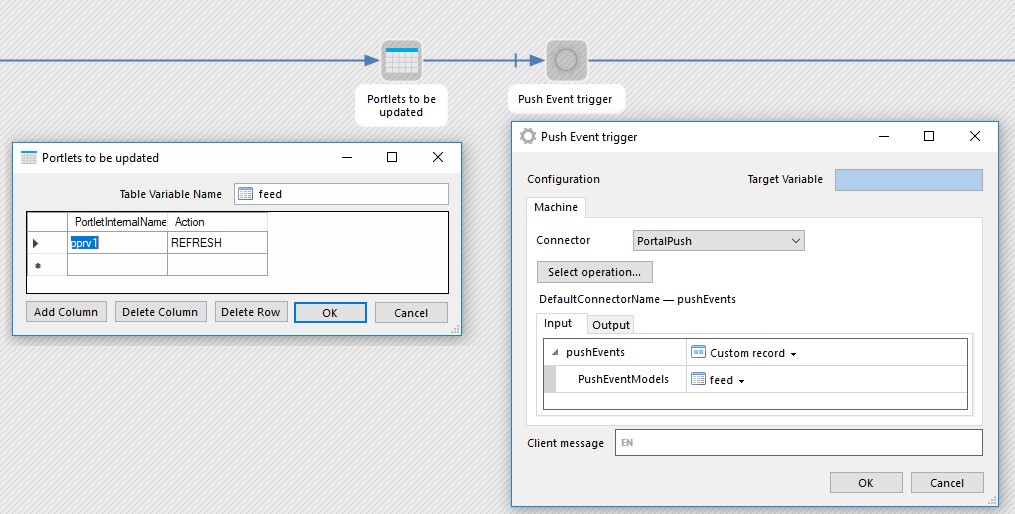

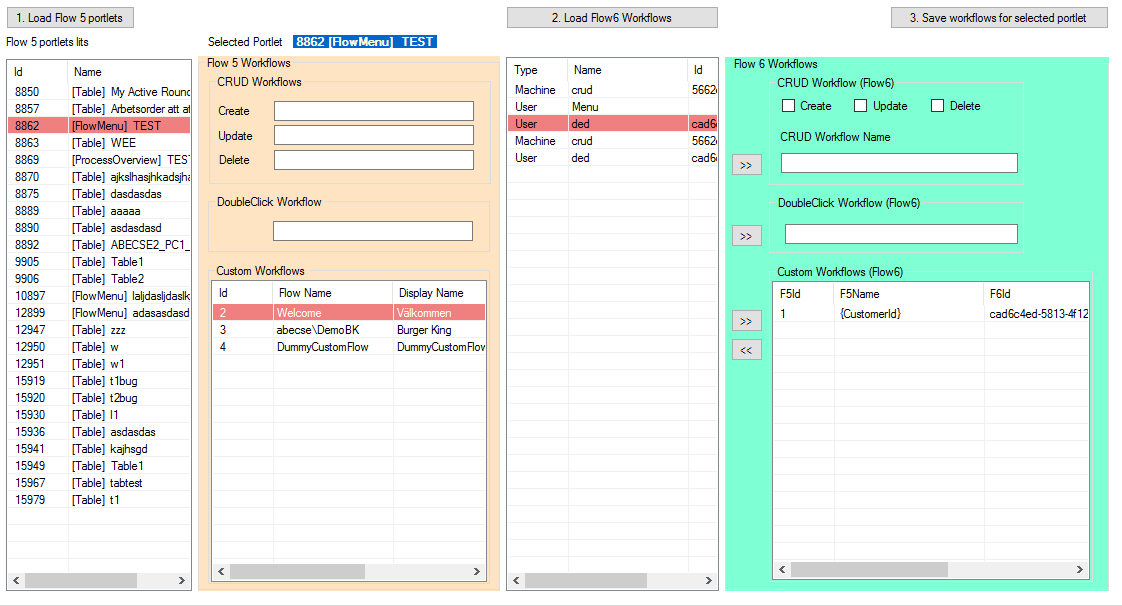

Create a workflow in the package.

Only the connectors defined in the package settings can be selected in the workflow.

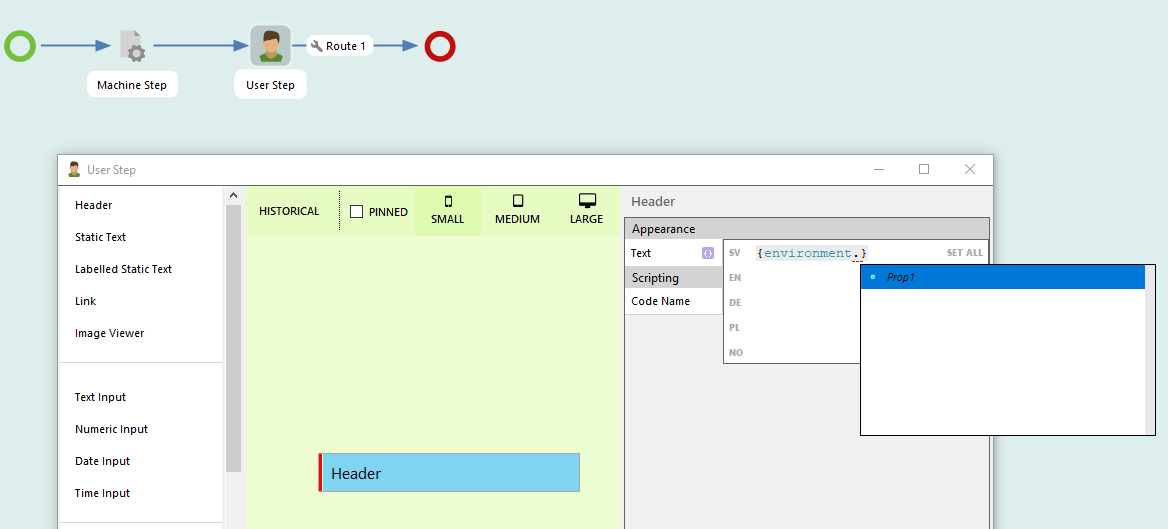

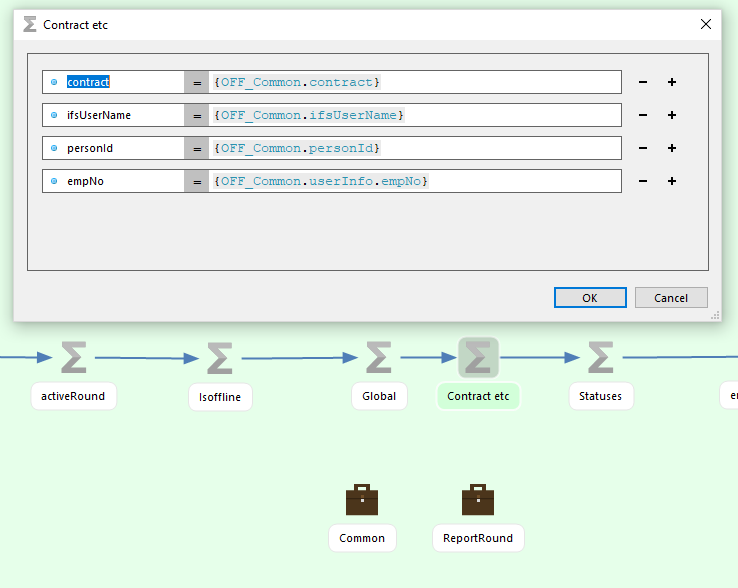

The package properties can be used in the workflow.

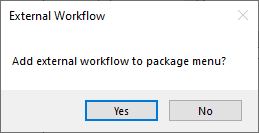

In the package menu, it is possible to add workflows that are not included in the package. If this is done, the user gets a warning message, and these external workflows are not included when the package is exported. Warning message:

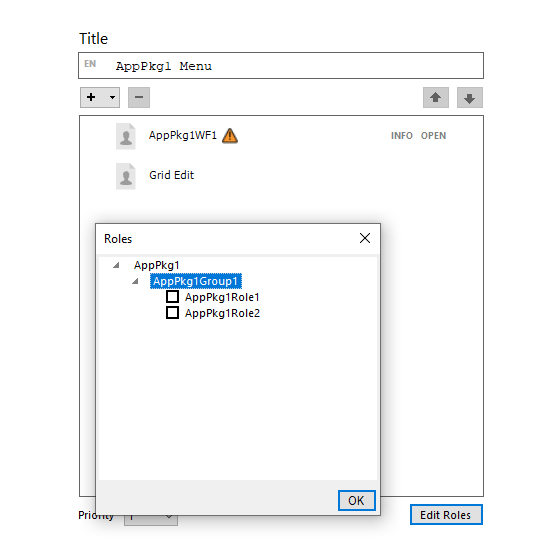

Add roles to the menu. Only roles defined in the package settings can be selected.

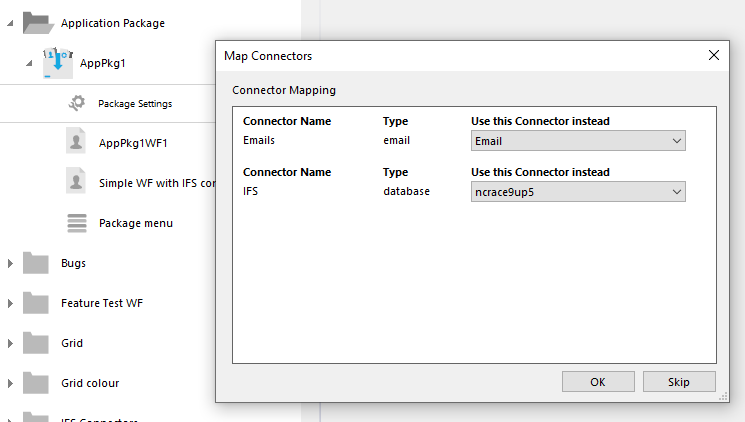

Drag and drop an existing workflow into the package. You are then asked to map its connectors.

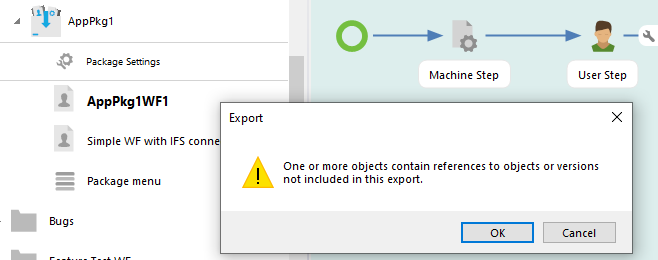

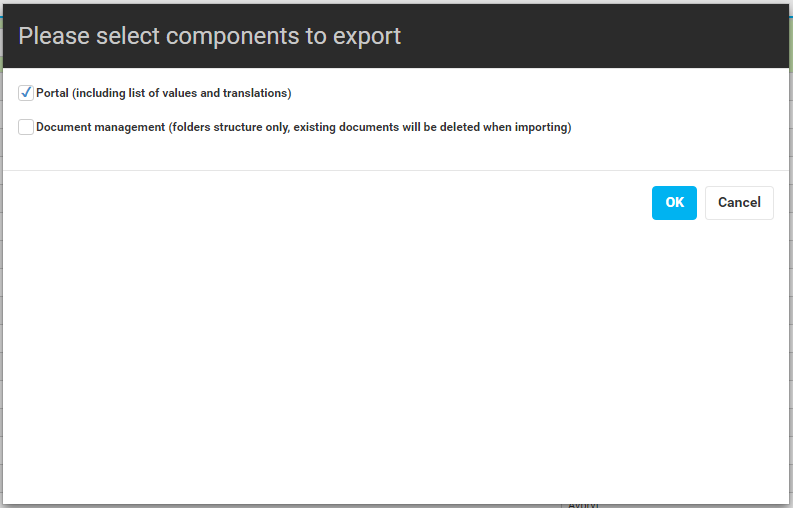

Export warning when the menu contains a workflow that is not in the package.

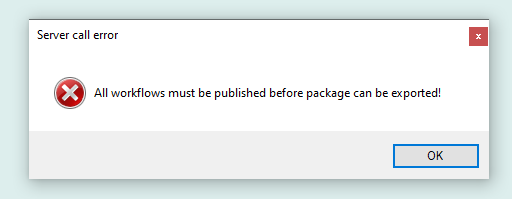

Exporting when workflows are not published.

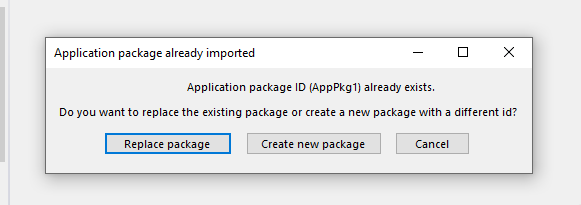

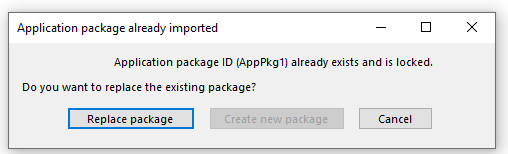

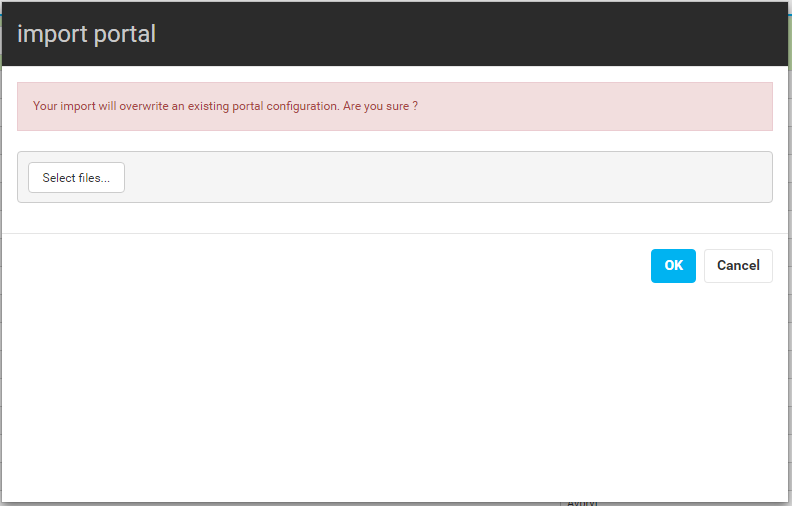

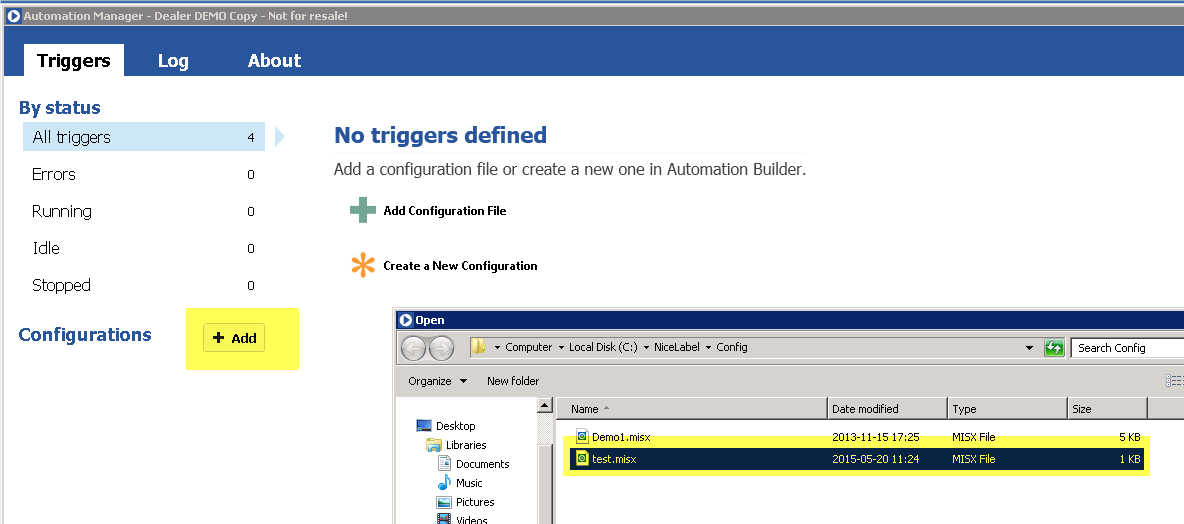

Importing an existing package.

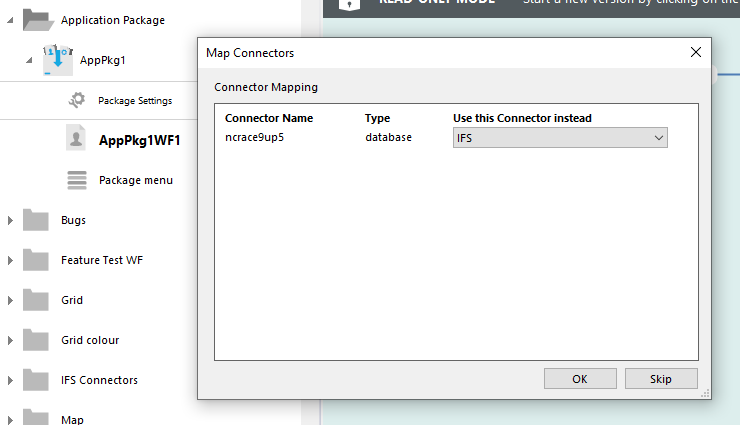

Connector mapping when a new package is created on import.

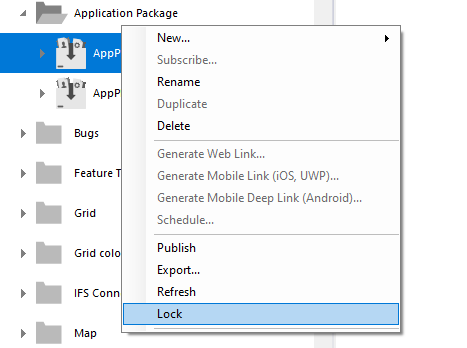

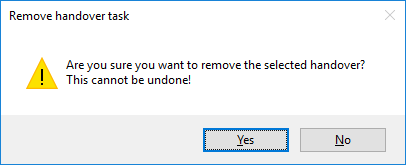

Lock package.

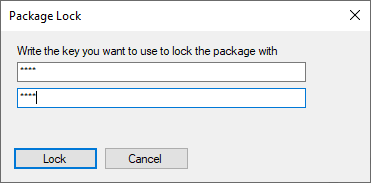

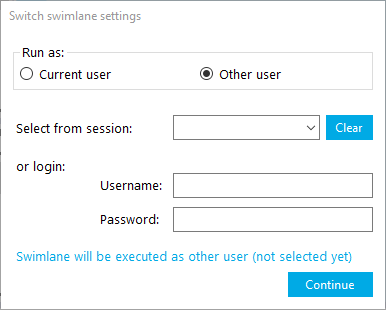

Enter and confirm password.

Package locked.

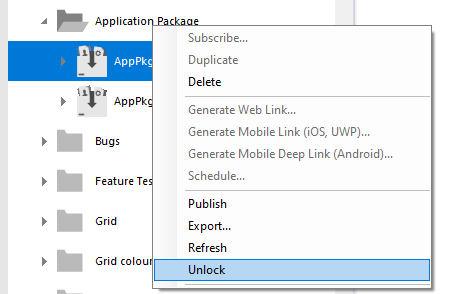

Unlock package

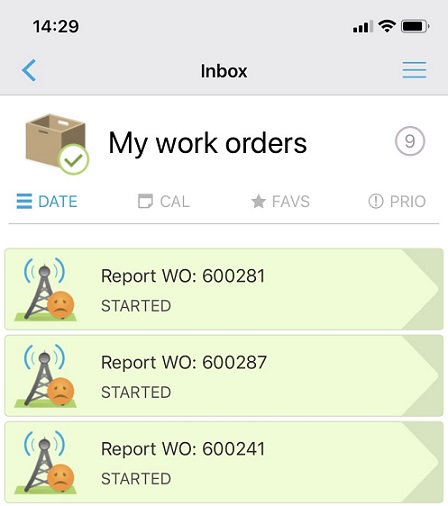

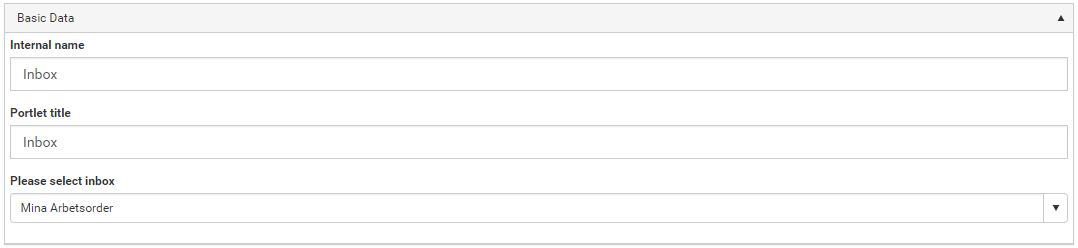

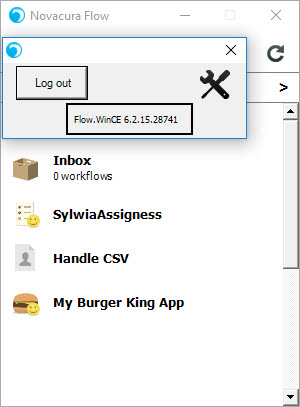

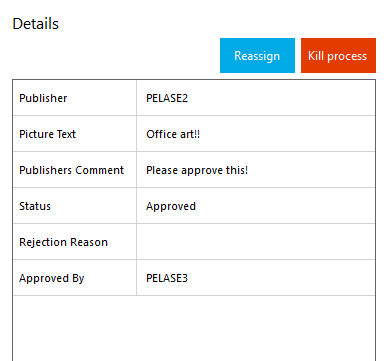

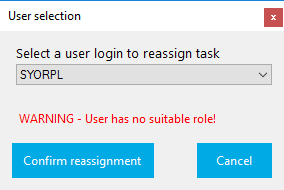

Inbox

There are two types of items that can be added to an inbox in a menu: Seed Item and Handover Item.

Seed Item

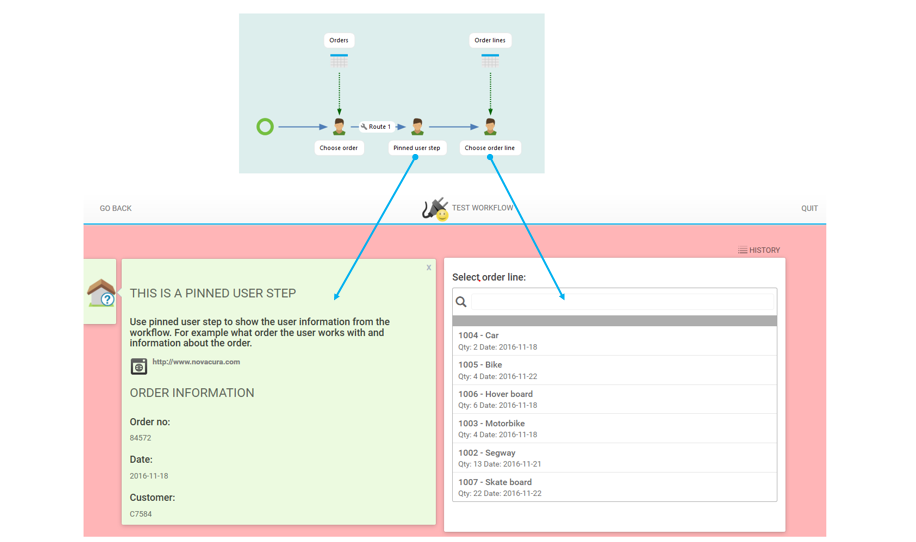

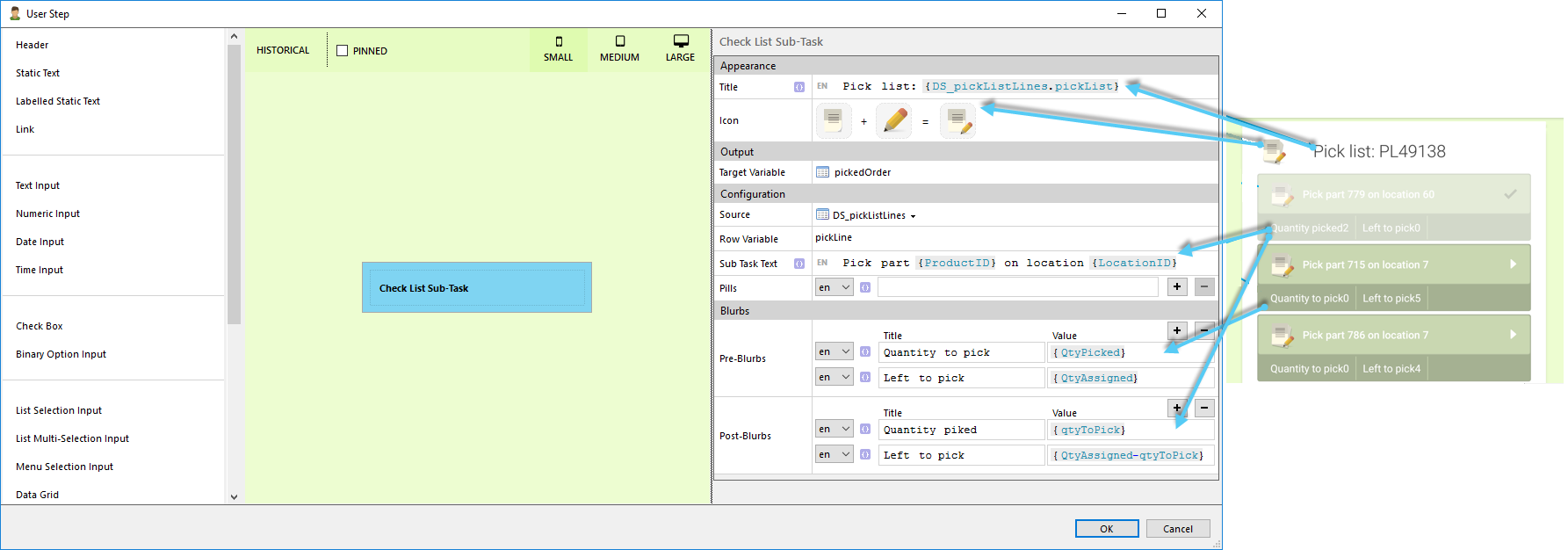

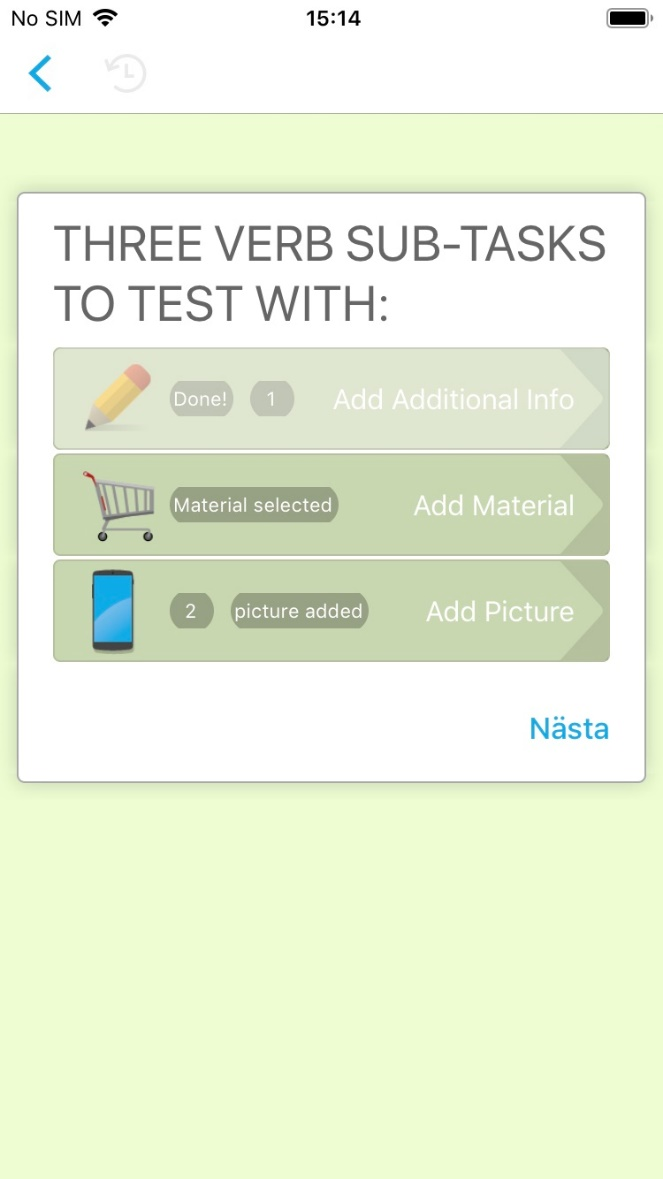

How to configure a seed item in a workflow:

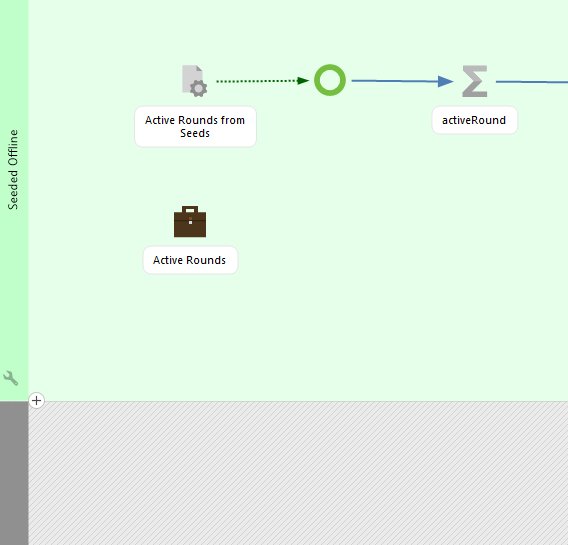

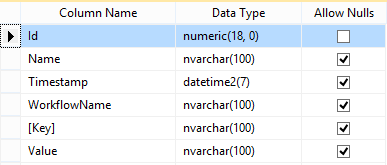

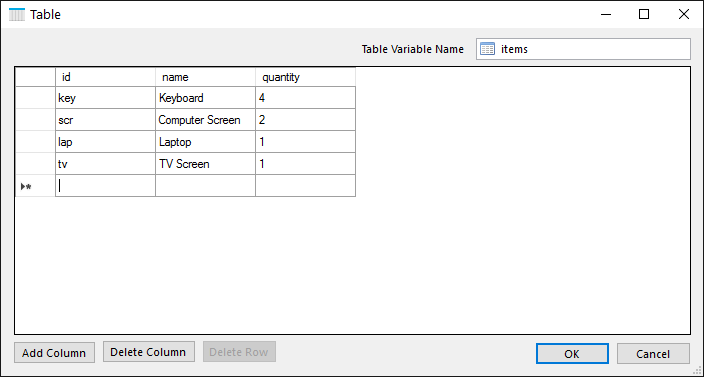

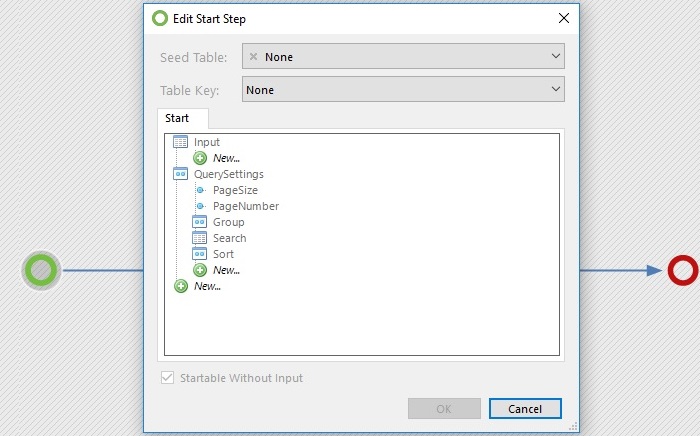

Connect a table or machine step with a data arrow to the start step.

Example of a machine step connected to the start step:

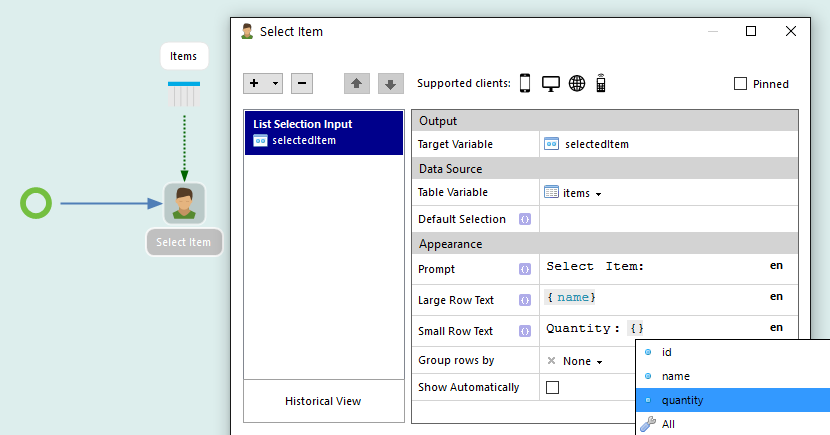

Click on the start step and configure:

- Seed table: Choose a table

- Table key: Choose a column (from the data source)

How to configure seed item in an inbox:

Click "+", choose Seed Item, and then select your workflow in the server content panel.

Step to select a seed item for the inbox.

Example of how a seed item is set up in the inbox:

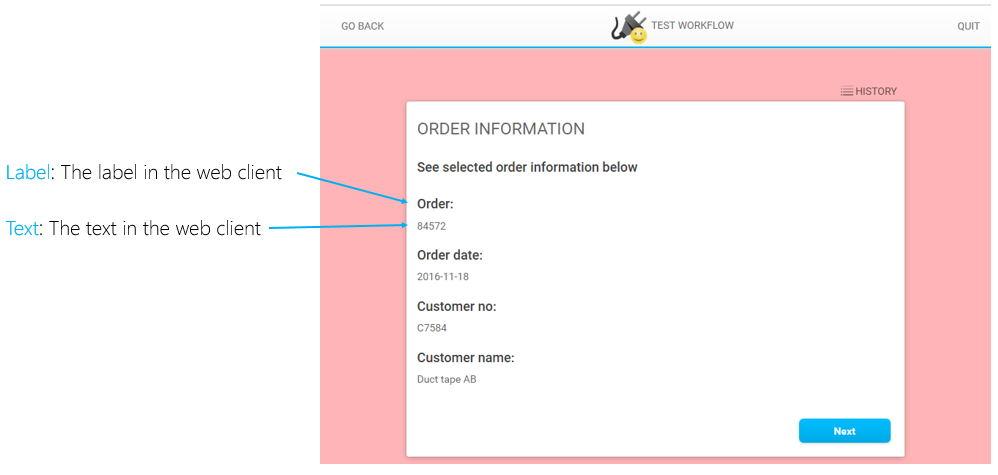

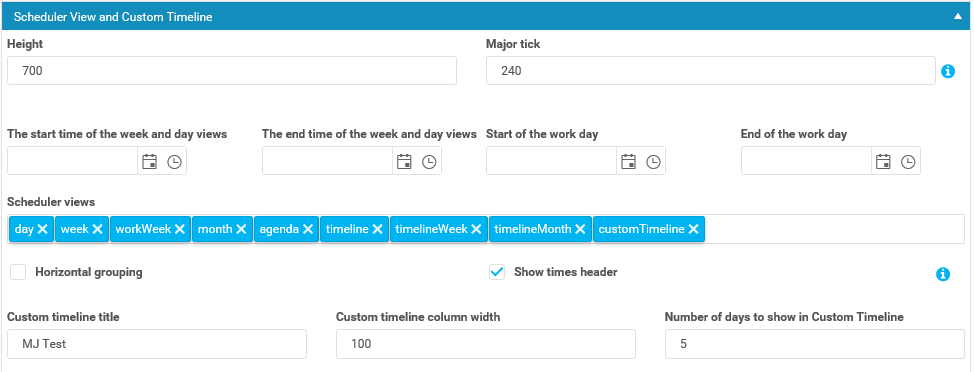

How to configure inboxes:

- Title: The title displayed on the inbox.

- Description: The description displayed under the title on the inbox.

- Item Title: The title displayed on each inbox item after clicking on the inbox.

- Item Description: The description displayed under the item title after clicking on the inbox.

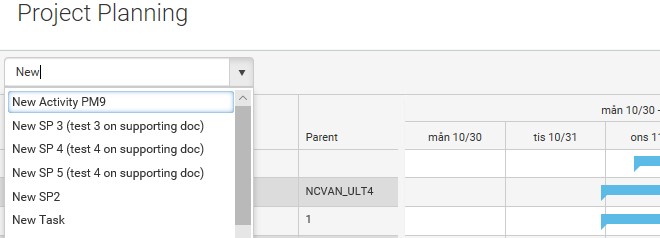

- Filter: Displays only the items that match the entered filter.

- Calendar: Displays items on a specific date in the calendar tab of the inbox.

- Favorite: Displays items under the favorite tab in the inbox.

- Priority: Displays items under the priority tab in the inbox.

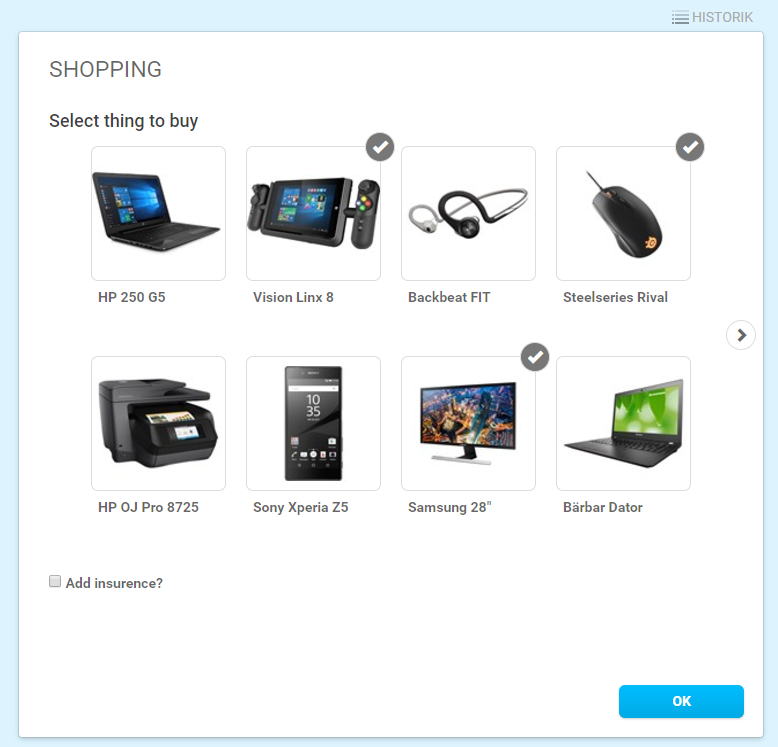

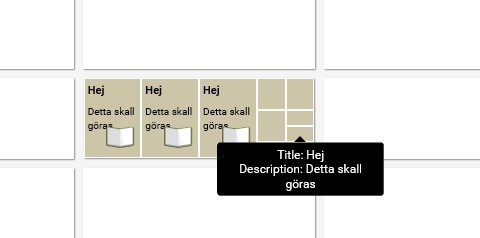

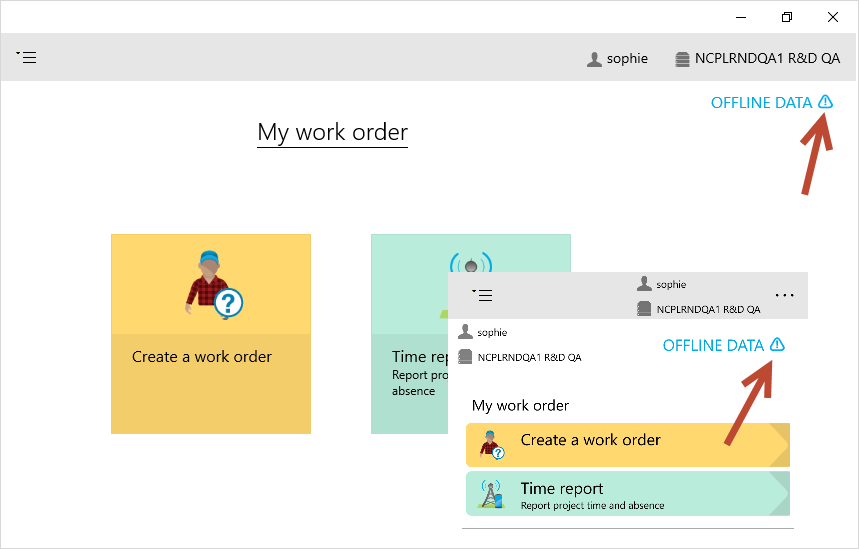

Example of how an inbox with seed items can look in the Universal Windows Client

Handover Item

How to configure handover item in an inbox:

Click "+", choose Handover Item, and then select your workflow in the server content panel.

Step to select a handover item for the inbox.

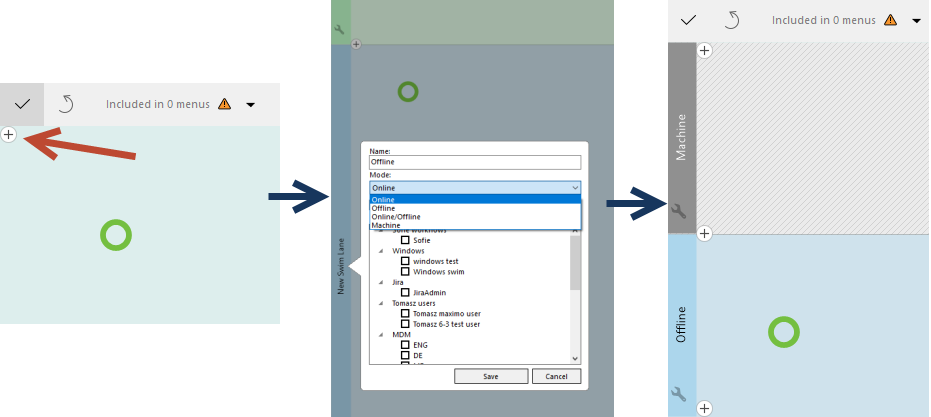

Menu

One or more menus need to be configured to make workflows available in the clients. A menu can contain three types of content: Category, Workflow Item and Inbox.

- Category: A category groups workflows and menus in the clients.

- Workflow Item: Choose which workflows should be available in the menu.

- Inbox: Add and configure an inbox to make seeded and handed-over workflows available to the user. Read more about how to configure an inbox in the Inbox section.

Use Edit Roles to assign the menu to the roles it should be available to.

Menu example

Generate Link

Generate Link helps the user construct a link that executes a workflow. It is supported for both user workflows and machine workflows, and is located in the context menu of the server content panel.

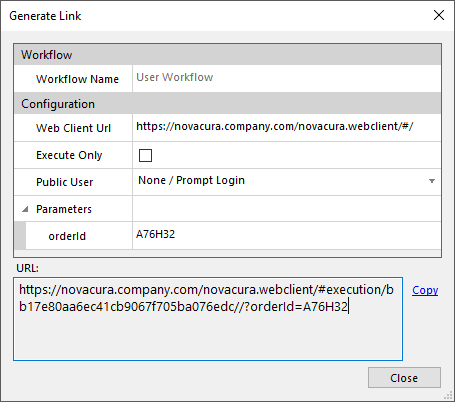

User Workflows

User workflow links point to the web client and provide a direct link to a workflow execution.

Configuration example

- Web Client Url: the URL of the web client.

- Execute Only: locks the user to the execution view and prevents the user from quitting the workflow or returning to a menu after execution.

- Public User: configures a public user to run the execution. When a public user is selected, no credential prompt is displayed.

- Parameters: shows the input variables configured in the start event of the workflow. Only simple variables can be used in user workflows.

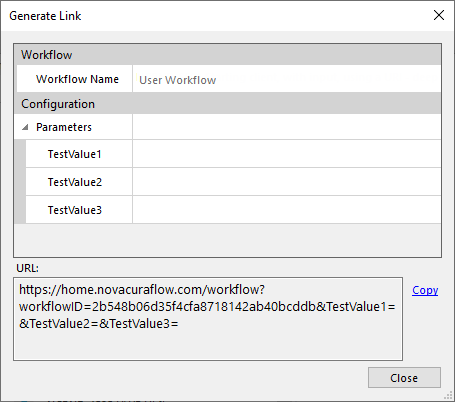

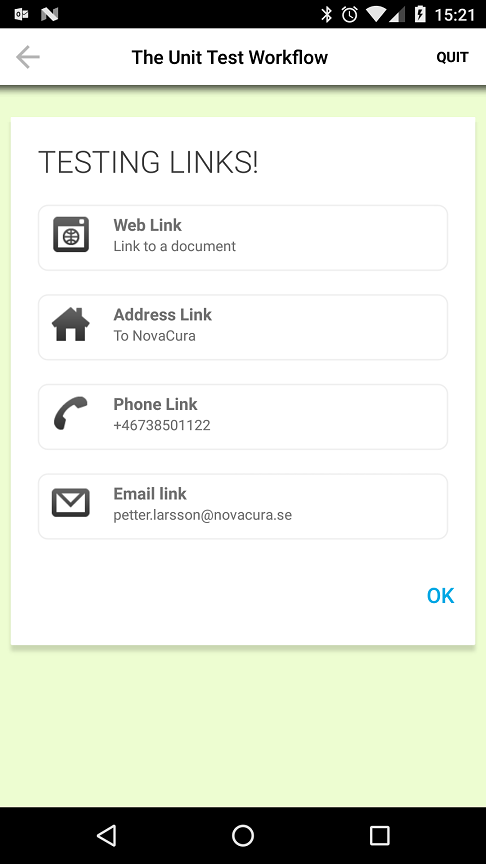

Mobile Link

Mobile links point to the mobile client and provide a direct link to a workflow execution.

Configuration example

- Parameters: shows the input variables configured in the start event of the workflow. Only simple variables can be used in user workflows.

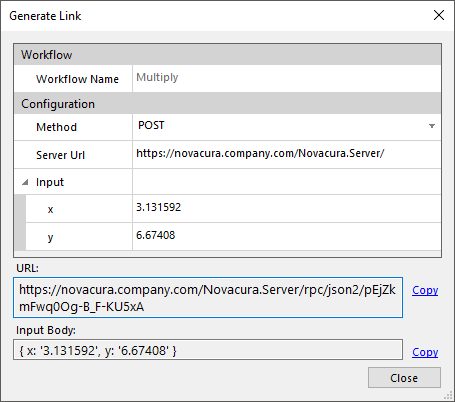

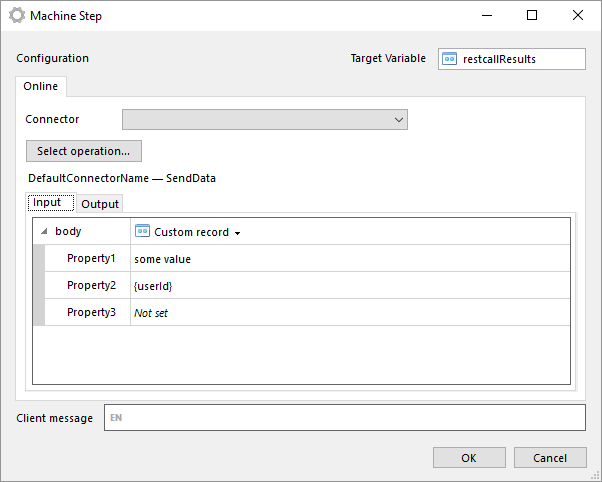

Machine Workflows

Machine workflows can be executed through HTTP requests. These requests are made directly to the Flow Server. The workflow is executed by a machine user, and authentication uses basic authentication.

Configuration example

- Method: the supported methods are POST and GET. POST supports complex structures as input; GET supports only simple variables, which are passed as query string values.

- Server URL: the URL of the Flow Server. It must be accessible from where the request is made.

- Input: shows all simple variables and provides a helper for constructing the input parameters. In POST requests the input is sent as JSON in the request body; in GET requests the input variables are sent as part of the URL.
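A minimal Python sketch of such a request, using only the standard library. It assumes that the URL produced by Generate Link is used unchanged and that input variable names map directly to query string keys (GET) or JSON properties (POST); the URL, credentials and variable names below are hypothetical. The function only builds the request and does not send it.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_machine_workflow_request(url, user, password, method, inputs):
    """Build (but do not send) an HTTP request that runs a machine workflow.

    url: the link produced by Generate Link (assumed to be used unchanged)
    inputs: dict mapping workflow input variable names to values
    """
    # Basic authentication: base64("user:password") in the Authorization header.
    credentials = f"{user}:{password}".encode("utf-8")
    headers = {"Authorization": "Basic " + base64.b64encode(credentials).decode("ascii")}

    if method == "GET":
        # GET accepts only simple variables, passed as query string values.
        for name, value in inputs.items():
            if isinstance(value, (dict, list)):
                raise ValueError(f"GET supports only simple variables, not {name!r}")
        query = urllib.parse.urlencode(inputs)
        separator = "&" if "?" in url else "?"
        full_url = (url + separator + query) if query else url
        return urllib.request.Request(full_url, headers=headers, method="GET")

    if method == "POST":
        # POST sends the input as a JSON document in the body,
        # so records and tables (dicts and lists) are allowed.
        headers["Content-Type"] = "application/json"
        body = json.dumps(inputs).encode("utf-8")
        return urllib.request.Request(url, data=body, headers=headers, method="POST")

    raise ValueError("Supported methods are GET and POST")


# Hypothetical link, machine user and input variables.
request = build_machine_workflow_request(
    "https://flow.example.com/hypothetical-link", "machine_user", "secret",
    "POST", {"OrderNo": "1001", "Lines": [{"Item": "A1", "Qty": 2}]})
# urllib.request.urlopen(request) would send it to the Flow Server.
```

Building the request separately from sending it keeps the GET/POST rules easy to check before any call reaches the server.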

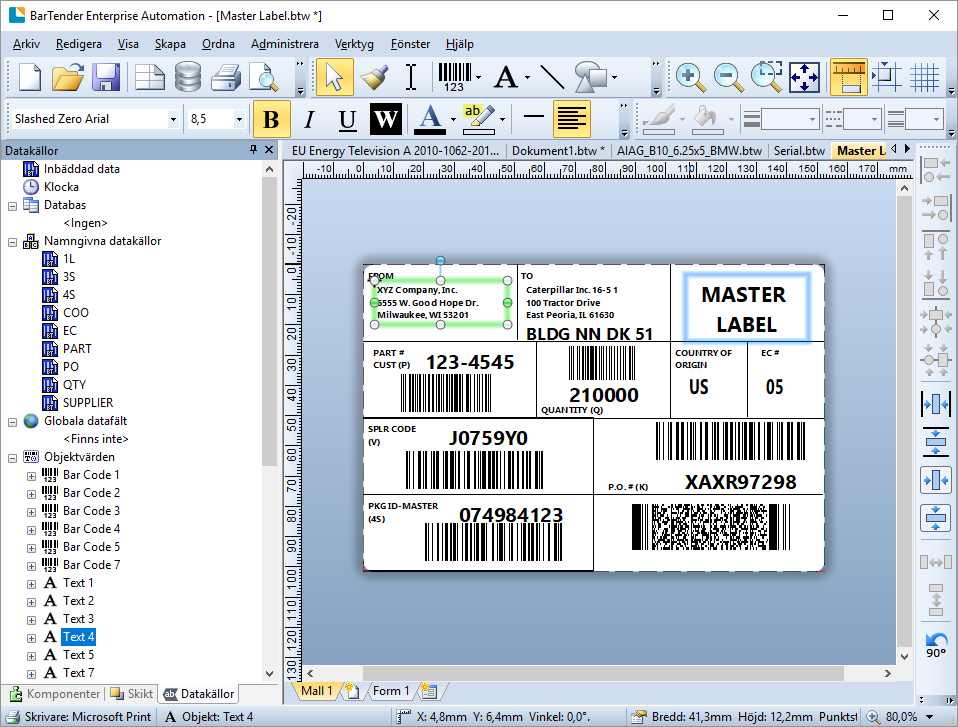

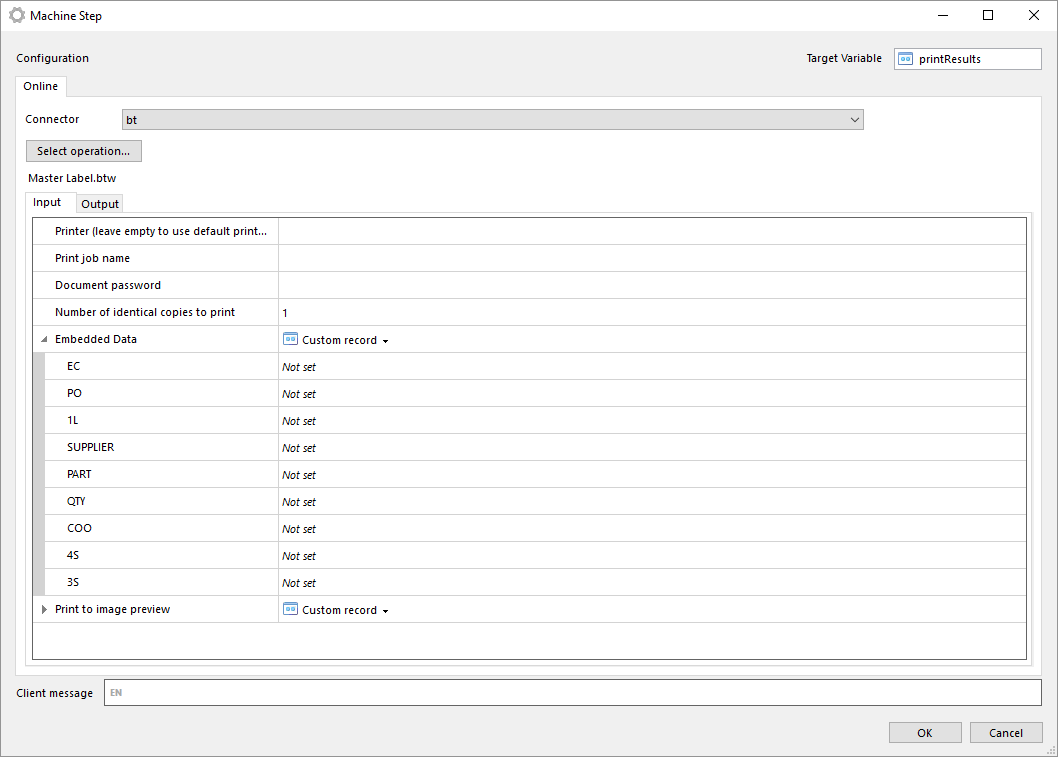

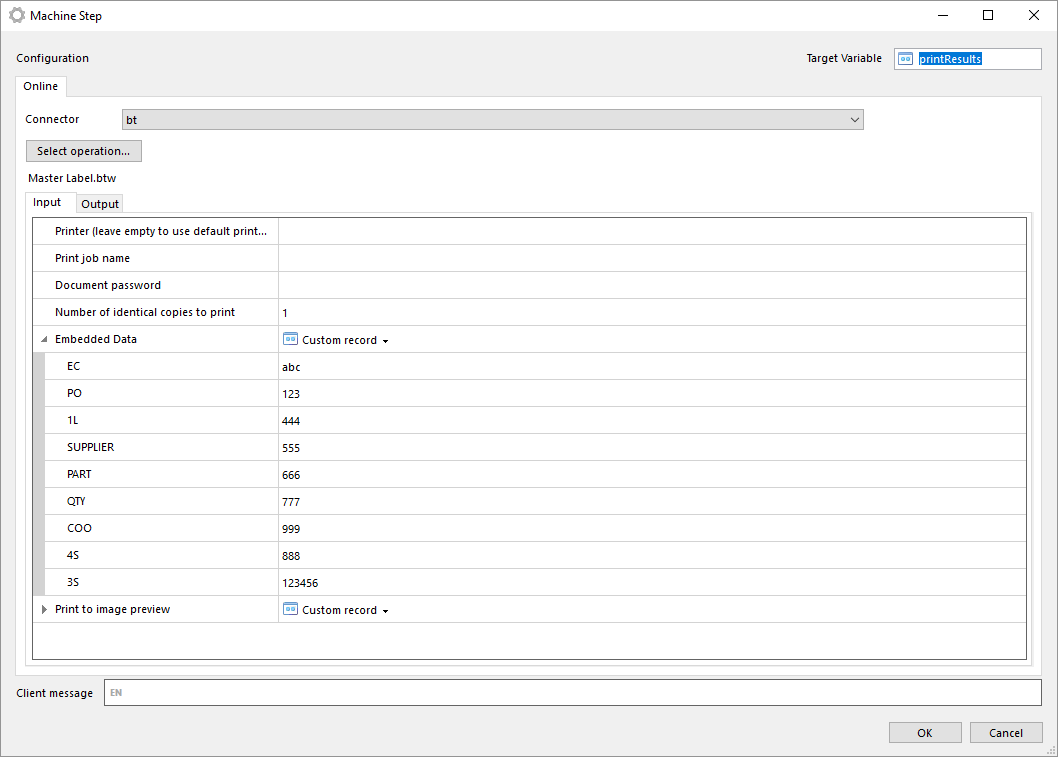

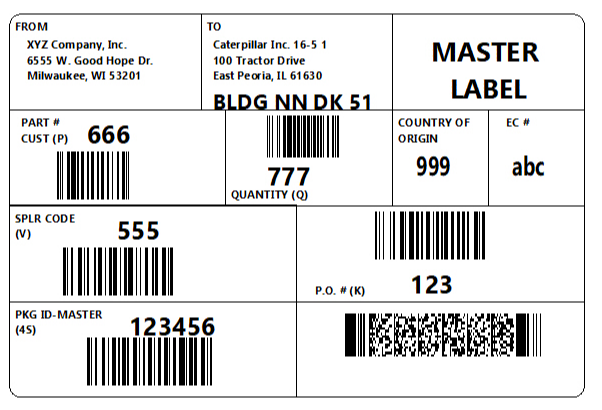

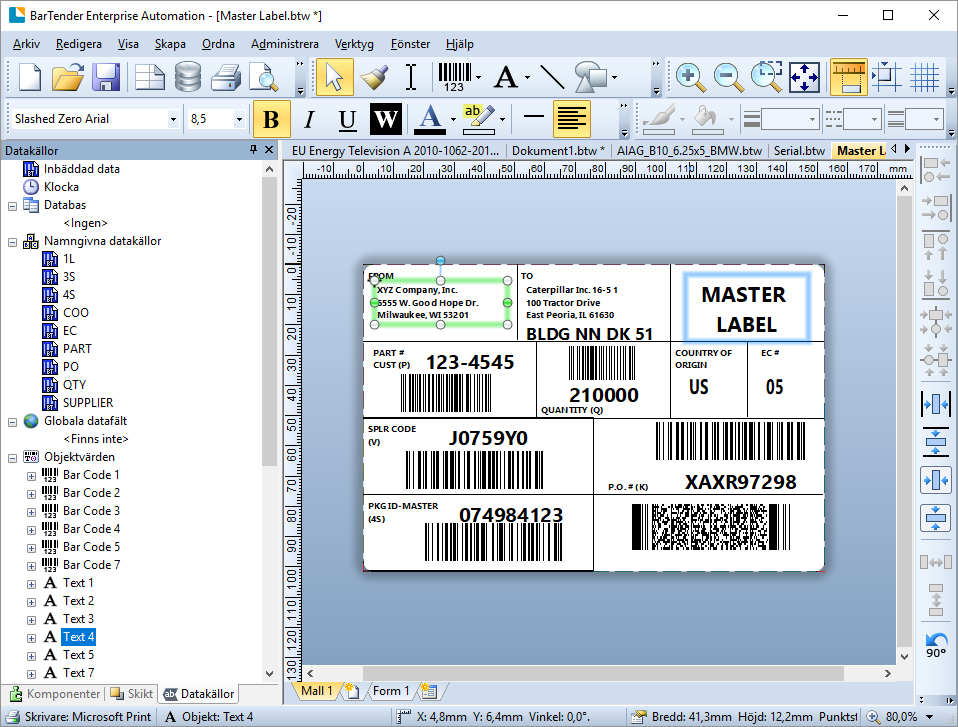

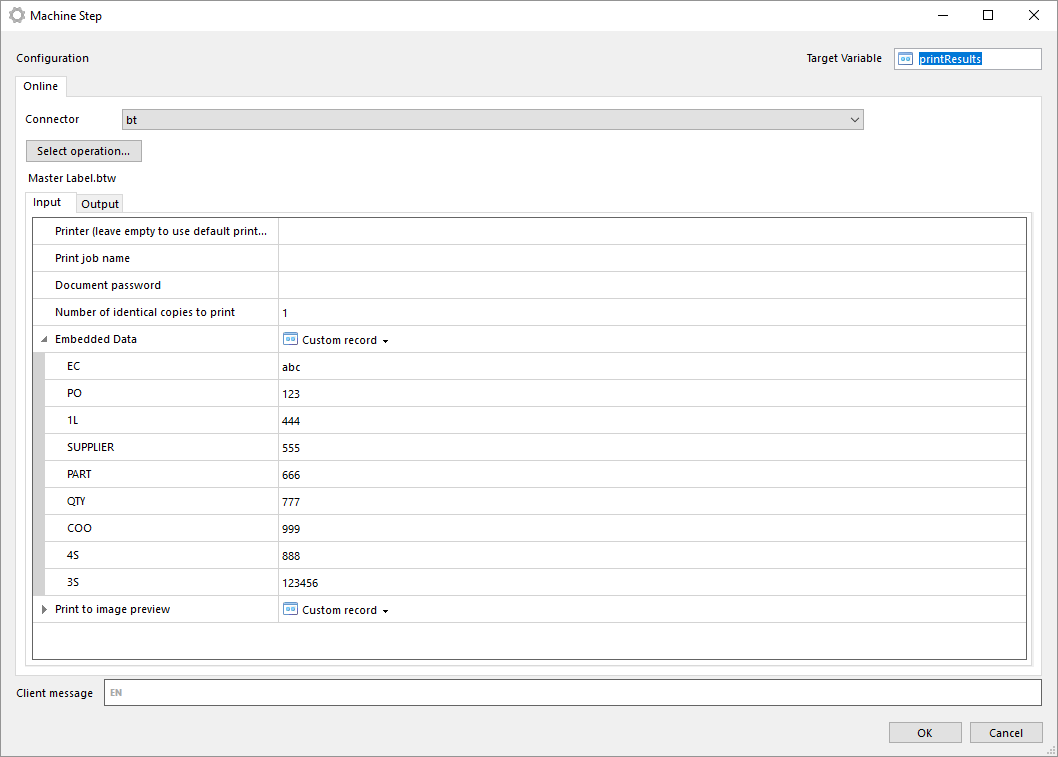

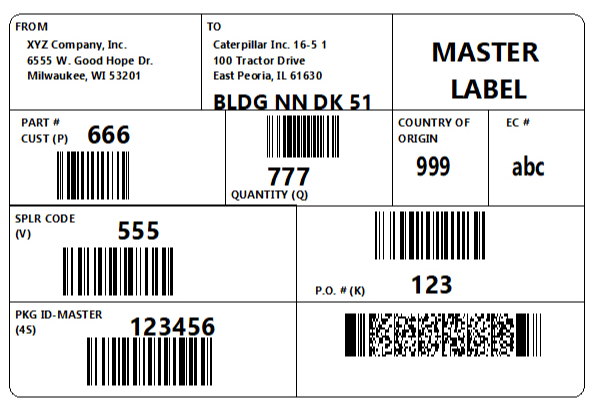

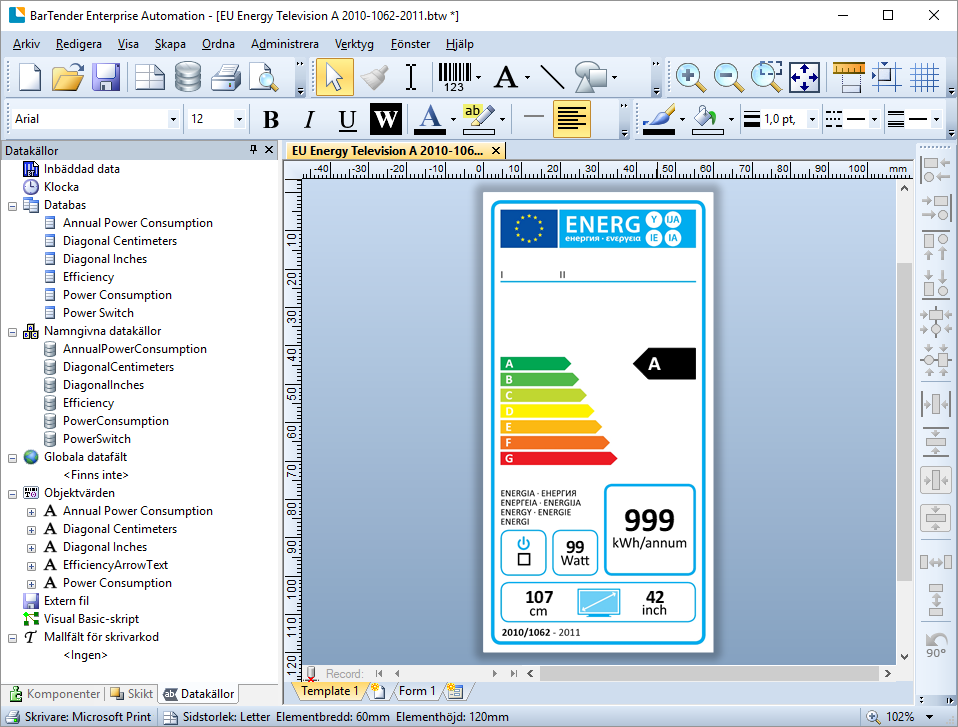

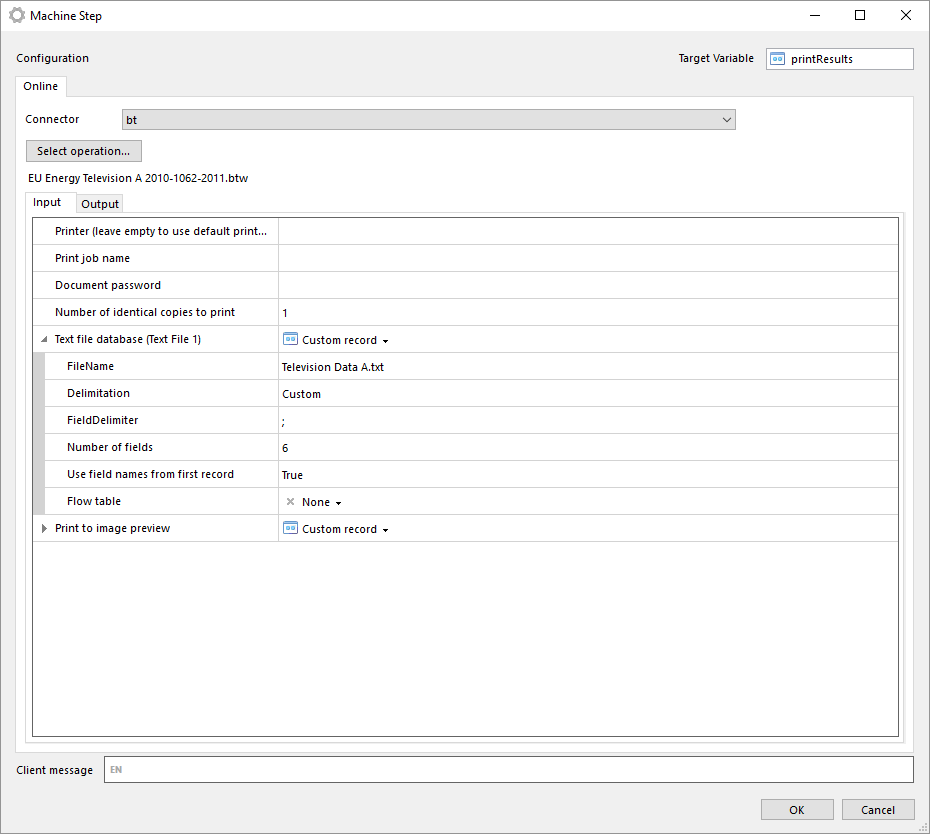

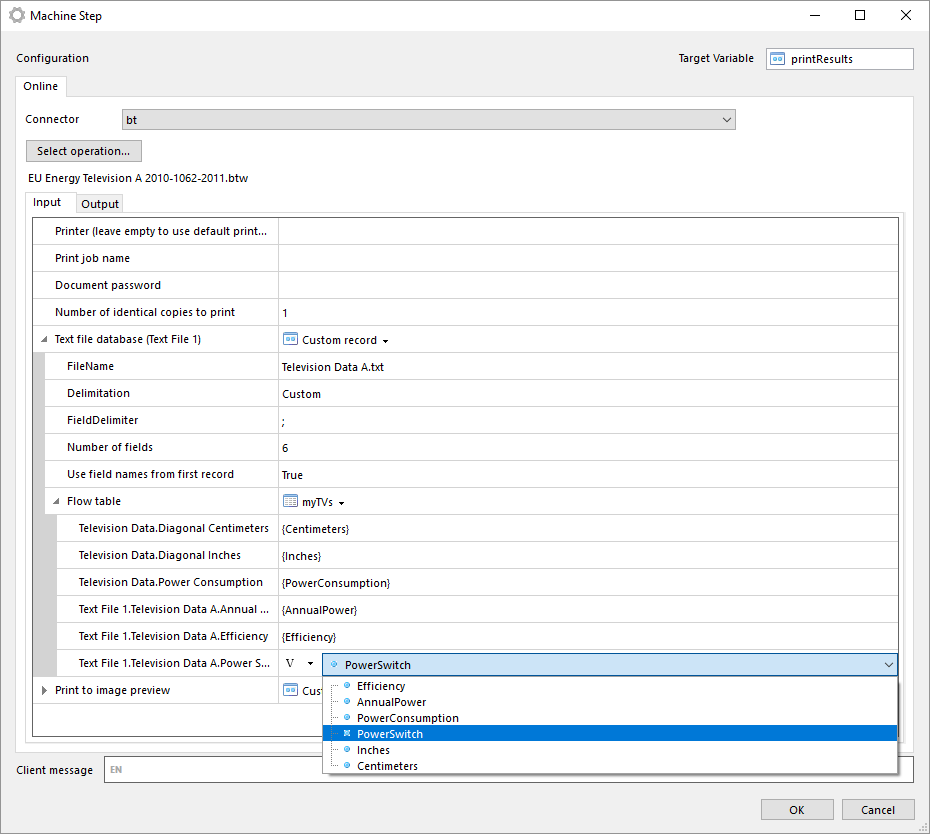

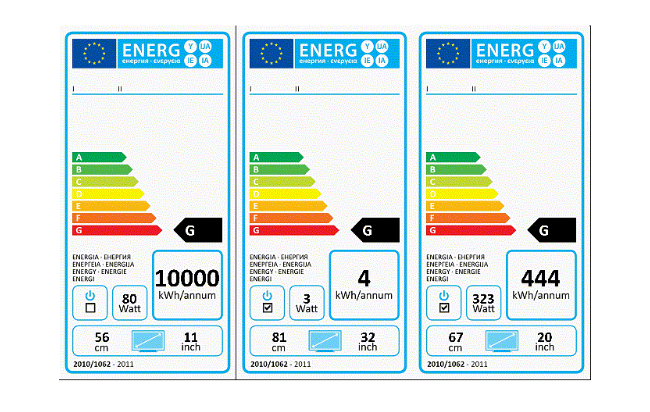

Manually select BarTender document

With this operation you can manually select a BarTender document from your local file system.

The file is stored in the machine step and is not synchronized in any way with the file in your local file system. Once the file has been selected, you can export it by clicking Export file....

Note that if you select another operation in the machine step, the document is lost and must be selected from the file system again.

The same inputs and outputs as for 'Print' apply; see Print for more information.

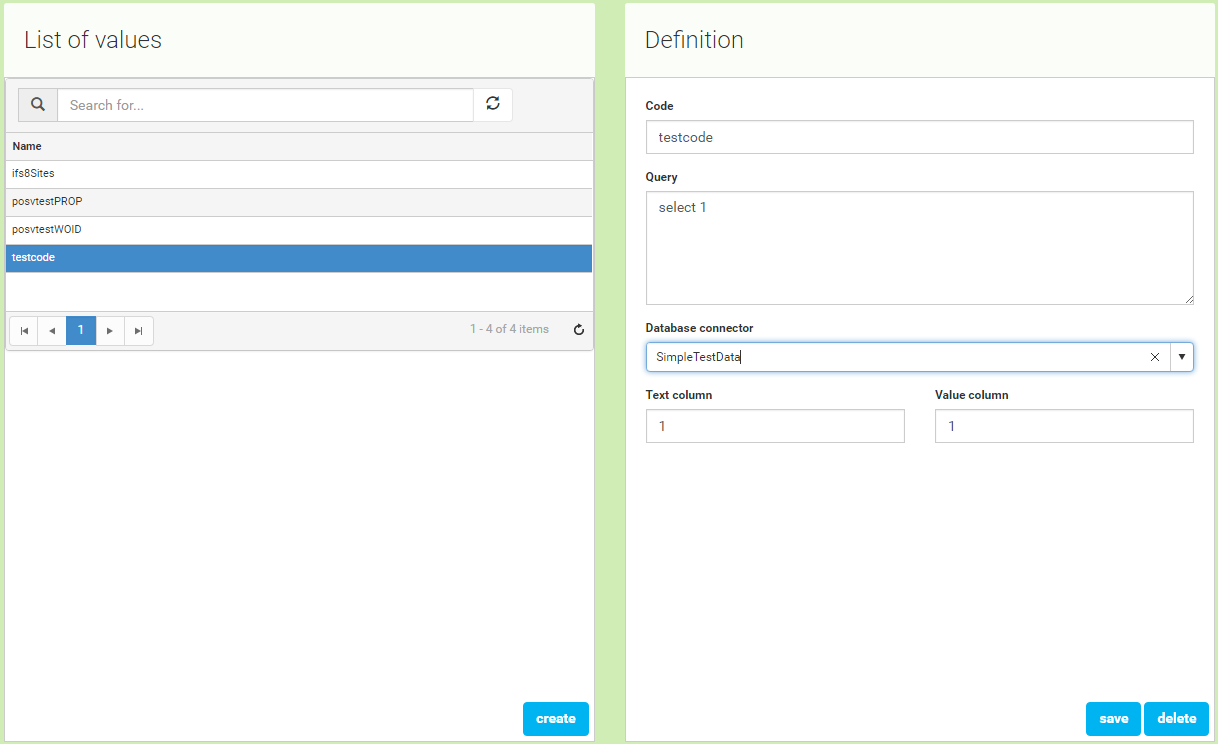

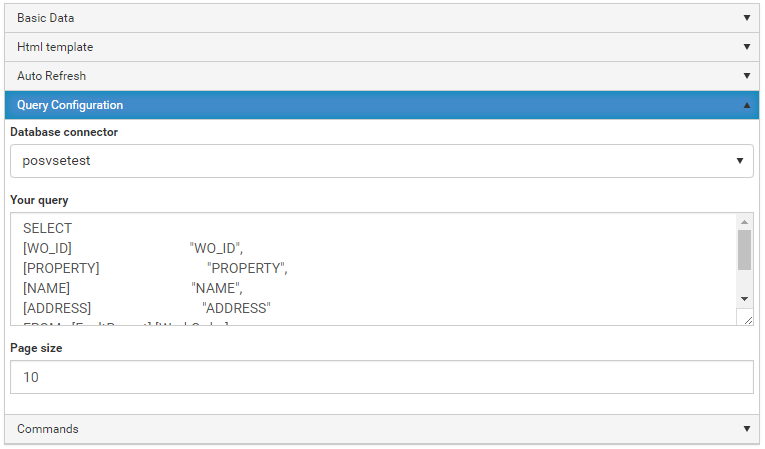

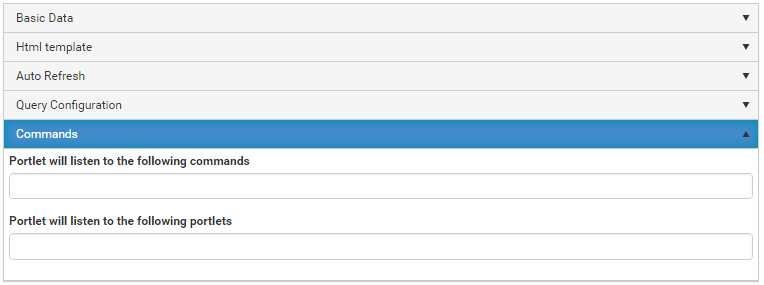

Database Connector

TODO

IFS Applications Connector

TODO

M3 Connector

TODO

Infor M3 REST Connector

The M3 REST Connector is used to execute transactions in M3 via the M3-API-REST bulk API.

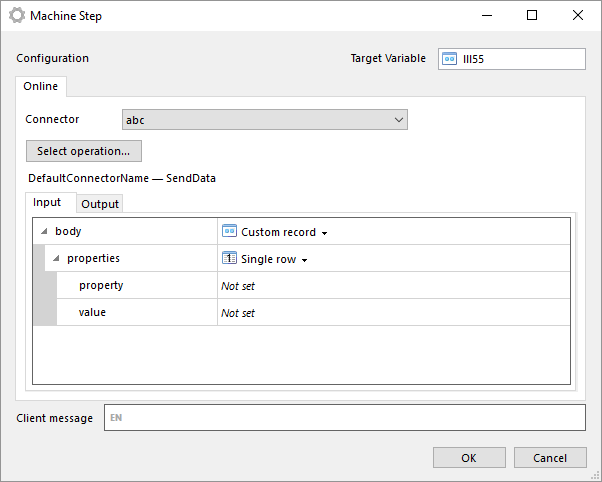

Press Select operation to select which transaction to execute.

Input

The input depends on the transaction to execute. One common parameter is always available, though: readtimeoutmillis, which specifies how long to wait for a response from the job.

Another feature common to all transactions is the option to use an iterator. If you select an iterator, the transaction is executed once for each row in the table specified as the iterator. Using an iterator changes the output of the cogwheel to a table with the same number of rows as the iterator table; each row contains the result of executing the transaction for the corresponding iterator row. You can map columns of the iterator to parameters of the transaction: select an iterator, then for a parameter select I Iterator in the parameter's dropdown menu and choose the suitable column of the iterator table.
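Conceptually, the iterator behaves like the loop below. This is a Python sketch of the documented behavior, not the connector's implementation; execute_transaction is a hypothetical stand-in for the REST call, and the column and parameter names are examples only.

```python
def run_with_iterator(iterator_rows, column_mapping, fixed_params, execute_transaction):
    """Run one transaction per iterator row and collect the results in order.

    iterator_rows: the iterator table, as a list of dicts
    column_mapping: {transaction parameter: iterator column}
    fixed_params: parameters with the same value for every row
    execute_transaction: hypothetical stand-in for the REST call, params -> result record
    """
    results = []
    for row in iterator_rows:
        params = dict(fixed_params)  # copy so rows do not affect each other
        for parameter, column in column_mapping.items():
            params[parameter] = row[column]
        results.append(execute_transaction(params))
    return results  # same number of rows as the iterator table


# Usage with a fake transaction that echoes the mapped parameter.
items = [{"ItemNo": "A100"}, {"ItemNo": "B200"}]
output = run_with_iterator(items, {"ITNO": "ItemNo"}, {"readtimeoutmillis": 30000},
                           lambda params: {"ITNO": params["ITNO"]})
# output == [{"ITNO": "A100"}, {"ITNO": "B200"}]
```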

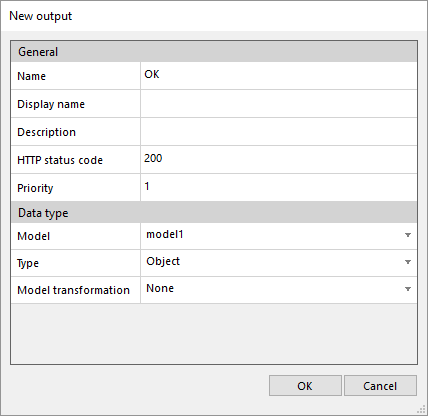

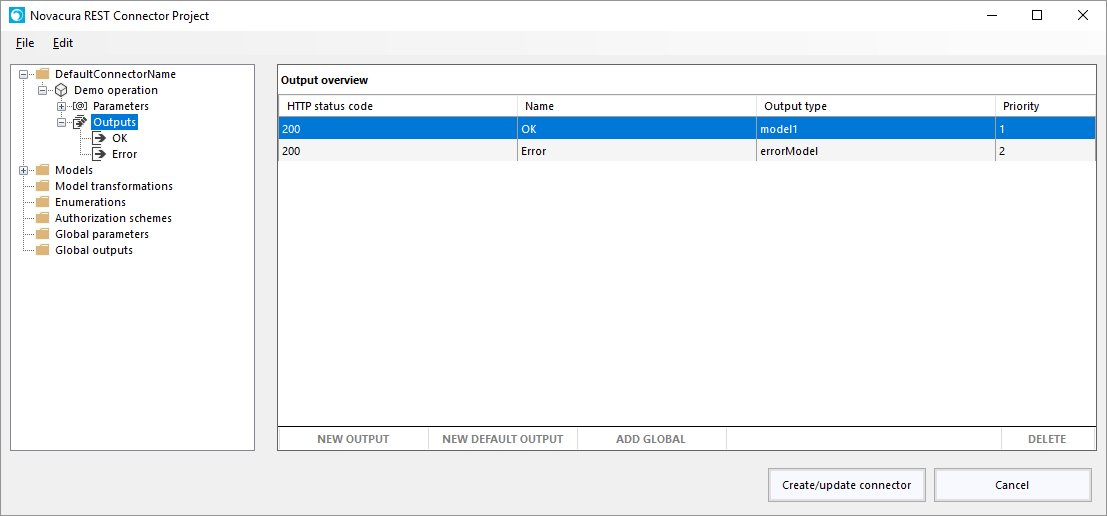

Output

Record (or table of records if iterator is used).

| Member | Description |

|---|---|

| HttpStatusCode | The status code returned from REST API. Typically 200 if request (not necessarily transaction) was successful. 401 if not authorized etc. |

| ReasonPhrase | HTTP ReasonPhrase, if any |

| AllHeaders | Simple variable containing all headers (with line feed between each header) returned by REST API. |

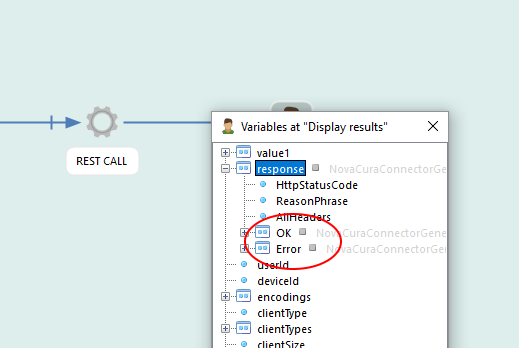

| OK | A record containing result of transaction if it was successfully executed. Will be empty if transaction failed. - Program - Simple variable containing the program executed - Transaction - Simple variable containing the transaction executed - Records - A table containing the records (if any) returned by transaction. The columns of the table will of course depend on the transaction, but you can always choose not the request particular columns by unchecking the Included checkbox. By doing that, it will not be requested from the REST API, which could boost performance. You can also specifiy a row limit on Records (default 100). |

| Error | A record containing error information if transaction failed for some reason. Will be empty if transaction was successfully executed. |

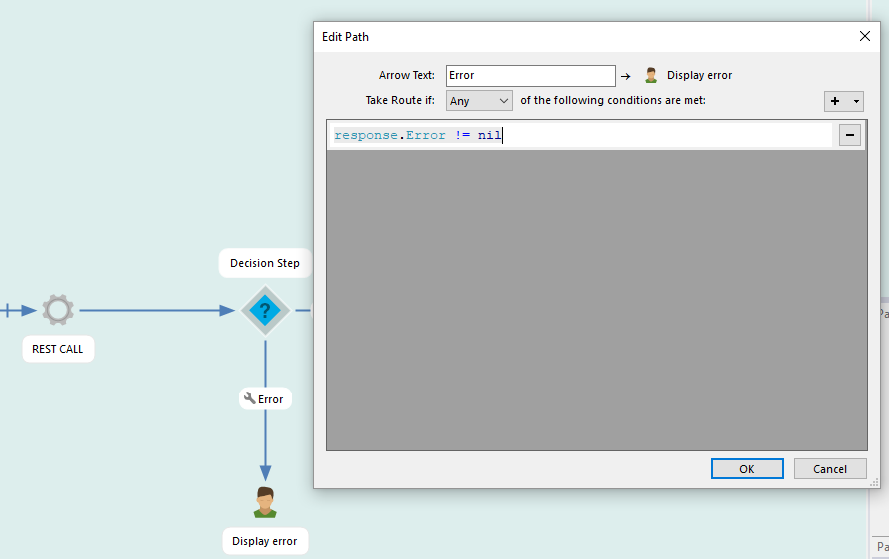

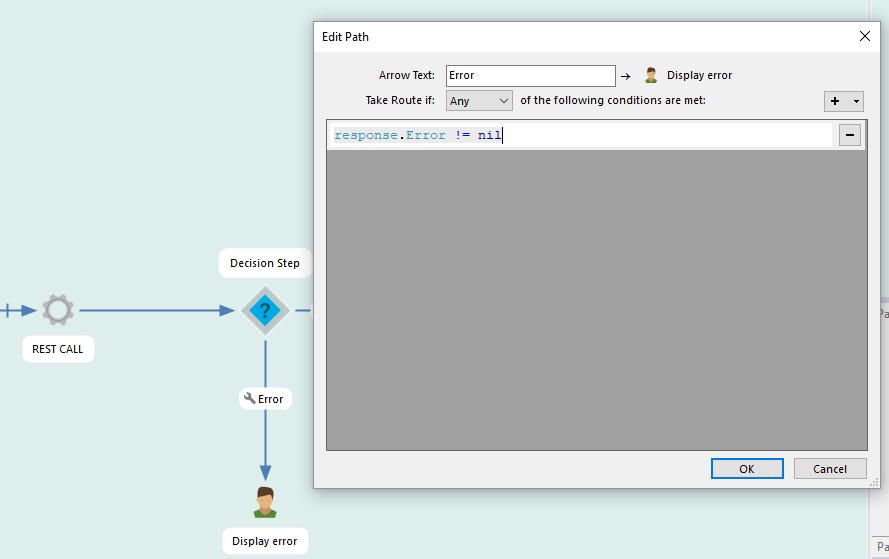

Note that if HttpStatusCode is 200, either OK or Error (never both) contains data. This can be used to take different paths in your workflow. Simply add a 'Decision Step' and add a script rule like transactionResults.Error != nil to check for errors.
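The same check, expressed as a Python sketch over result records shaped like the Output table. Modelling a record as a dict and an empty OK or Error member as None are assumptions; MMS200MI and the messages are sample data.

```python
def classify_result(result):
    """Classify one result record from the M3 REST connector.

    result: dict with HttpStatusCode, OK and Error members; an empty member
            is assumed to be None or an empty dict.
    """
    if result.get("HttpStatusCode") != 200:
        # The request itself was not successful (for example 401 when not authorized).
        return "http_error"
    if result.get("Error"):
        # With status 200, exactly one of OK and Error contains data.
        return "transaction_error"
    return "ok"


# With an iterator the output is a table, so each row is checked separately.
results = [
    {"HttpStatusCode": 200, "OK": {"Program": "MMS200MI"}, "Error": None},
    {"HttpStatusCode": 200, "OK": None, "Error": {"Message": "Item does not exist"}},
]
failed = [r for r in results if classify_result(r) != "ok"]
# failed contains only the second record
```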

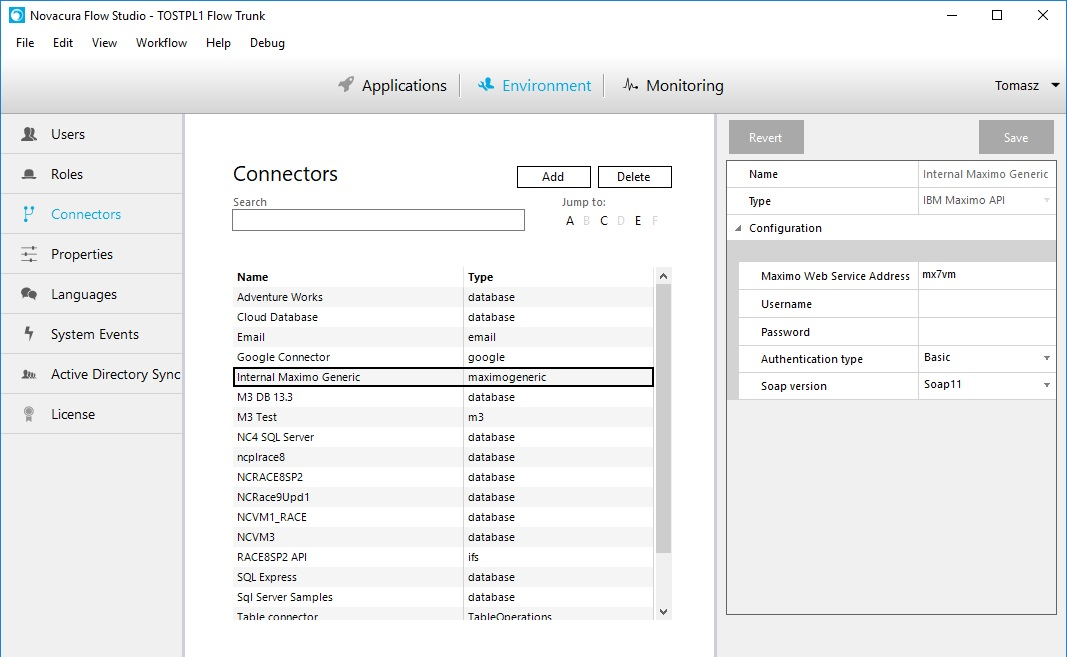

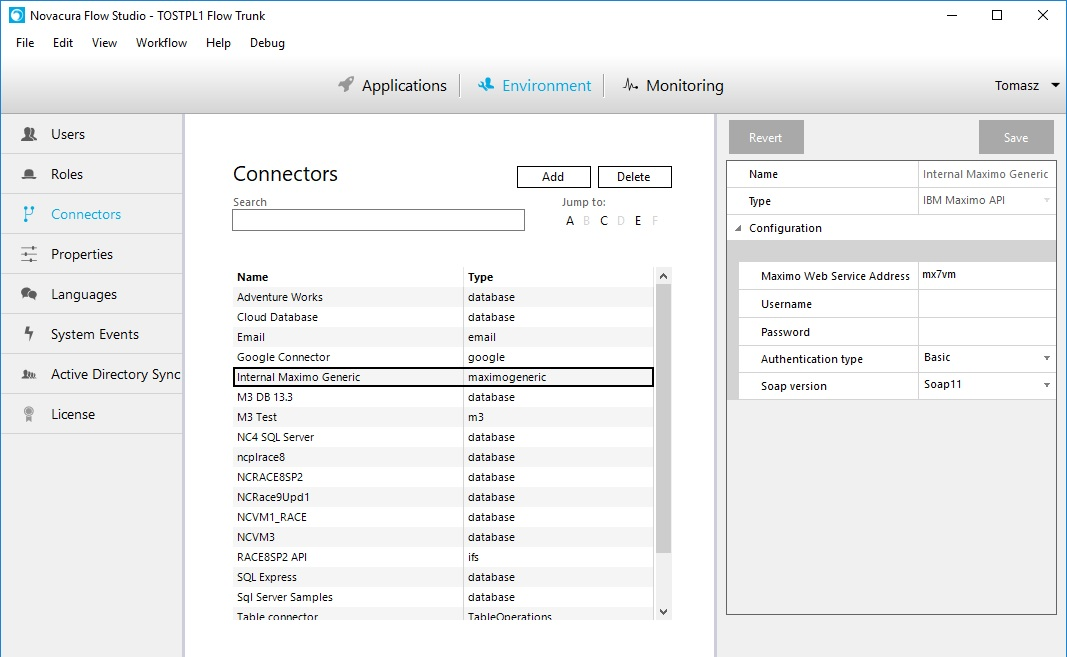

Maximo Generic Connector

Maximo Generic connector communicates with Maximo Web Services.

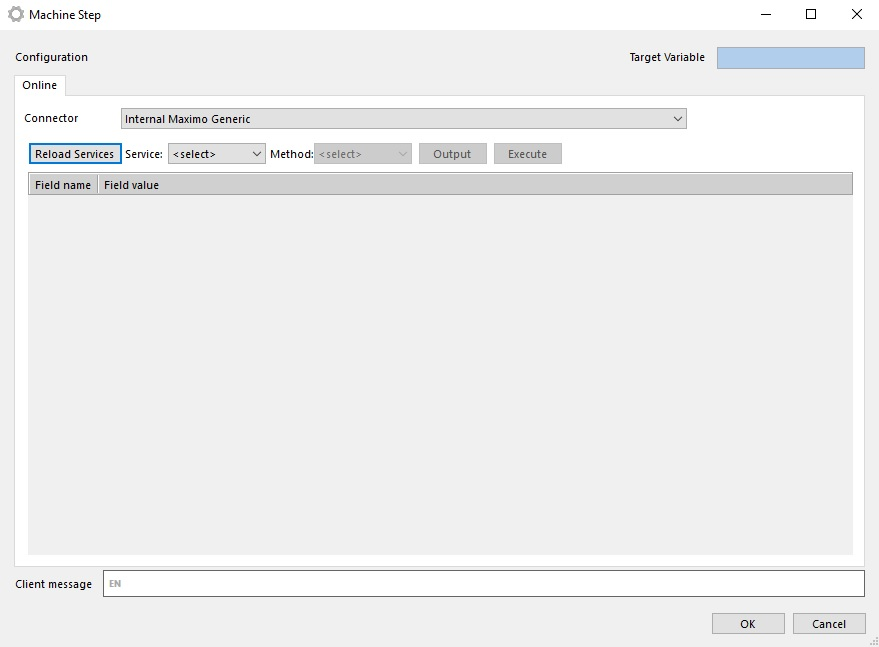

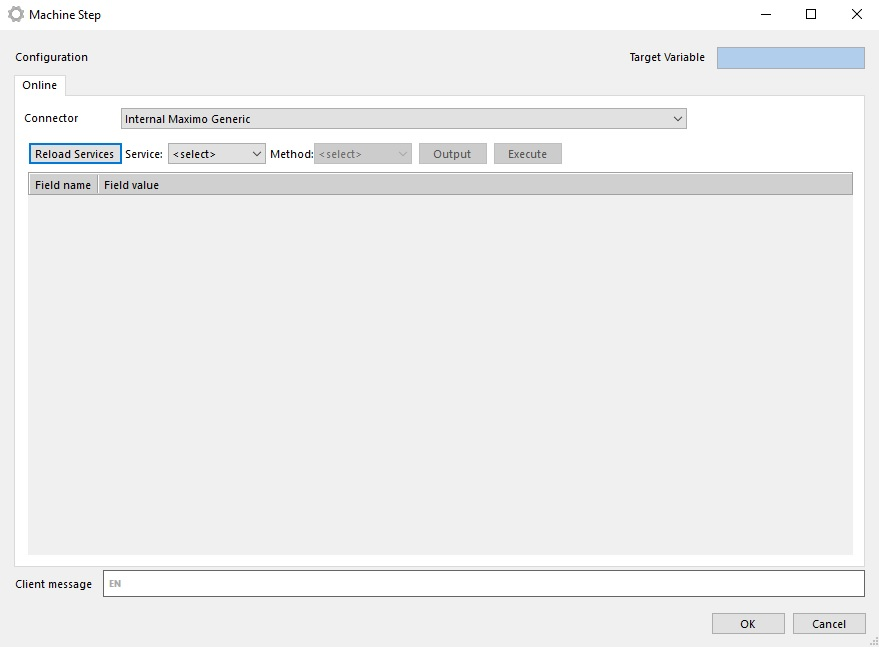

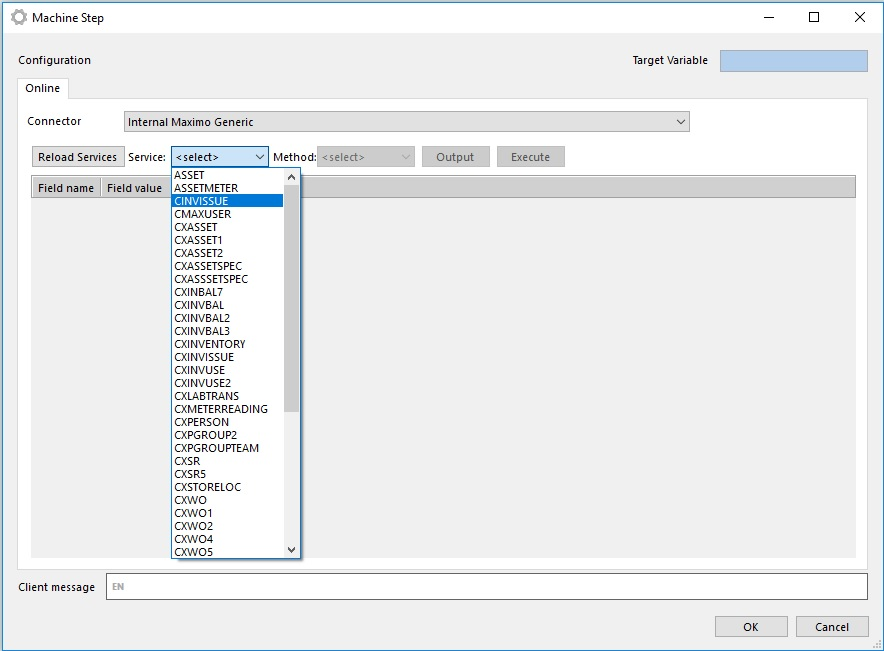

Creating a Maximo Connector Machinestep

Once the connector is configured, it can be used in machine steps. The following steps are required:

- Reload Maximo Web Services

The connector reads the WSDL of the Maximo main web service from MaximoWebServiceAddress (set in the connector configuration), for example:

http://{MaximoWebServiceAddress}/meaweb/wsdl/MXWSREGISTRY.wsdl

It then creates a .NET web service client object and calls the Maximo main web service.

The response from the main web service contains a list of all available Maximo web services.

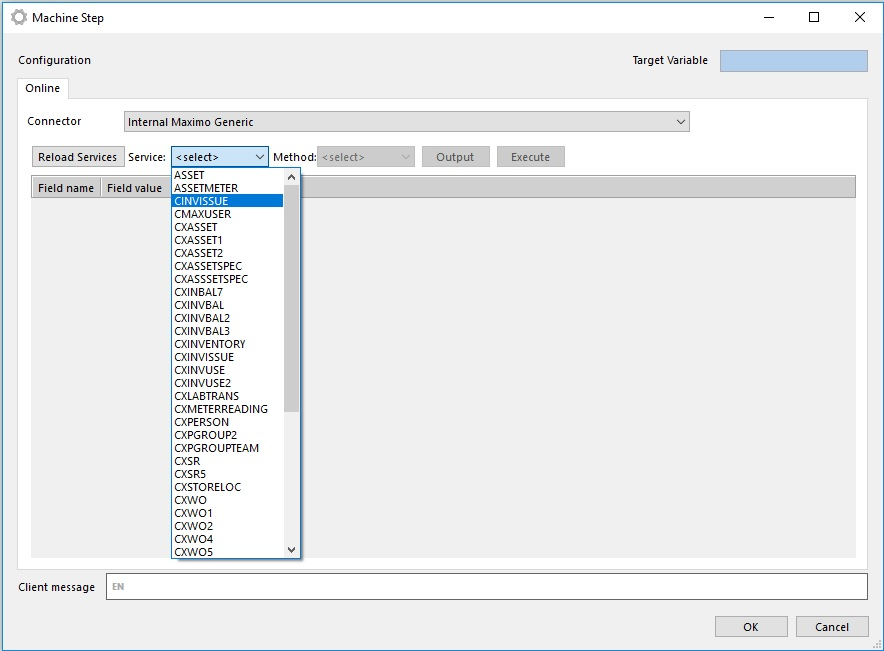

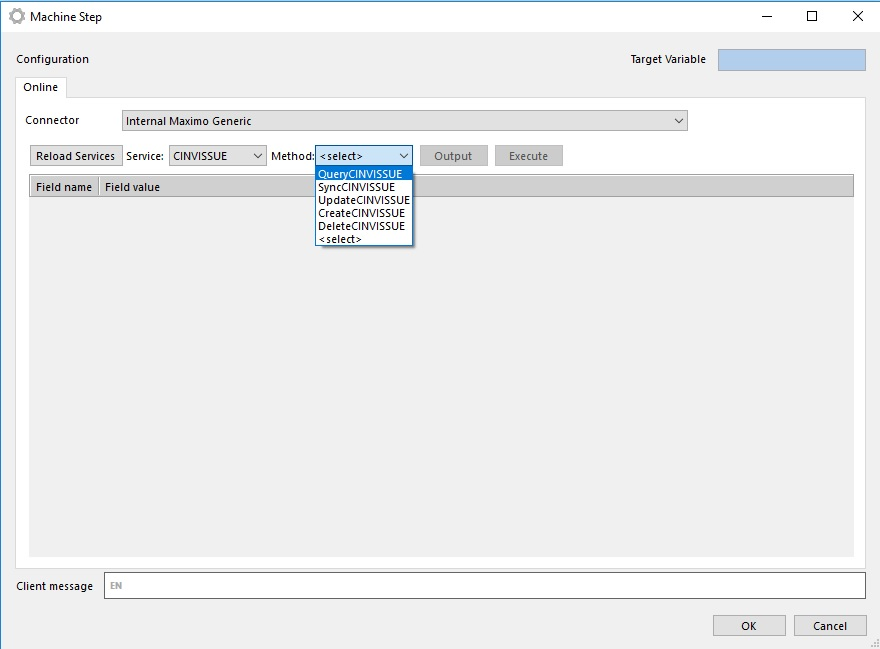

- Choose specific Maximo Web Service

When a specific web service is chosen, the connector reads the WSDL for SelectedWebService from MaximoWebServiceAddress (set in the connector configuration), for example:

http://{MaximoWebServiceAddress}/meaweb/wsdl/{SelectedWebService}.wsdl

It then creates a .NET web service client object and calls the selected Maximo web service.

The response from the selected web service contains a list of all available methods and their parameters.

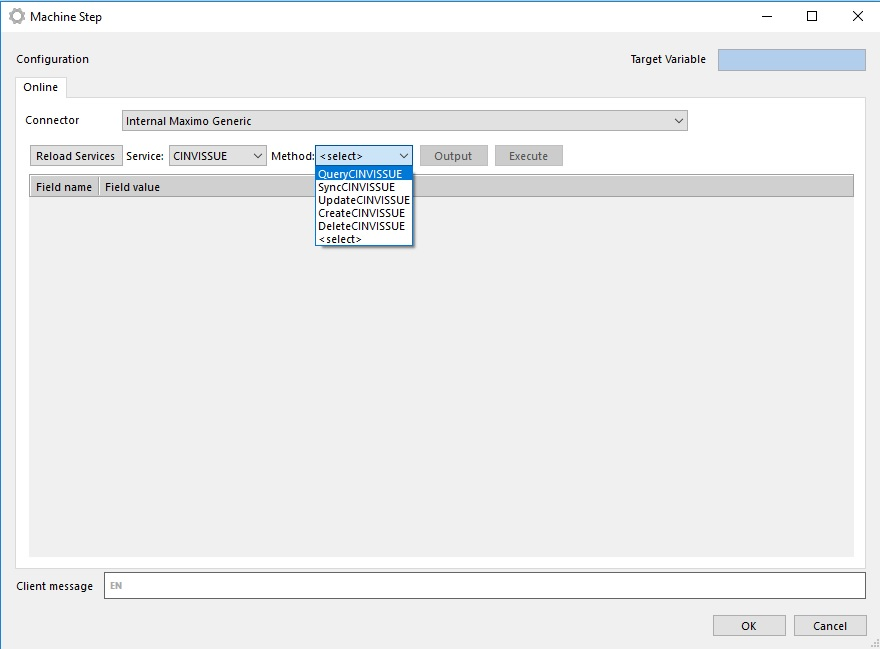

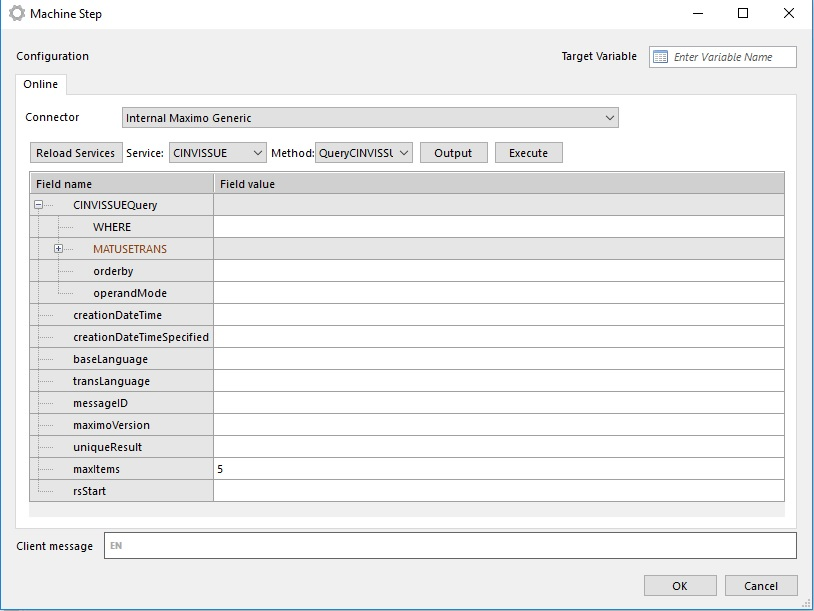

- Choose Method and fill method parameters

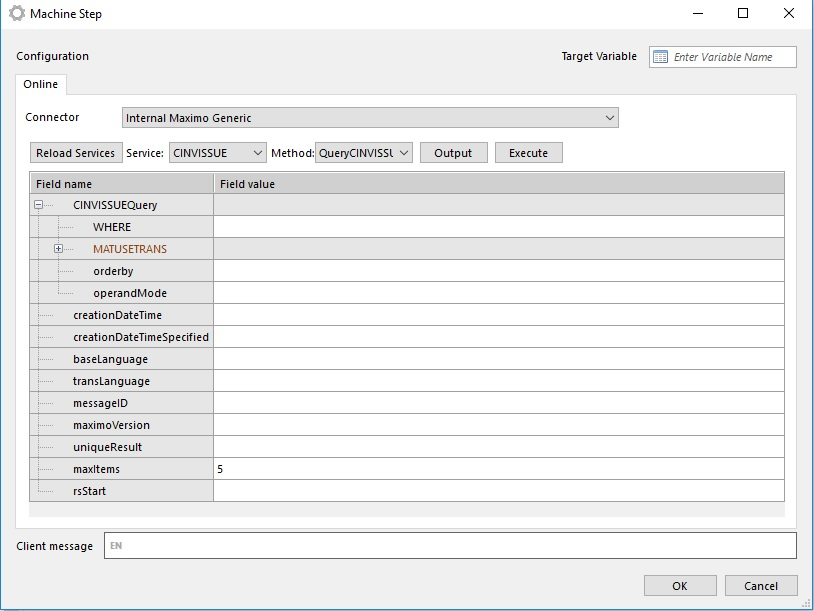

When a specific web service method is chosen, the connector reads all input parameters from the method definition and displays them.

When all required parameters are set, the connector can be used, either by running the workflow or by testing it directly from Flow Studio.

When the connector is executed, it uses the .NET web service client (created in step 2) to execute the method and read the response data.
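Both WSDL addresses follow a fixed pattern built from MaximoWebServiceAddress. A minimal Python sketch of that pattern; the host name and the MXASSET service name are hypothetical examples, and the connector's own .NET client code is not shown.

```python
def registry_wsdl_url(address):
    """WSDL of the Maximo main web service, which lists all available web services."""
    return f"http://{address.rstrip('/')}/meaweb/wsdl/MXWSREGISTRY.wsdl"


def service_wsdl_url(address, selected_web_service):
    """WSDL of one selected web service, which lists its methods and parameters."""
    return f"http://{address.rstrip('/')}/meaweb/wsdl/{selected_web_service}.wsdl"


# Hypothetical configuration value and service name.
print(registry_wsdl_url("maximo.example.com"))
# prints "http://maximo.example.com/meaweb/wsdl/MXWSREGISTRY.wsdl"
print(service_wsdl_url("maximo.example.com", "MXASSET"))
# prints "http://maximo.example.com/meaweb/wsdl/MXASSET.wsdl"
```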

Basic API

About the Novacura Flow Transporter Package

The Novacura Flow SAP connector will call a number of SAP BAPIs in order to retrieve the interface information. The following functions give the required information:

- ZNCFLOW_APPLICATION_COMPONENT: Lists the BAPI application tree.

- BAPI_MONITOR_GETLIST: Returns a list of all available BAPIs in the SAP system.

- BAPI_INTERFACE_GETDOCU: Returns the help text documentation for a BAPI on different levels.

- RFC_GET_FUNCTION_INTERFACE: Returns all parameters for a BAPI or function module in SAP.

- ZNCFLOW_DDIF_FIELDINFO_GET: Returns the data dictionary specification for parameter fields.

BAPI: BAPI_INTERFACE_GETDOCU

With this BAPI you get the documentation for the requested BAPI in the form of help text. This is useful for giving detailed descriptions of the BAPI on a general level or on the method. If you give only the object, you get text on the object; if you give the object and the method, you get details on the method. The input fields are the same as the fields of the resulting table (BAPILIST) of BAPI_MONITOR_GETLIST.

| Field | Description | Type | Spec | Example |
|---|---|---|---|---|
| OBJTYPE | Object type | I | CHAR10 | SFLIGHT |
| OBJNAME | Object name | I | CHAR32 | |
| METHOD | Method name of the object type | I | CHAR32 | GetDetail |
| PARAMETER | Name of parameter in method | I | CHAR32 | |
| FIELD | Field name in the parameter for F4 values | I | CHAR30 | |
| LANGUAGE | Language of the text to be displayed | I | LANG1 | |
| TEXTFORMAT | Format of the text to be displayed | I | CHAR3 | |
| LINKPATTERN | Convert SAPscript hyperlinks to HTML | I | CHAR255 | |
| RETURN | Return messages | O | BAPIRET2 | |
| TEXT | Table for the text | T | BAPITGB | |

Table: BAPITGB

| Field | Description | Spec | Example |
|---|---|---|---|
| LINE | Line in documentation text | CHAR255 | |
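The documentation comes back as rows of the TEXT table, each with a single LINE field. A minimal Python sketch that turns those rows into one string, assuming the rows arrive as a list of dicts and that each row holds one line of text (the sample rows are made up):

```python
def documentation_text(text_rows):
    """Join the TEXT rows (structure BAPITGB, field LINE) of BAPI_INTERFACE_GETDOCU.

    Assumes each row holds one line of text; trailing blanks from the
    fixed-length CHAR255 field are removed.
    """
    return "\n".join(row["LINE"].rstrip() for row in text_rows)


# Sample rows.
rows = [{"LINE": "Find list of flights    "}, {"LINE": "Returns a list of flights."}]
print(documentation_text(rows))
```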

BAPI: BAPI_MONITOR_GETLIST

This BAPI returns information about BAPIs based on their position in the BAPI application tree. Passing * in the first input parameter (OBJECTTYPE) returns a full list of all BAPIs.

The other parameters can be left blank so that the system uses default values. You can select the BAPIs for a list of application nodes by passing the table COMPONENTS2SELECT with one node per row in the field COMPONENT. Together with ZNCFLOW_APPLICATION_COMPONENT, this BAPI gives you enough information to draw the complete application tree containing all BAPIs.
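As a sketch, the input for a call restricted to a few application components can be assembled as a plain dictionary, with table parameters as lists of row dicts. That shape matches what the open-source PyRFC library's Connection.call accepts as keyword arguments, but PyRFC is not part of Flow, the component IDs are examples, and keeping OBJECTTYPE as * together with COMPONENTS2SELECT is an assumption.

```python
def monitor_getlist_params(components=None):
    """Build the input for BAPI_MONITOR_GETLIST.

    components: optional list of application component IDs such as "AP-MD".
    Parameters that are not set are omitted so that SAP uses its defaults.
    """
    params = {"OBJECTTYPE": "*"}  # * lists all object types
    if components:
        # Table parameter COMPONENTS2SELECT: one row per node, field COMPONENT.
        params["COMPONENTS2SELECT"] = [{"COMPONENT": comp} for comp in components]
    return params


# With PyRFC (not part of Flow) the call would look like:
#   conn = pyrfc.Connection(...)
#   result = conn.call("BAPI_MONITOR_GETLIST", **monitor_getlist_params(["AP-MD"]))
#   for bapi in result["BAPILIST"]:
#       print(bapi["ABAPNAME"], bapi["BAPI_TEXT"])
```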

| Field | Description | Type | Spec | Example |
|---|---|---|---|---|
| OBJECTTYPE | Object type | I | CHAR10 | * (will result in full list) |
| SHOW_RELEASE | Release /Reference Release to Display | I | CHAR4 | Use default |
| BAPIS_POTENTIAL | Display Potential BAPIs | I | CHAR1 | Use default |
| BAPIS_NEW | Display New BAPIs in Release | I | CHAR1 | Use default |
| BAPIS_OLD | Display BAPIs from Previous Releases | I | CHAR1 | Use default |
| RELEASED_BAPI | Release Status of BAPIs | I | CHAR1 | Use default |
| RELEASED_FUNC | Release Status of Function Modules | I | CHAR1 | Use default |
| RETURN | Return messages | O | BAPIRET2 | |
| COMPONENTS2SELECT | Application Components/Areas to Select | T | BAPIMONCOM | |
| SYSTEMS2SELECT | Original System of BAPIs to Select | T | BAPISRCSYS | Use default |
| BAPILIST | List of Selected BAPIs | T | BAPIMONSTR | |

Structure: BAPIRET2

| Field | Description | Spec | Example |
|---|---|---|---|
| TYPE | Message type: S Success, E Error, W Warning, I Info, A Abort | CHAR1 | |
| ID | Message Class | CHAR30 | |
| NUMBER | Message Number | NUMC3 | |
| MESSAGE | Message text | CHAR220 | |
| LOG_NO | Application log: log number | CHAR20 | |
| LOG_MSG_NO | Application log: Internal message serial number | NUMC6 | |
| MESSAGE_V1 | Message Variable | CHAR50 | |
| MESSAGE_V2 | Message Variable | CHAR50 | |
| MESSAGE_V3 | Message Variable | CHAR50 | |
| MESSAGE_V4 | Message Variable | CHAR50 | |
| PARAMETER | Parameter Name | CHAR32 | |
| ROW | Lines in parameter | INT4 | |
| FIELD | Field in parameter | CHAR30 | |
| SYSTEM | Logical system from which message originates | CHAR10 | |

Table: COMPONENTS2SELECT

| Field | Description | Spec | Example |
|---|---|---|---|
| COMPONENT | Application component ID | CHAR24 | |

Table: BAPILIST

| Field | Description | Spec | Example |
|---|---|---|---|
| OBJECTTYPE | Object Type | CHAR10 | SFLIGHT |
| OBJECTNAME | Object name | CHAR32 | Flight |
| BAPINAME | Method name of BAPI | CHAR32 | GetDetail |
| ABAPNAME | Function module name | CHAR30 | BAPI_FLIGHT_GETDETAIL |
| COMP | Application component ID | CHAR24 | BC-DWB |
| CREA_REL | Release at Creation | CHAR4 | 610 |
| CREATOR | Author | CHAR12 | SAP |
| UDATE | Changed On | CHAR8 | 18.09.2001 |
| CHANGER | Last changed by | CHAR12 | SAP |
| SOURCESYS | Name of the SAP system | CHAR8 | SAP |
| BAPI_AG | Application area or BAPI work group responsible | CHAR5 | Basis |
| ISINTERFAC | Interface object type | CHAR1 | |
| BAPI_REL | Release status of BAPI method | CHAR1 | X |
| FUNC_REL | Release status of function module | CHAR1 | R |
| OBSOLETE | Release in which the status was set to obsolete | CHAR4 | |
| FM_DOCU | Documentation on function module exist | CHAR1 | |
| BO_DOCU | Documentation for business object exist | CHAR1 | |
| MESTYPE | Message type | CHAR30 | |
| VERB | Object type component | CHAR32 | GETLIST |
| BO_TEXT | Description | CHAR80 | Flight with connection data (SAP training) |
| BAPI_TEXT | Description | CHAR80 | Find list of flights |

BAPI: ZNCFLOW_DDIF_FIELDINFO_GET

This BAPI returns the definition of data dictionary objects; here, it is used for the parameters of BAPIs or function modules returned by RFC_GET_FUNCTION_INTERFACE.

| Field | Description | Type | Spec | Example |
|---|---|---|---|---|
| TABNAME | Name of the Table (of the Type) for which Information is Required | I | CHAR30 | |
| FIELDNAME | Use Parameter LFIELDNAME Instead | I | CHAR30 | |
| LANGU | Language of the Texts | I | LANG1 | |
| LFIELDNAME | If Filled, only Field with this Long Name | I | CHAR132 | |
| ALL_TYPES | Take all Types into Consideration | I | CHAR1 | |
| GROUP_NAMES | Take Named Includes into Consideration | I | CHAR1 | |
| UCLEN | Unicode length with which runtime object was generated | I | RAW1 | |
| DO_NOT_WRITE | Write | I | CHAR1 | |
| X030L_WA | Nametab Header of the Table (of the Type) | E | CHAR30 | |
| DDOBJTYPE | Kind of Type | E | CHAR8 | |
| DFIES_WA | Single Information if Necessary | E | CHAR30 | |
| LINES_DESCR | Information about Other Referenced Types | E | DDTYPEDESC: TYPENAME (CHAR30), TYPEKIND (CHAR4), DFIES | |
| DFIES_TAB | Field List if Necessary | T | DFIES | |
| FIXED_VALUES | Description of Domain Fixed Values | T | DDFIXVALUES | |

Table/Structure: DFIES_TAB

| Field | Description | Spec | Example |
|---|---|---|---|
| TABNAME | Table Name | CHAR30 | |
| FIELDNAME | Field Name | CHAR30 | |
| LANGU | Language Key | LANG1 | |
| POSITION | Position of the field in the table | NUMC4 | |
| OFFSET | Offset of a field | NUMC6 | |
| DOMNAME | Domain name | CHAR30 | |
| ROLLNAME | Data element (semantic domain) | CHAR30 | |
| CHECKTABLE | Table Name | CHAR30 | |
| LENG | Length (No. of Characters) | NUMC6 | |
| INTLEN | Internal Length in Bytes | NUMC6 | |
| OUTPUTLEN | Output Length | NUMC6 | |
| DECIMALS | Number of Decimal Places | NUMC6 | |
| DATATYPE | ABAP/4 Dictionary: Screen data type for Screen Painter | CHAR4 | |
| INTTYPE | ABAP data type (C,D,N,...) | CHAR1 | |
| REFTABLE | Table for reference field | CHAR30 | |
| REFFIELD | Reference field for currency and qty fields | CHAR30 | |
| PRECFIELD | Name of included table | CHAR30 | |
| AUTHORID | Authorization class | CHAR3 | |
| MEMORYID | Set/Get parameter ID | CHAR20 | |
| LOGFLAG | Indicator for writing change documents | CHAR1 | |
| MASK | Template (not used) | CHAR20 | |
| MASKLEN | Template length (not used) | NUMC4 | |
| CONVEXIT | Conversion Routine | CHAR5 | |
| HEADLEN | Maximum length of heading | NUMC2 | |
| SCRLEN1 | Max. length for short field label | NUMC2 | |
| SCRLEN2 | Max. length for medium field label | NUMC2 | |
| SCRLEN3 | Max. length for long field label | NUMC2 | |
| FIELDTEXT | Short Description of Repository Objects | CHAR60 | |
| REPTEXT | Heading | CHAR55 | |
| SCRTEXT_S | Short Field Label | CHAR10 | |
| SCRTEXT_M | Medium Field Label | CHAR20 | |
| SCRTEXT_L | Long Field Label | CHAR40 | |
| KEYFLAG | Identifies a key field of a table | CHAR1 | |
| LOWERCASE | Lowercase letters allowed/not allowed | CHAR1 | |
| MAC | Flag if search help is attached to the field | CHAR1 | |
| GENKEY | Flag (X or Blank) | CHAR1 | |
| NOFORKEY | Flag (X or Blank) | CHAR1 | |
| VALEXI | Existence of fixed values | CHAR1 | |
| NOAUTHCH | Flag (X or Blank) | CHAR1 | |
| SIGN | Flag for sign in numerical fields | CHAR1 | |
| DYNPFLD | Flag: field to be displayed on the screen | CHAR1 | |
| F4AVAILABL | Does the field have an input help | CHAR1 | |
| COMPTYPE | DD: Component Type | CHAR1 | |
| LFIELDNAME | Field name | CHAR132 | |
| LTRFLDDIS | Basic write direction has been defined LTR (left-to-right) | CHAR1 | |
| BIDICTRLC | DD: No Filtering of BIDI Formatting Characters | CHAR1 | |
| OUTPUTSTYLE | DD: Output Style (Output Style) for Decfloat Types | NUMC2 | |
| NOHISTORY | DD: Flag for Deactivating Input History in Screen Field | CHAR1 | |
| AMPMFORMAT | DD: Indicator whether AM/PM time format is required | CHAR1 | |

Table: FIXED_VALUES

| Field | Description | Spec | Example |
|---|---|---|---|
| LOW | Values for Domains: Single Value / Upper Limit | CHAR10 | |
| HIGH | Values for domains: upper limit | CHAR10 | |
| OPTION | Option for domain fixed values | CHAR2 | |
| DDLANGUAGE | Language Key | LANG1 | |
| DDTEXT | Short Text for Fixed Values | CHAR60 | |

BAPI: RFC_GET_FUNCTION_INTERFACE

This BAPI will return all parameters and parameter attributes for a specific BAPI or function module. The function module name (FUNCNAME) is the same value that was returned for each BAPI in the call to BAPI_MONITOR_GETLIST (field ABAPNAME in table BAPILIST).

| Field | Description | Type | Spec | Example |
|---|---|---|---|---|
| FUNCNAME | Name of the function module | I | CHAR30 | BAPI_FLIGHT_GETDETAIL |
| LANGUAGE | Language of the parameter text | I | LANG1 | |
| NONE_UNICODE_LENGTH | Length is also supplied in Unicode systems in non-Unicode format | I | CHAR1 | |
| REMOTE_BASXML_SUPPORTED | BasXML protocol supported | E | CHAR1 | |
| REMOTE_CALL | Function module can be called remotely (RFC) | E | CHAR1 | |
| UPDATE_TASK | Function module is an update function module | E | CHAR1 | |
| PARAMS | Parameters of the function module | T | RFC_FUNINT | |
| RESUMABLE_EXCEPTIONS | Resumable exceptions | T | RSEXC | |

Table: PARAMS

| Field | Description | Spec | Example |
|---|---|---|---|
| PARAMCLASS | Parameter type | CHAR1 | I |
| PARAMETER | Parameter name | CHAR30 | AIRLINEID |
| TABNAME | Table Name | CHAR30 | BAPISFLKEY |
| FIELDNAME | Field Name | CHAR30 | AIRLINEID |
| EXID | ABAP Data Type | CHAR1 | C |
| POSITION | Position of field in structure (from 1) | CHAR10 | 1 |
| OFFSET | Field offset from beginning of structure (from 0) | INT10 | 0 |
| INTLENGTH | Internal length of field | INT10 | 6 |
| DECIMALS | Number of decimal places | INT10 | 0 |
| DEFAULT | Default value for import parameter | CHAR21 | |
| PARAMTEXT | Short text | CHAR79 | Airline Code |
| OPTIONAL | Optional parameters | CHAR1 | |
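The Import, Export and Tables tabs of the machine step configuration correspond to the parameter classes in this table. A Python sketch of that grouping, assuming the rows arrive as dicts and that OPTIONAL is X for optional parameters (the usual SAP flag convention); the class-to-tab mapping is a reading of this documentation, not the connector's code.

```python
# PARAMCLASS codes in RFC_FUNINT: I = importing, E = exporting, T = tables,
# C = changing, X = exception. Only the first three are mapped to tabs here.
TAB_FOR_CLASS = {"I": "Import", "E": "Export", "T": "Tables"}


def group_parameters(params):
    """Sort PARAMS rows from RFC_GET_FUNCTION_INTERFACE into Import/Export/Tables."""
    tabs = {"Import": [], "Export": [], "Tables": []}
    for row in params:
        tab = TAB_FOR_CLASS.get(row["PARAMCLASS"])
        if tab is None:
            continue  # changing parameters and exceptions are skipped in this sketch
        tabs[tab].append({
            "name": row["PARAMETER"],
            # Type reference: a structure (TABNAME only) or a single field
            # (TABNAME-FIELDNAME). Structures can be expanded further with
            # ZNCFLOW_DDIF_FIELDINFO_GET.
            "type": "-".join(part for part in (row["TABNAME"], row["FIELDNAME"]) if part),
            "optional": row.get("OPTIONAL") == "X",
            "text": row.get("PARAMTEXT", ""),
        })
    return tabs


# The example row from the PARAMS table above.
example = [{"PARAMCLASS": "I", "PARAMETER": "AIRLINEID", "TABNAME": "BAPISFLKEY",
            "FIELDNAME": "AIRLINEID", "OPTIONAL": "", "PARAMTEXT": "Airline Code"}]
tabs = group_parameters(example)
# tabs["Import"][0]["type"] == "BAPISFLKEY-AIRLINEID"
```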

BAPI: ZNCFLOW_APPLICATION_COMPONENT

This BAPI returns the BAPI application tree. The resulting table contains all nodes in the BAPI tree, indicating the level of each node and its superior node. The input parameter can list all nodes (pass only *) or a specific component. A trailing * returns all nodes whose name starts with the given text: for example, AP-MD* returns all nodes starting with AP-MD, that is, all nodes below that node.

| Field | Description | Type | Spec | Example |
|---|---|---|---|---|
| COMP | Application Component | I | CHAR24 | * (will result in full list) |

Table: COMPONENTS

| Field | Description | Spec | Example |
|---|---|---|---|
| COMP | Application component ID | CHAR24 | AP-MD-BF |
| NAME | Short text | CHAR60 | Master data |
| LEVEL | Numc3, internal use | NUMC3 | 002 |
| SUPERIOR | Application component ID | CHAR220 | AP-MD |
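Combining the COMPONENTS rows of this BAPI with the BAPILIST rows of BAPI_MONITOR_GETLIST is enough to draw the application tree, as described above. A Python sketch of one way to do it, assuming both results arrive as lists of dicts and that a blank or unknown SUPERIOR marks a top-level node; the sample component names are illustrative.

```python
def build_tree(components, bapis):
    """Nest application components under their SUPERIOR and attach BAPIs by COMP.

    components: COMPONENTS rows from ZNCFLOW_APPLICATION_COMPONENT
    bapis: BAPILIST rows from BAPI_MONITOR_GETLIST
    Returns the root nodes; every node has "comp", "name", "children" and "bapis".
    """
    nodes = {row["COMP"]: {"comp": row["COMP"], "name": row["NAME"],
                           "children": [], "bapis": []}
             for row in components}
    roots = []
    for row in components:
        parent = nodes.get(row["SUPERIOR"])
        if parent is None:
            roots.append(nodes[row["COMP"]])  # no known superior node: top level
        else:
            parent["children"].append(nodes[row["COMP"]])
    for bapi in bapis:
        node = nodes.get(bapi["COMP"])
        if node is not None:  # BAPIs in components outside the list are ignored
            node["bapis"].append(bapi["ABAPNAME"])
    return roots


def print_tree(nodes, indent=0):
    """Print components with their BAPIs, indented by level."""
    for node in nodes:
        print("  " * indent + f'{node["comp"]} {node["name"]}')
        for abap_name in node["bapis"]:
            print("  " * (indent + 1) + abap_name)
        print_tree(node["children"], indent + 1)


# Sample data.
components = [
    {"COMP": "BC", "NAME": "Basis", "SUPERIOR": ""},
    {"COMP": "BC-DWB", "NAME": "ABAP Workbench", "SUPERIOR": "BC"},
]
bapis = [{"COMP": "BC-DWB", "ABAPNAME": "BAPI_FLIGHT_GETDETAIL"}]
print_tree(build_tree(components, bapis))
# BC Basis
#   BC-DWB ABAP Workbench
#     BAPI_FLIGHT_GETDETAIL
```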

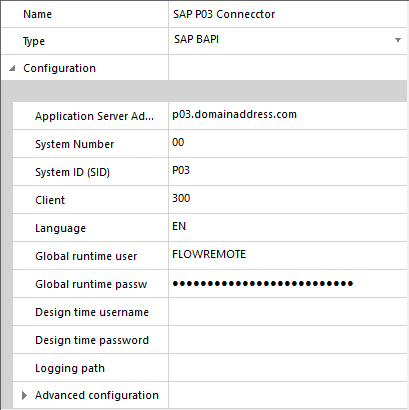

SAP BAPI Connector

Introduction

Novacura Flow is delivered with an API connector for SAP ECC (ECC 6 and newer), through which Flow communicates with SAP using the SAP BAPIs.

In order to use the SAP connector in Flow, the connector needs a license for the current environment.

Getting started

There are a few things that need to be done before you can start using the SAP connector.

In short, these five steps need to be done before creating workflows with the SAP connector (assuming that you have already installed the Flow Server):

- Install the SAP GUI for Windows on the Flow server. This is necessary for Flow to be able to connect to SAP.

- Verify that the connection between the Flow Server and SAP works, using the SAP Logon Pad.

- Install the Novacura Flow Transporter package in the SAP environment you want to connect to. The Transporter package installs a few BAPIs that the connector needs to generate an API tree in the Flow Studio. Download the Transporter package here.

- Make sure you have a license for the SAP Connector (read more here).

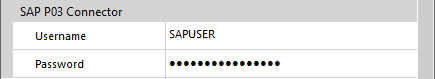

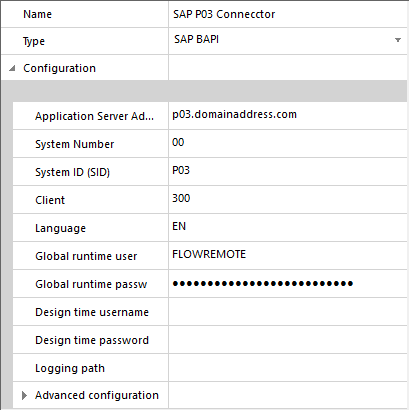

- Configure the SAP Connector in the Flow Studio (read more here).

Steps 3 to 5 are described below.

Designing a SAP workflow

For an example of an SAP workflow, you can find a simple workflow that uses the ABAP Workbench BAPIs for flight booking here: http://community.novacuraflow.com/product/sap-flight-booking-example/

Make sure you have these BAPIs in your SAP environment before you use this workflow.

When in the Flow Studio, adding a Machine Step to your workflow will let you create a connection to SAP. After selecting the SAP connector you have defined in Environment/Connectors, you will be able to configure a method call to SAP with the following steps.

Get the list of BAPIs: When selecting your SAP connector in the list of available connectors, press the button with the three dots. This connects to your SAP environment and generates the entire BAPI tree in the connector, so whatever BAPIs you have in your installation of SAP are accessible directly from Flow.

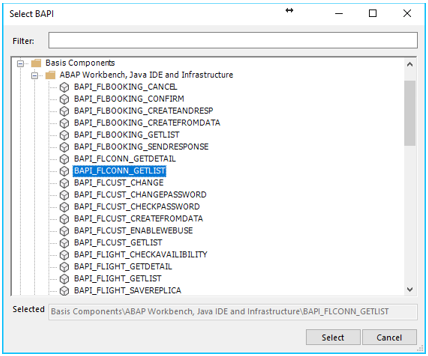

Select a BAPI: After the BAPI tree has been generated, you will see all BAPIs arranged in a folder structure. You can find the BAPI you need either by browsing the structure or by searching for it with the Filter option.

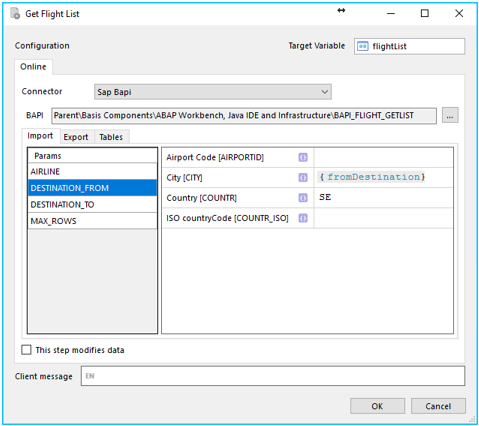

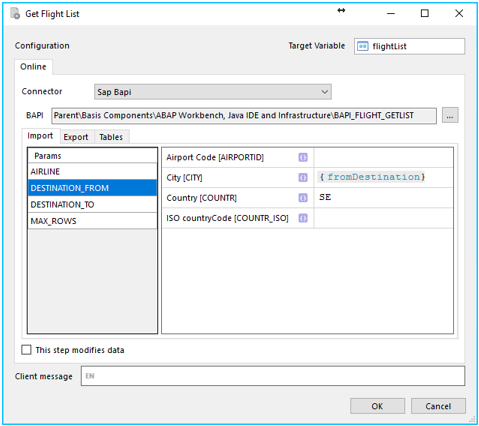

Configure the BAPI call (Input):

There are three tabs that can be used to configure the BAPI call:

- Import: input data to the BAPI

- Export: output data from the BAPI

- Tables: both input and output. One or many tables can be used here.

First, define the input. You can either set fixed data by just typing the value you need or by using existing (single) variables in the workflow. Note that Input can be set both on the Import and Tables tabs.

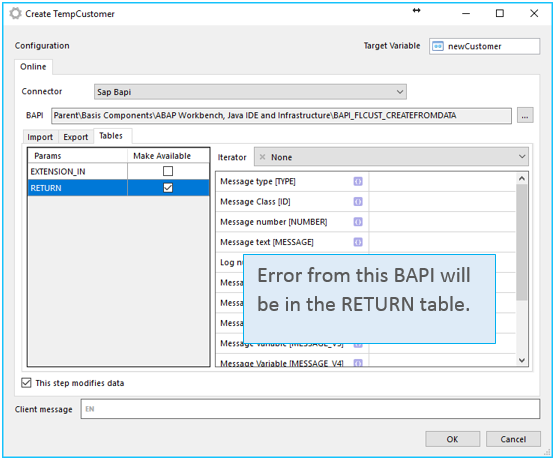

Configure the BAPI call (Output): All data returned from the called BAPI ends up in the Target Variable, so the Target Variable must be set (as in all machine steps returning data). There are two tabs for output: Export and Tables. Export contains single variables returned from the BAPI; Tables contains table variables returned from the BAPI. Depending on the BAPI, Export and Tables look and function differently.

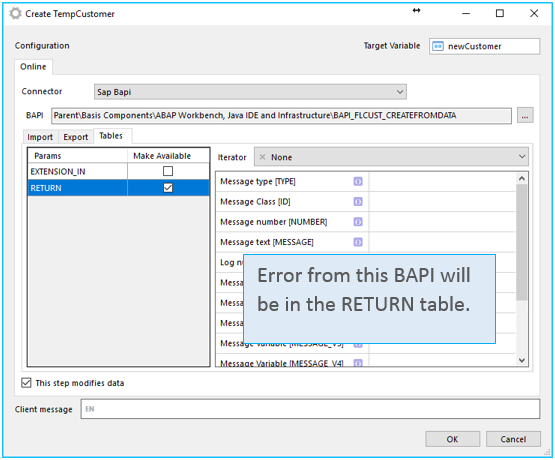

Configuring Commit step

Selecting the check box This step modifies data ensures that a BAPI commit step is executed. Check this box for any BAPI that updates or inserts data in the ECC system. For a BAPI that only reads data, it can be left unchecked.

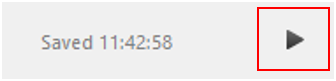

When you have configured the BAPI call, you can test it using the Play button at the top right of the application window in the Studio.

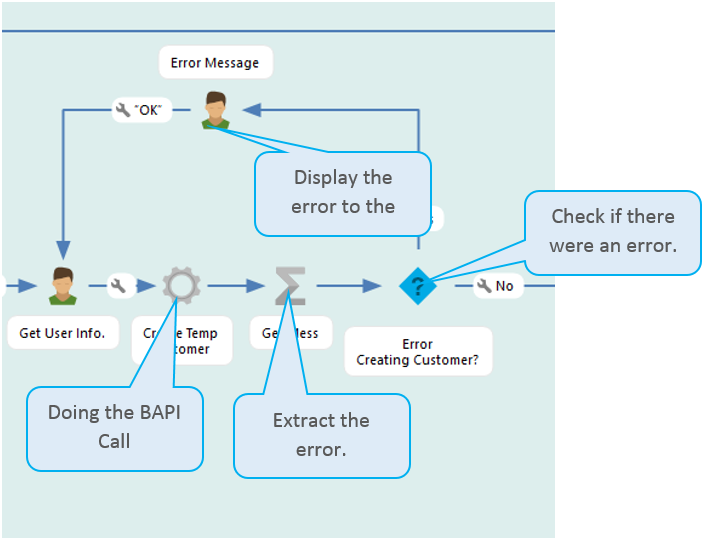

Error handling

SAP returns errors as output from the BAPI. This means that you need to handle errors from SAP in the workflow itself.
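Many BAPIs report problems through a RETURN parameter of type BAPIRET2 (described above), where TYPE E (error) or A (abort) indicates a failure. The Python sketch below shows the check a workflow would typically make, for example in a Decision Step, before continuing; the function name is hypothetical and the messages are sample data.

```python
# BAPIRET2-TYPE values: S success, E error, W warning, I info, A abort.
ERROR_TYPES = {"E", "A"}


def bapi_errors(return_value):
    """Return the error and abort messages from a BAPI RETURN parameter.

    return_value: a single BAPIRET2 record (dict) or a table of them (list of dicts).
    """
    if not return_value:
        return []
    rows = [return_value] if isinstance(return_value, dict) else list(return_value)
    return [row for row in rows if row.get("TYPE") in ERROR_TYPES]


# Sample RETURN table with one success and one error message.
messages = [
    {"TYPE": "S", "MESSAGE": "Document posted"},
    {"TYPE": "E", "MESSAGE": "Flight does not exist"},
]
for error in bapi_errors(messages):
    print(error["MESSAGE"])  # prints "Flight does not exist"
```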

So, now you can create any app directly on top of your SAP environment!

Add attached file (from bytes)

Upload a file as an attachment to a task item.

Parameters

| Parameter | Description |
|---|---|
| Custom List app name | Name of the Custom List app in SharePoint (not its ID) |
| Item ID | ID of the item |
| Remote filename | Remote filename |
| Source bytes | Table (consisting of bytes) to read from |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code describing the failure; 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |
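A sketch of how a caller might interpret the output record, in Python. The record is modelled as a dict with the members listed above, the error codes are copied from this page, and the function name is hypothetical.

```python
# Error codes listed for the attachment operations on this page
# (1000005 is listed for Delete attached file).
ERROR_CODES = {
    1000: "Unspecified error.",
    1000001: "User is not authorized to perform operation.",
    1000004: "Item with given id does not exist.",
    1000005: "File not found.",
}


def check_result(result):
    """Return the output record unchanged, or raise RuntimeError if HasFailed is set."""
    if not result["HasFailed"]:
        return result
    code = result.get("ErrorCode", 1000)
    description = ERROR_CODES.get(code, "Unknown error code.")
    detail = result.get("ErrorMessage") or ""
    raise RuntimeError(f"{code}: {description} {detail}".strip())


# Sample output record of a failed upload.
try:
    check_result({"HasFailed": True, "ErrorMessage": "", "ErrorCode": 1000004})
except RuntimeError as error:
    print(error)  # prints "1000004: Item with given id does not exist."
```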

Add attached file (from file system)

Upload a file as an attachment to a task item.

Parameters

| Parameter | Description |
|---|---|
| Custom List app name | Name of the Custom List app in SharePoint (not its ID) |
| Item ID | ID of the item |
| Remote filename | Remote filename |
| Local filename | Full path to the file to upload. Note that the user running the Flow Server application pool must have access to the file. |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code describing the failure; 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Add attached file (from stream)

Upload a file as an attachment to a task item.

Parameters

| Parameter | Description |
|---|---|
| Custom List app name | Name of the Custom List app in SharePoint (not its ID) |
| Item ID | ID of the item |
| Filename | Remote filename |
| Source stream | Simple variable containing a stream |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code describing the failure; 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Delete all attached files

Delete all attachments on the provided task item.

Parameters

| Parameter | Description |
|---|---|
| Custom List app name | Name of the Custom List app in SharePoint (not its ID) |
| Item ID | ID of the item |
| Filename | Remote filename |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Delete attached file

Deletes an attachment on the provided item.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item ID | ID of item |

| Filename | Remote filename |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

| 1000005 | File not found. |

List attached files

Lists all files attached to the specified item.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item ID | ID of item |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Delete item

Deletes item with given ID.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item ID | ID of item |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Get items by query

Gets information about items that match the provided CAML query.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| CAML Query | The query to send to SharePoint |
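CAML (Collaborative Application Markup Language) is SharePoint's XML query format. As an illustration only, the sketch below builds a query that matches items whose field equals a given value. It assumes the connector accepts a complete `<View>` element; this reference does not say whether the `<View>` wrapper or only the inner `<Query>` is expected, so verify against your environment. The `FieldRef` name is assumed to be the field's StaticName, and `caml_equals` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def caml_equals(static_name: str, value: str, value_type: str = "Text") -> str:
    """Build a CAML query matching items whose field equals value.

    static_name is the field's StaticName (internal name), not its Title.
    """
    view = ET.Element("View")
    query = ET.SubElement(view, "Query")
    where = ET.SubElement(query, "Where")
    eq = ET.SubElement(where, "Eq")
    ET.SubElement(eq, "FieldRef", Name=static_name)
    val = ET.SubElement(eq, "Value", Type=value_type)
    val.text = value  # ElementTree escapes &, < and > when serializing
    return ET.tostring(view, encoding="unicode")
```

Building the XML with ElementTree rather than string formatting means values such as "Fish & Chips" are escaped correctly.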

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Results contains the following members:

- StaticName

- Value

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

List all items

Lists all items in Custom List app.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Row limit | Limit on how many items to list. A value of -1 indicates no limit |
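The Row limit rule can be expressed as a small function. This is a hypothetical client-side illustration of the documented behaviour (-1 means no limit); how the connector treats 0 or other negative values is not covered here, so the sketch's choices for those cases are assumptions.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

NO_LIMIT = -1  # documented "no limit" value for Row limit


def apply_row_limit(items: Sequence[T], row_limit: int) -> List[T]:
    """Return at most row_limit items; -1 returns all of them."""
    if row_limit == NO_LIMIT:
        return list(items)
    if row_limit < 0:
        # Assumption: values below -1 are treated as invalid.
        raise ValueError("Row limit must be -1 or a non-negative integer")
    return list(items[:row_limit])
```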

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Results contains the following members:

- StaticName

- Value

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

List fields of items

Lists the fields that are available for items in Custom List app.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Create new item

Creates a new item in a Custom List app. Returns the ID of the created item in Results.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item name | Title of new item |

| Folder url | URL of the folder to create the item in. Leave empty for the root folder. For example, to add an item to folder 'folder1' in Custom List 'clist' in subsite 'subsite1', use '/subsite1/Lists/clist/folder1'. |
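The example folder URL follows the pattern /subsite/Lists/list/folder. A minimal sketch of composing the Folder url value, assuming that pattern holds for your site; `folder_url_parameter` is a hypothetical helper. The list segment comes from the list's URL, which in SharePoint can differ from its display name (renaming a list changes its title, not its URL).

```python
def folder_url_parameter(list_url_name: str, folder_path: str = "",
                         subsite: str = "") -> str:
    """Compose a Folder url such as '/subsite1/Lists/clist/folder1'.

    Returns an empty string for the root folder, as the parameter
    description asks. An empty subsite means the root site.
    """
    if not folder_path.strip("/"):
        return ""  # documented: leave empty for the root folder
    parts = [p.strip("/") for p in (subsite, "Lists", list_url_name, folder_path)]
    return "/" + "/".join(p for p in parts if p)
```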

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| Results | Simple variable containing the results of operation |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Create new item, with field values

Creates a new item in a Custom List app. Returns the ID of the created item in Results.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item name | Title of new item |

| Field values | Table of field values. Note that fields must be identified by their StaticName, not their Title. |

| Folder url | URL of the folder to create the item in. Leave empty for the root folder. For example, to add an item to folder 'folder1' in Custom List 'clist' in subsite 'subsite1', use '/subsite1/Lists/clist/folder1'. |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| Results | Simple variable containing the results of operation |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Update item field

Updates a field of a given item.

Parameters

| Parameter | Description |

|---|---|

| Custom List app name | Name of Custom List app in SharePoint (not id) |

| Item ID | ID of item |

| Field | Name of field/column (StaticName, not Title) |

| Value | New value of the field |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Check in file

Checks in a file that the user has checked out.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Comment | Comment, must not exceed 1023 characters |

| Checkin type | Specifies check in type |
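A hypothetical pre-check for the 1023-character limit on the check-in Comment, useful before calling the operation. It counts Python characters (code points); whether SharePoint counts characters the same way for text outside the Basic Multilingual Plane is an assumption.

```python
MAX_CHECKIN_COMMENT = 1023  # limit stated for the Comment parameter


def validate_checkin_comment(comment: str) -> str:
    """Return the comment unchanged, or raise if it exceeds the limit."""
    if len(comment) > MAX_CHECKIN_COMMENT:
        raise ValueError(
            f"check-in comment has {len(comment)} characters; "
            f"the maximum is {MAX_CHECKIN_COMMENT}"
        )
    return comment


def truncate_checkin_comment(comment: str) -> str:
    """Alternative policy: silently cut the comment to the limit."""
    return comment[:MAX_CHECKIN_COMMENT]
```

Rejecting is safer when the comment carries meaning; truncating suits auto-generated comments such as log excerpts.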

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000002 | User has not checked out the file. |

Check out file

Checks out a file to the SharePoint user the connector is running as.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000003 | File already checked out. |

Discard check out

Undoes the check-out of a file.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000002 | User has not checked out the file. |
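Check out file, Check in file and Discard check out are typically used together. The sketch below shows one way to sequence them so that a failed edit does not leave the file checked out. The four callables are hypothetical stand-ins for the connector operations, each returning a dict shaped like the output Record; only HasFailed and ErrorCode are inspected.

```python
from typing import Callable, Dict

# Hypothetical Record shape: {"HasFailed": bool, "ErrorCode": int, ...}
Result = Dict[str, object]


def edit_with_checkout(check_out: Callable[[], Result],
                       edit: Callable[[], Result],
                       check_in: Callable[[], Result],
                       discard: Callable[[], Result]) -> Result:
    """Check out, edit, then check in; discard the check-out if the edit fails."""
    out = check_out()
    if out["HasFailed"]:
        # e.g. ErrorCode 1000003 (file already checked out): nothing to undo
        return out
    edited = edit()
    if edited["HasFailed"]:
        discard()  # undo the check-out so the file is not left checked out
        return edited
    return check_in()
```

Because Check out file runs as the connector's SharePoint user, the matching check-in or discard must also run as that user, otherwise error 1000002 is expected.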

Copy file

Copies a file from a Document Library to another (or the same) Document Library at the same SharePoint site.

Parameters

| Parameter | Description |

|---|---|

| Source Document Library Name | Name of source Document Library in SharePoint to copy file from |

| Source filename | Remote filename of the file to copy, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Destination Document Library Name | Name of target Document Library in SharePoint to copy file to |

| Destination filename | Name of file in destination Document Library |

| Overwrite | Specifies whether to overwrite destination file if it already exists |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Delete file

Deletes a file in the Document Library. If the file does not exist, the operation is considered successful.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename of the file to delete, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Get file information

Gets information about a file provided as a relative URL, for example '/subsite1/Shared Documents/file.doc'.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Record variable containing the results of operation |

Structure of Results

Each user-related member of Results (for example Author) contains the following members:

- Id

- LoginName

- Title

- IsSiteAdmin

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

List files

Lists all files at the root of a Document Library

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Populate user members | Specifies whether to populate members that relate to SharePoint users, e.g. Author. |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Each user-related member of Results (for example Author) contains the following members:

- Id

- LoginName

- Title

- IsSiteAdmin

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Move file

Moves a file from a Document Library to another (or the same) Document Library at the same SharePoint site.

Parameters

| Parameter | Description |

|---|---|

| Source Document Library Name | Name of source Document Library in SharePoint to move file from |

| Source filename | Remote filename of the file to move, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Destination Document Library Name | Name of target Document Library in SharePoint to move file to |

| Destination filename | Name of file in destination Document Library |

| Move operations | Specifies move options. |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

Update file property

Parameters

| Parameter | Description |

|---|---|

| Server relative url | A URL relative to the server, e.g. "/subsite/doclib/folder/file.txt". |

| Field name | Name of field or column |

| New value | The new value to set on field |
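Several operations take a server-relative URL such as "/subsite/doclib/folder/file.txt". A minimal sketch of assembling one from its parts, assuming no percent-encoding is needed (the '/subsite1/Shared Documents/file.doc' example in this reference contains an unencoded space). `server_relative_url` is a hypothetical helper. The library segment is the library's URL name, which can differ from the Document Library Name; in many SharePoint sites the default library is titled "Documents" but lives at "Shared Documents".

```python
def server_relative_url(library_url_name: str, filename: str,
                        subsite: str = "") -> str:
    """Join subsite, library and file path into a server-relative URL.

    filename may include folders, e.g. "folder/file.txt".
    An empty subsite means the root site.
    """
    parts = [p.strip("/") for p in (subsite, library_url_name, filename)]
    return "/" + "/".join(p for p in parts if p)
```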

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Create subfolder

Creates a new folder beneath the specified folder. If the folder already exists, the operation is considered successful.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Parent folder | Name of folder to create sub folders in. E.g. "folder1" or "folder1/subfolder2" |

| Name of sub folder | Name of folder to create |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Delete folder

Deletes a folder by its server-relative URL.

Parameters

| Parameter | Description |

|---|---|

| Server relative url of folder | A URL relative to the server, e.g. "/subsite/doclib/folder" |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

List files in folder

Lists all files in a specified folder.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Folder | Remote folder in Document Library, can include sub folders if applicable. E.g. "subfolder1/subfolder2" |

| Populate user properties | Specifies whether to populate members that relate to SharePoint users, e.g. Author |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Each user-related member of Results (for example Author) contains the following members:

- Id

- LoginName

- Title

- IsSiteAdmin

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

List files in folder by relative url

Lists all files in a folder specified by relative URL. This is useful, for instance, if you have a Record containing folder information, including its relative URL, and want to list the files in that folder.

Parameters

| Parameter | Description |

|---|---|

| Server relative url | A URL relative to the server, e.g. "/subsite/doclib/folder". |

| Populate user properties | Specifies whether to populate members that relate to SharePoint users, e.g. Author |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Each user-related member of Results (for example Author) contains the following members:

- Id

- LoginName

- Title

- IsSiteAdmin

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

List folders in root of Document Library

Lists all folders in the root of the Document Library.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

List subfolders of folder

Lists all subfolders of specified folder.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Folder | Name of folder to list sub folders of. E.g. "subfolder1/subfolder2" |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Get subfolders by relative url

Lists all subfolders of the specified folder, given by relative URL. This is useful, for instance, if you have a Record containing folder information, including its relative URL, and want to list the subfolders of that folder.

Parameters

| Parameter | Description |

|---|---|

| Server relative url | A URL relative to the server, e.g. "/subsite/doclib/folder" |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Table variable containing the results of operation |

Structure of Results

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Download file by url to file system

Downloads a single file by server relative url to file system.

Parameters

| Parameter | Description |

|---|---|

| Server relative url | An url relative to server, e.g. "/subsite/doclib/folder/file.txt". |

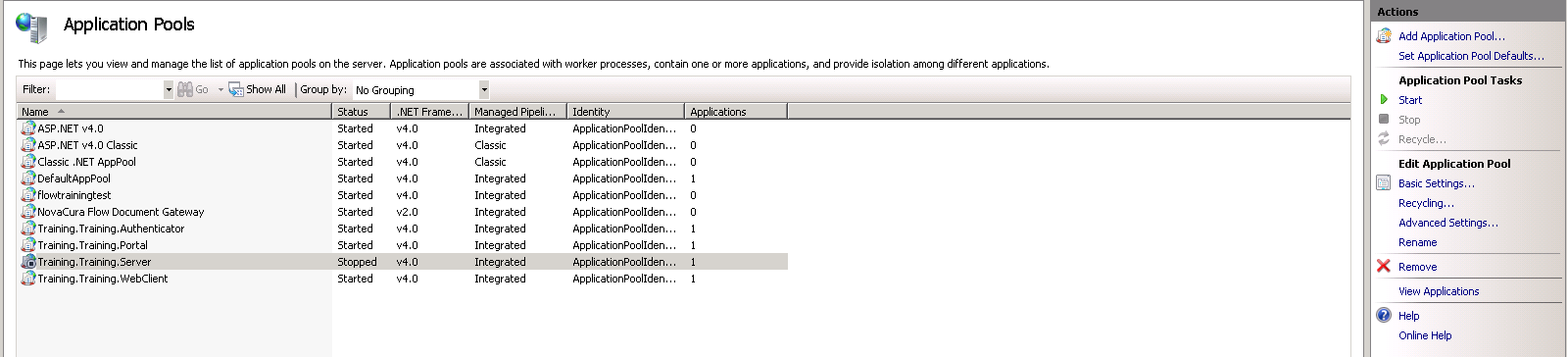

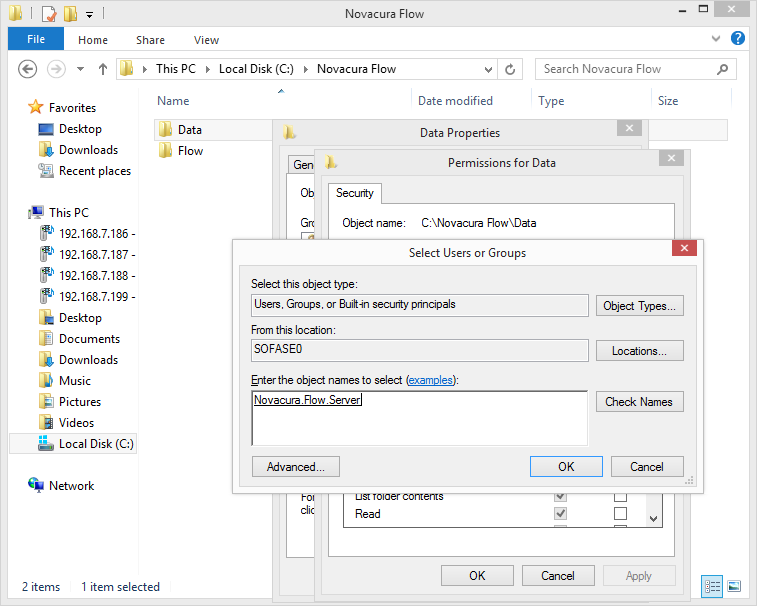

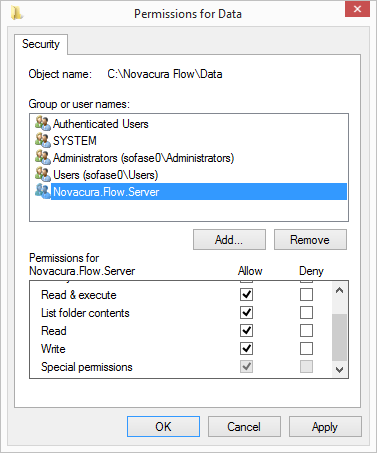

| Local filename | Full path where to download file. Note that the user the application pool running Flow Server must have write access to the file. If the file already exists, it is overwritten. |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Download file by url to stream

Downloads a single file by server relative url to a simple variable (stream).

Parameters

| Parameter | Description |

|---|---|

| Server relative url | A URL relative to the server, e.g. "/subsite/doclib/folder/file.txt". |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Simple variable containing the results of operation |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Download file to file system

Downloads a single file from Document Library by path to file system.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Remote filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Local filename | Full path to download the file to. Note that the account the Flow Server application pool runs as must have write access to the file. If the file already exists, it is overwritten. |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Download file to stream

Downloads a single file to a simple variable (stream).

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

Output

Record with information about whether operation failed or not. If successful, the result of the operation is stored in the Results member.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

| Results | Simple variable containing the results of operation |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Upload file from bytes

Uploads a single file from a Table of bytes to a SharePoint Document Library.

Parameters

| Parameter | Description |

|---|---|

| Source bytes | Table (consisting of bytes) to read from |

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename to use in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Overwrite if exists | Specifies whether to overwrite remote file if it already exists or abort |
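Source bytes is described as a Table of bytes. Assuming one byte value (0 to 255) per row, the hypothetical helpers below show how a local file maps to such a table and back. They are illustrations of the data shape only, not Flow Server functions.

```python
from pathlib import Path
from typing import List


def file_to_byte_table(path: Path) -> List[int]:
    """Read a file into a list of byte values (0-255), one per row."""
    return list(path.read_bytes())


def byte_table_to_bytes(rows: List[int]) -> bytes:
    """Inverse: rebuild the raw bytes, e.g. to verify a round trip."""
    return bytes(rows)  # raises ValueError if a value is outside 0-255
```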

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Upload file from local file system

Uploads a single file from file system to a SharePoint Document Library.

Parameters

| Parameter | Description |

|---|---|

| Local filename | Full path to the file to upload. Note that the account the Flow Server application pool runs as must have access to the file. |

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Remote filename | Remote filename to use in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Overwrite if exists | Specifies whether to overwrite remote file if it already exists or abort |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Upload file from stream

Uploads a single file from a stream to a SharePoint Document Library.

Parameters

| Parameter | Description |

|---|---|

| Source stream | Simple variable containing a stream |

| Document Library Name | Name of Document Library in SharePoint (not id) |

| Filename | Remote filename to use in Document Library, including any extensions or folders if applicable. E.g. "file.txt" or "subfolder1/subfolder2/file.txt" |

| Overwrite if exists | Specifies whether to overwrite remote file if it already exists or abort |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Set description

Sets a new description on a Document Library.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| New description | New description to set on Document Library |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Set name

Sets a new title on a Document Library.

Parameters

| Parameter | Description |

|---|---|

| Document Library Name | Name of Document Library in SharePoint (not id) |

| New title | The new title |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

Add attached file (from bytes)

Uploads a file as an attachment to a task item.

Parameters

| Parameter | Description |

|---|---|

| Task app name | Name of Task app in SharePoint (not id) |

| Task ID | ID of task |

| Remote filename | Remote filename |

| Source bytes | Table (consisting of bytes) to read from |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to the operation failure. It is 0 if no failure occurred. |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Add attached file (from file system)

Uploads a file as an attachment to a task item.

Parameters

| Parameter | Description |

|---|---|

| Task app name | Name of Task app in SharePoint (not id) |

| Task ID | ID of task |

| Remote filename | Remote filename |

| Local filename | Full path to the file to upload. Note that the account the Flow Server application pool runs as must have access to the file. |

Output

Record with information about whether operation failed or not.

| Member | Description |

|---|---|

| HasFailed | Whether operation has failed or not |

| ErrorMessage | A description of the failure, if any |

| ErrorCode | An error code related to operation failure. Is 0 if no failure occured |

Errors

| Error code | Description |

|---|---|

| 1000 | Unspecified error. |

| 1000001 | User is not authorized to perform operation. |

| 1000004 | Item with given id does not exist. |

Add attached file (from stream)

Uploads a file as an attachment to a task item.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |
| Filename | Filename to give the attachment in SharePoint |
| Source stream | Simple variable containing a stream |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Delete all attached files

Deletes all attachments on the provided task item.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |
| Filename | Remote filename |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Delete attached file

Deletes an attachment on the provided task item.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |
| Filename | Name of the attached file in SharePoint |

Output

Record with information about whether the operation failed.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |
| 1000005 | File not found. |

List attached files

Lists all files attached to the specified task item.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Get task information by ID

Gets information, such as start date and due date, about a task.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Record variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

Get task information by name

Gets information, such as start date and due date, about all tasks with the given name.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task name | Name of task |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |

Get task information by query

Gets information about tasks using the provided CAML query. For example, the following query returns all tasks that are at least 50% complete:

```xml
<View>
  <Query>
    <Where>
      <Geq>
        <FieldRef Name='PercentComplete' />
        <Value Type='Number'>0.50</Value>
      </Geq>
    </Where>
  </Query>
</View>
```
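When the threshold varies, the same CAML view can be generated rather than typed by hand. The following is a minimal Python sketch using the standard `xml.etree.ElementTree` module; the `percent_complete_at_least` function is a hypothetical helper, and how the resulting string is passed into the CAML Query parameter of a Flow workflow is left open. As in the example above, PercentComplete is expressed as a fraction, so 0.50 means 50%.

```python
import xml.etree.ElementTree as ET


def percent_complete_at_least(fraction: float) -> str:
    """Build a CAML View selecting tasks whose PercentComplete >= fraction.

    PercentComplete is given as a fraction, so 0.5 means 50%.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    view = ET.Element("View")
    where = ET.SubElement(ET.SubElement(view, "Query"), "Where")
    geq = ET.SubElement(where, "Geq")
    ET.SubElement(geq, "FieldRef", Name="PercentComplete")
    value = ET.SubElement(geq, "Value", Type="Number")
    value.text = f"{fraction:.2f}"
    # Serialize to a str (not bytes) so it can be used directly as query text.
    return ET.tostring(view, encoding="unicode")


print(percent_complete_at_least(0.5))
```

Building the XML with ElementTree rather than string concatenation keeps the element nesting and attribute escaping correct as the query grows.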

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| CAML Query | The query to send to SharePoint. |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |

Get task property value

Gets the value of a field of a given task. This is an advanced operation that requires deeper knowledge of how SharePoint works. The Property parameter is the internal identifier of a field, which might differ from the name displayed in SharePoint. For example, use 'Body' as Property to get the value of 'Description'.
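The difference between display names and internal field names can be illustrated with a small lookup. This Python sketch assumes a hand-maintained mapping for a few standard SharePoint task fields; the `to_internal_name` helper is hypothetical. For custom columns it falls back to SharePoint's convention of encoding a space in the internal name as `_x0020_`. SharePoint encodes other special characters as well, which this sketch does not handle, so the reliable source of internal names remains the List fields of tasks operation.

```python
# Display name -> internal name for a few standard SharePoint task fields.
STANDARD_TASK_FIELDS = {
    "Task Name": "Title",
    "Description": "Body",
    "Start Date": "StartDate",
    "Due Date": "DueDate",
    "% Complete": "PercentComplete",
    "Assigned To": "AssignedTo",
}


def to_internal_name(display_name: str) -> str:
    """Guess the internal field name to pass as the Property parameter."""
    if display_name in STANDARD_TASK_FIELDS:
        return STANDARD_TASK_FIELDS[display_name]
    # Fallback for custom columns: SharePoint encodes spaces as _x0020_.
    # Other special characters are also encoded by SharePoint but not handled here.
    return display_name.replace(" ", "_x0020_")


print(to_internal_name("Description"))   # Body
print(to_internal_name("Project Code"))  # Project_x0020_Code
```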

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Task ID | ID of task |
| Property | Internal name of the field |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Simple variable containing the results of the operation |

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |
| 1000004 | Item with given id does not exist. |

List tasks

Lists all tasks.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Row limit | Maximum number of tasks to list. A value of -1 indicates no limit |
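The Row limit semantics can be summarized in a few lines. This Python sketch assumes the limit is applied to an already retrieved list of rows; the `apply_row_limit` function is hypothetical, and rejecting negative values other than -1 is an assumption, since the documentation only defines -1.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

NO_LIMIT = -1  # documented value meaning "no limit"


def apply_row_limit(rows: Sequence[T], row_limit: int) -> List[T]:
    """Return at most row_limit rows, or all rows when row_limit is -1."""
    if row_limit == NO_LIMIT:
        return list(rows)
    if row_limit < 0:
        # Assumption: other negative values are treated as invalid.
        raise ValueError("row_limit must be -1 or a non-negative integer")
    return list(rows[:row_limit])


tasks = ["Task 1", "Task 2", "Task 3"]
print(apply_row_limit(tasks, 2))   # ['Task 1', 'Task 2']
print(apply_row_limit(tasks, -1))  # ['Task 1', 'Task 2', 'Task 3']
```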

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |

List fields of tasks

Lists the fields that are available for tasks in the Task app.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Include read only fields | Specifies whether to include read-only fields in the results of the operation |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |

List tasks assigned to current user

Lists all tasks assigned to the current user, optionally including finished tasks.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| Include already finished tasks | Specifies whether to also include completed tasks |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value

Errors

| Error code | Description |
|---|---|
| 1000 | Unspecified error. |
| 1000001 | User is not authorized to perform operation. |

List tasks assigned to specific user

Lists all tasks assigned to the specified user, optionally including finished tasks.

Parameters

| Parameter | Description |
|---|---|
| Task app name | Name of Task app in SharePoint (not id) |
| User ID | ID of user |
| Include already finished tasks | Specifies whether to also include completed tasks |

Output

Record with information about whether the operation failed. If successful, the result of the operation is stored in the Results member.

| Member | Description |
|---|---|
| HasFailed | Whether the operation has failed |
| ErrorMessage | A description of the failure, if any |
| ErrorCode | An error code related to the failure, or 0 if no failure occurred |
| Results | Table variable containing the results of the operation |

Structure of Results

Results
- UserID
  - Value
- TaskID
  - Value